一个非常有趣的SQL优化案例

问题描述

分析

表的信息

估算cost

start-up cost

run cost

执行计划

实际执行时间

从内核视角来分析

解决方案

禁用走主键扫描

增加(user_id, id)索引

写在最后

Coding过程中经常会写SQL语句,有时写的SQL出现慢查询而被DBA鄙视。我们一起从使用者,DBA,内核开发三个不同角度来分析和解决一个SQL性能问题。

问题描述

A:两条SQL语句只有limit不一样,而 limit 1的执行比limit 10的慢N倍我:是不是缓存问题,先执行 limit 10再执行limit 1试试A:......,执行了, limit还是很慢

select xxx from user_gift where user_id=11695667 and user_type = 'default' order by id desc limit 10;

select xxx from user_gift where user_id=11695667 and user_type = 'default' order by id desc limit 1;

表结构

# \d user_gift;Table "yay.user_gift"Column | Type | Collation | Nullable | Default--------------+--------------------------+-----------+----------+------------------------------------------------id | bigint | | not null | nextval('user_gift_id_seq'::regclass)user_id | integer | | not null |ug_name | character varying(100) | | not null |expired_time | timestamp with time zone | | | now()created_time | timestamp with time zone | | not null | now()updated_time | timestamp with time zone | | not null | now()user_type | user_type | | not null | 'default'::user_typeIndexes:"user_gift_pkey" PRIMARY KEY, btree (id)"idx_user_type" btree (user_id, ug_name)"user_gift_ug_name_idx" btree (ug_name)

分析

执行计划

# explain analyze verbose select xxx from user_gift where user_id=11695667 and user_type = 'default' order by id desc limit 1;QUERY PLAN---------------------------------------------------------------------------------------------------------------------------------------------------------------------------Limit (cost=0.43..416.25 rows=1 width=73) (actual time=135.213..135.214 rows=1 loops=1)Output: xxx-> Index Scan Backward using user_gift_pkey on yay.user_gift (cost=0.43..368000.44 rows=885 width=73) (actual time=135.212..135.212 rows=1 loops=1)Output: xxxFilter: ((user_gift.user_id = 11695667) AND (user_gift.user_type = 'default'::user_type))Rows Removed by Filter: 330192Planning Time: 0.102 msExecution Time: 135.235 ms(8 rows)Time: 135.691 ms

# explain analyze verbose select xxx from user_gift where user_id=11695667 and user_type = 'default' order by id desc limit 10;QUERY PLAN----------------------------------------------------------------------------------------------------------------------------------------------------------Limit (cost=868.20..868.22 rows=10 width=73) (actual time=1.543..1.545 rows=10 loops=1)Output: xxx-> Sort (cost=868.20..870.41 rows=885 width=73) (actual time=1.543..1.543 rows=10 loops=1)Output: xxxSort Key: user_gift.id DESCSort Method: top-N heapsort Memory: 27kB-> Index Scan using idx_user_type on yay.user_gift (cost=0.56..849.07 rows=885 width=73) (actual time=0.020..1.366 rows=775 loops=1)Output: xxxIndex Cond: (user_gift.user_id = 11695667)Filter: (user_gift.user_type = 'default'::user_type)Planning Time: 0.079 msExecution Time: 1.564 ms(12 rows)Time: 1.871 ms

limit 1语句 :使用主键进行倒序扫描,Index Scan Backward using user_gift_pkey on yay.user_giftlimit 10语句 :使用(user_id, user_type)复合索引直接查找用户数据,Index Scan using idx_user_type on yay.user_gift

limit 1的total costLimit (cost=0.43..416.25 rows=1 width=73) 是416,run cost是416-0.43=415.57。而limit 10的total costLimit (cost=868.20..868.22 rows=10 width=73)是868.22。Index Scan Backward using user_gift_pkey的方式估算,那么limit 1成本是415, limit 2是415*2=830, limit 3 是 1245,大于868,所以当limit 3的时候会使用Index Scan using idx_user_type扫索引的计划。# explain select xxx from user_gift where user_id=11695667 and user_type = 'default' order by id desc limit 2;QUERY PLAN-------------------------------------------------------------------------------------------------------------------------Limit (cost=0.43..831.95 rows=2 width=73)-> Index Scan Backward using user_gift_pkey on user_gift (cost=0.43..367528.67 rows=884 width=73)Filter: ((user_id = 11695667) AND (user_type = 'default'::user_type))(3 rows)Time: 0.341 ms# explain select xxx from user_gift where user_id=11695667 and user_type = 'default' order by id desc limit 3;QUERY PLAN----------------------------------------------------------------------------------------------------------Limit (cost=866.19..866.20 rows=3 width=73)-> Sort (cost=866.19..868.40 rows=884 width=73)Sort Key: id DESC-> Index Scan using idx_user_type on user_gift (cost=0.56..854.76 rows=884 width=73)Index Cond: (user_id = 11695667)Filter: (user_type = 'default'::user_type)(6 rows)Time: 0.352 ms

当 limit 2时,执行计划是Index Scan Backward using user_gift_pkey当 limit 3时,就改变计划了,Index Scan using idx_user_type on user_gift

实际执行时间

limit 1时成本估算的是416.25,比limit 10的868.22还是要快的。但是实际

limit 1执行cost是135.691 ms,而limit 10执行cost是1.871 ms,比limit 10慢了70倍!!!# explain (analyze, buffers, verbose) select xxx from user_gift where user_id=11695667 and user_type = 'default' order by id desc limit 1;QUERY PLAN---------------------------------------------------------------------------------------------------------------------------------------------------------------------------Limit (cost=0.43..416.29 rows=1 width=73) (actual time=451.542..451.544 rows=1 loops=1)Output: xxxBuffers: shared hit=214402 read=5280 dirtied=2302I/O Timings: read=205.027-> Index Scan Backward using user_gift_pkey on yay.user_gift (cost=0.43..368032.94 rows=885 width=73) (actual time=451.540..451.540 rows=1 loops=1)Output: xxxFilter: ((user_gift.user_id = 11695667) AND (user_gift.user_type = 'default'::user_type))Rows Removed by Filter: 333462Buffers: shared hit=214402 read=5280 dirtied=2302I/O Timings: read=205.027Planning Time: 1.106 msExecution Time: 451.594 ms(12 rows)

# explain (analyze, buffers, verbose) select xxx from user_gift where user_id=11695667 and user_type = 'default' order by id desc limit 3;QUERY PLAN-----------------------------------------------------------------------------------------------------------------------------------------------------------Limit (cost=860.51..860.52 rows=3 width=73) (actual time=14.633..14.634 rows=3 loops=1)Output: xxxBuffers: shared hit=467 read=321I/O Timings: read=10.112-> Sort (cost=860.51..862.72 rows=885 width=73) (actual time=14.632..14.632 rows=3 loops=1)Output: xxxSort Key: user_gift.id DESCSort Method: top-N heapsort Memory: 25kBBuffers: shared hit=467 read=321I/O Timings: read=10.112-> Index Scan using idx_user_type on yay.user_gift (cost=0.56..849.07 rows=885 width=73) (actual time=0.192..14.424 rows=775 loops=1)Output: xxxIndex Cond: (user_gift.user_id = 11695667)Filter: (user_gift.user_type = 'default'::user_type)Buffers: shared hit=464 read=321I/O Timings: read=10.112Planning Time: 0.111 msExecution Time: 14.658 ms(18 rows)

limit 1时的IO成本I/O Timings: read=205.027,Rows Removed by Filter: 333462显示过滤了333462行记录limit 3时IO成本I/O Timings: read=10.112,

Buffers: shared hit=214402 read=5280 dirtied=2302可以看出limit 1的计划从磁盘读取了5280个blocks(pages)才找到符合where条件的记录。schemaname | yaytablename | user_giftattname | idinherited | fnull_frac | 0avg_width | 8n_distinct | -1most_common_vals |most_common_freqs |histogram_bounds | {93,9817,19893,28177,.......}correlation | 0.788011most_common_elems |most_common_elem_freqs |elem_count_histogram |schemaname | yaytablename | user_giftattname | user_idinherited | fnull_frac | 0avg_width | 4n_distinct | -0.175761most_common_vals | {11576819,10299480,14020501,.......,11695667,......}most_common_freqs | {0.000353333,0.000326667,0.000246667,......,9.33333e-05,......}histogram_bounds | {3,10002181,10005599,10009672,......,11693300,11698290,......}correlation | 0.53375most_common_elems |most_common_elem_freqs |elem_count_histogram |schemaname | yaytablename | user_giftattname | user_typeinherited | fnull_frac | 0avg_width | 4n_distinct | 3most_common_vals | {default, invalid, deleted}most_common_freqs | {0.997923,0.00194,0.000136667}histogram_bounds |correlation | 0.99763most_common_elems |most_common_elem_freqs |elem_count_histogram |

user_id字段的most_common_vals中有11695667(user_id)的值,则可以直接通过其对应的most_common_freqs来得到其selectivity是9.33333e-05;user_type字段为default对应的selectivity是0.997923。所以 where user_id=11695667 and user_type='default'的selectivity是0.0000933333*0.997923 = 0.0000931394467359。

(cost=0.43..367528.67 rows=884 width=73)的884行一样。从user_gift_pkey(主键id)扫描的话:只要扫描9499740/884=10746行就能找到满足条件的记录,且无须进行排序( order by id desc)从idx_user_type索引扫描的话:虽然能很快找到此用户的数据,但是需要给884行进行排序,扫描+排序的cost比从主键扫描要高。

表最大的page=128709

# select max(ctid) from user_gift;max-------------(128709,29)(1 row)

user id=11695667的最大page=124329

# select max(ctid), min(ctid) from user_gift where user_id=11695667;max | min-------------+-----------(124329,22) | (3951,64)(1 row)

表本身的pages和tuples数量

# SELECT relpages, reltuples FROM pg_class WHERE relname = 'user_gift';relpages | reltuples----------+-------------128875 | 9.49974e+06(1 row)

limit 1时扫描了5280个pages(包含了主键索引的pages),过滤了333462万行记录,和估算的基本一样:Rows Removed by Filter: 333462Buffers: shared hit=214402 read=5280 dirtied=2302I/O Timings: read=205.027

优化器假设数据分布均匀,只需要扫描10746个记录 而实际需要扫描322862个记录

[root]$ fio -name iops -rw=read -bs=8k -runtime=10 -iodepth=1 -filename /dev/sdb -ioengine libaio -direct=1...Run status group 0 (all jobs):READ: bw=193MiB/s (202MB/s), 193MiB/s-193MiB/s (202MB/s-202MB/s), io=1928MiB (2022MB), run=10001-10001msec

fio结果可以看出,此数据库机器磁盘的顺序读取速度约为 200MB/s,那么扫描40MB数据需要约200ms,与实际需要的时间205ms基本相等。postgreSQL的优化器认为数据分布是均匀的,只需要倒序扫描很快就找到符合条件的记录,而实际上此用户的数据分布在表的前端,就导致了实际执行start-up time如此慢了。

从内核视角来分析

优化器如何估算cost 优化器如何统计actual time

表的信息

主键索引

# SELECT relpages, reltuples FROM pg_class WHERE relname = 'user_gift_pkey';relpages | reltuples----------+-------------40035 | 9.49974e+06(1 row)

user_id 索引

# SELECT relpages, reltuples FROM pg_class WHERE relname = 'idx_user_type';relpages | reltuples----------+-------------113572 | 9.49974e+06(1 row)

表本身的pages是128875

# SELECT relpages, reltuples FROM pg_class WHERE relname = 'user_gift';relpages | reltuples----------+-------------128875 | 9.49974e+06(1 row)

user id=11695667的数据775行

=# select count(1) from user_gift where user_id=11695667;count-------775(1 row)=# select count(1) from user_gift where user_id=11695667 and user_type = 'default' ;count-------775(1 row)

树高度

# 主键高度# select * from bt_metap('user_gift_pkey');magic | version | root | level | fastroot | fastlevel | oldest_xact | last_cleanup_num_tuples--------+---------+------+-------+----------+-----------+-------------+-------------------------340322 | 3 | 412 | 2 | 412 | 2 | 0 | 9.31928e+06(1 row)// idx_user_type 高度# select * from bt_metap('idx_user_type');magic | version | root | level | fastroot | fastlevel | oldest_xact | last_cleanup_num_tuples--------+---------+-------+-------+----------+-----------+-------------+-------------------------340322 | 3 | 15094 | 3 | 15094 | 3 | 0 | 9.49974e+06(1 row)

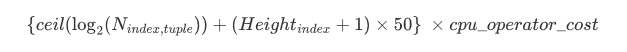

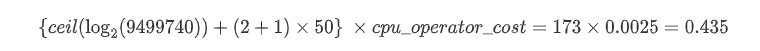

估算cost

start-up cost

// selfuncs.cvoidbtcostestimate(PlannerInfo *root, IndexPath *path, double loop_count,Cost *indexStartupCost, Cost *indexTotalCost,Selectivity *indexSelectivity, double *indexCorrelation,double *indexPages){......descentCost = ceil(log(index->tuples) / log(2.0)) * cpu_operator_cost;costs.indexStartupCost += descentCost;......// This cost is somewhat arbitrarily set at 50x cpu_operator_cost per page toucheddescentCost = (index->tree_height + 1) * 50.0 * cpu_operator_cost;costs.indexStartupCost += descentCost;......}

N(index,tuple) :索引tuples(记录)数量 Height(index) :索引B+tree的高度 cpu_operator_cost : 默认值0.0025

N(index,tuple) :9.49974e+06, Height(index) :2

和postgreSQL估算的start-up cost=0.43 一样。

N(index,tuple) :9.49974e+06, Height(index) :3

run cost

src/backend/optimizer/path/costsize.c的cost_index函数。

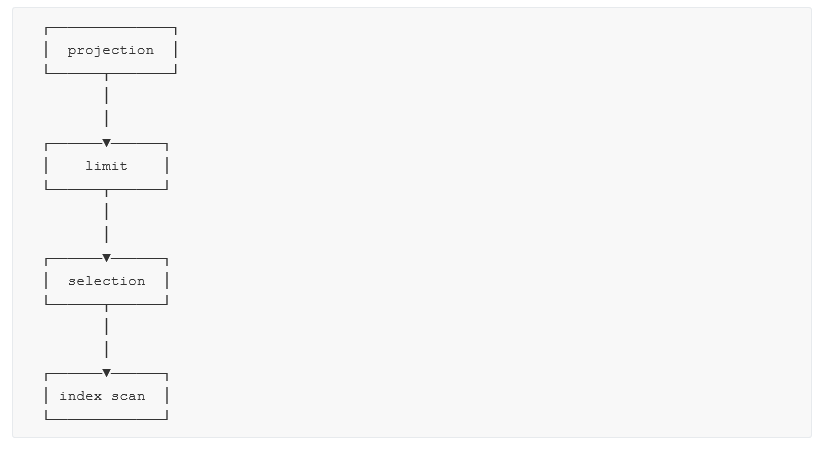

index scan executor:扫描到一个tuple,就返回给selection executor selection executor:对tuple进行过滤,如果符合条件则返回给limit executor,如果不符合则继续调用index scan executor limit executor:当达到limit限制则将数据返回给projection executor projection executor:过滤掉非 select列的数据

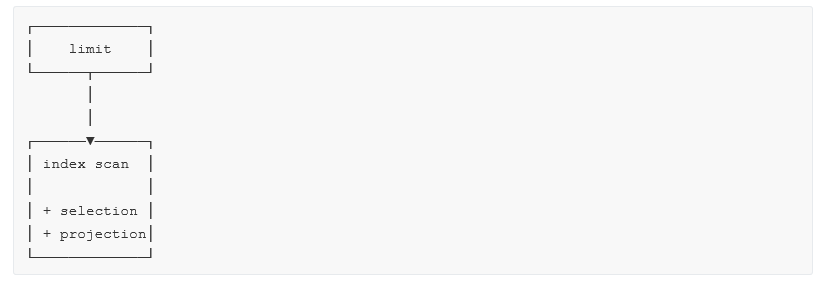

selection executor和projection executor合并到index scan executor中执行,以减少数据在executor之间的传递。

index scan executor:扫描到tuple,然后进行selection过滤,如果符合条件就进行projection再返回给limit,如果不符合条件,则继续扫描 limit executor:当达到limit限制则将数据返回

// src/backend/executor/execProcnode.cstatic TupleTableSlot *ExecProcNodeInstr(PlanState *node){TupleTableSlot *result;InstrStartNode(node->instrument);result = node->ExecProcNodeReal(node);// 统计执行指标InstrStopNode(node->instrument, TupIsNull(result) ? 0.0 : 1.0);return result;}

where语句的第一条结果为止。user_id=xxx的过滤已经下沉到index scan executor里面了。---> int4eq(FunctionCallInfo fcinfo) (/home/ken/cpp/postgres/src/backend/utils/adt/int.c:379)ExecInterpExpr(ExprState * state, ExprContext * econtext, _Bool * isnull) (/home/ken/cpp/postgres/src/backend/executor/execExprInterp.c:704)ExecInterpExprStillValid(ExprState * state, ExprContext * econtext, _Bool * isNull) (/home/ken/cpp/postgres/src/backend/executor/execExprInterp.c:1807)ExecEvalExprSwitchContext(ExprState * state, ExprContext * econtext, _Bool * isNull) (/home/ken/cpp/postgres/src/include/executor/executor.h:322)---> ExecQual(ExprState * state, ExprContext * econtext) (/home/ken/cpp/postgres/src/include/executor/executor.h:391)ExecScan(ScanState * node, ExecScanAccessMtd accessMtd, ExecScanRecheckMtd recheckMtd) (/home/ken/cpp/postgres/src/backend/executor/execScan.c:227)---> ExecIndexScan(PlanState * pstate) (/home/ken/cpp/postgres/src/backend/executor/nodeIndexscan.c:537)ExecProcNodeInstr(PlanState * node) (/home/ken/cpp/postgres/src/backend/executor/execProcnode.c:466)ExecProcNodeFirst(PlanState * node) (/home/ken/cpp/postgres/src/backend/executor/execProcnode.c:450)ExecProcNode(PlanState * node) (/home/ken/cpp/postgres/src/include/executor/executor.h:248)---> ExecLimit(PlanState * pstate) (/home/ken/cpp/postgres/src/backend/executor/nodeLimit.c:96)ExecProcNodeInstr(PlanState * node) (/home/ken/cpp/postgres/src/backend/executor/execProcnode.c:466)ExecProcNodeFirst(PlanState * node) (/home/ken/cpp/postgres/src/backend/executor/execProcnode.c:450)ExecProcNode(PlanState * node) (/home/ken/cpp/postgres/src/include/executor/executor.h:248)ExecutePlan(EState * estate, PlanState * planstate, _Bool use_parallel_mode, CmdType operation, _Bool sendTuples, uint64 numberTuples, ScanDirection direction, DestReceiver * dest, _Bool execute_once) (/home/ken/cpp/postgres/src/backend/executor/execMain.c:1632)standard_ExecutorRun(QueryDesc * queryDesc, ScanDirection direction, uint64 count, _Bool execute_once) (/home/ken/cpp/postgres/src/backend/executor/execMain.c:350)ExecutorRun(QueryDesc * queryDesc, ScanDirection direction, uint64 count, _Bool execute_once) (/home/ken/cpp/postgres/src/backend/executor/execMain.c:294)ExplainOnePlan(PlannedStmt * plannedstmt, IntoClause * into, ExplainState * es, const char * queryString, ParamListInfo params, QueryEnvironment * queryEnv, const instr_time * planduration, const BufferUsage * bufusage) (/home/ken/cpp/postgres/src/backend/commands/explain.c:571)ExplainOneQuery(Query * query, int cursorOptions, IntoClause * into, ExplainState * es, const char * queryString, ParamListInfo params, QueryEnvironment * queryEnv) (/home/ken/cpp/postgres/src/backend/commands/explain.c:404)ExplainQuery(ParseState * pstate, ExplainStmt * stmt, ParamListInfo params, DestReceiver * dest) (/home/ken/cpp/postgres/src/backend/commands/explain.c:275)

ExecQual(qual, econtext)是对tuple进行过滤,因为selection已经合并到scan中了。TupleTableSlot *ExecScan(ScanState *node, ExecScanAccessMtd accessMtd, ExecScanRecheckMtd recheckMtd){......for (;;){TupleTableSlot *slot;slot = ExecScanFetch(node, accessMtd, recheckMtd);......econtext->ecxt_scantuple = slot;// Note : selection判断if (qual == NULL || ExecQual(qual, econtext)){if (projInfo){return ExecProject(projInfo);}else{return slot;}}elseInstrCountFiltered1(node, 1);}}

解决方案

禁用走主键扫描

# explain analyze verbose select xxx from user_gift where user_id=11695667 and user_type = 'default' order by id+0 desc limit 1;

order by id改成order by id+0,由于id+0是个表达式所以优化器就就不会使用user_gift_pkey这个索引了。增加(user_id, id)索引

create index idx_user_id on user_gift(user_id, id);

写在最后

END

END

分享一下我写的《10万字Springboot经典学习笔记》中,点击下面小卡片,进入【Java秃头哥】,回复:笔记,即可免费获取。

点赞是最大的支持

评论