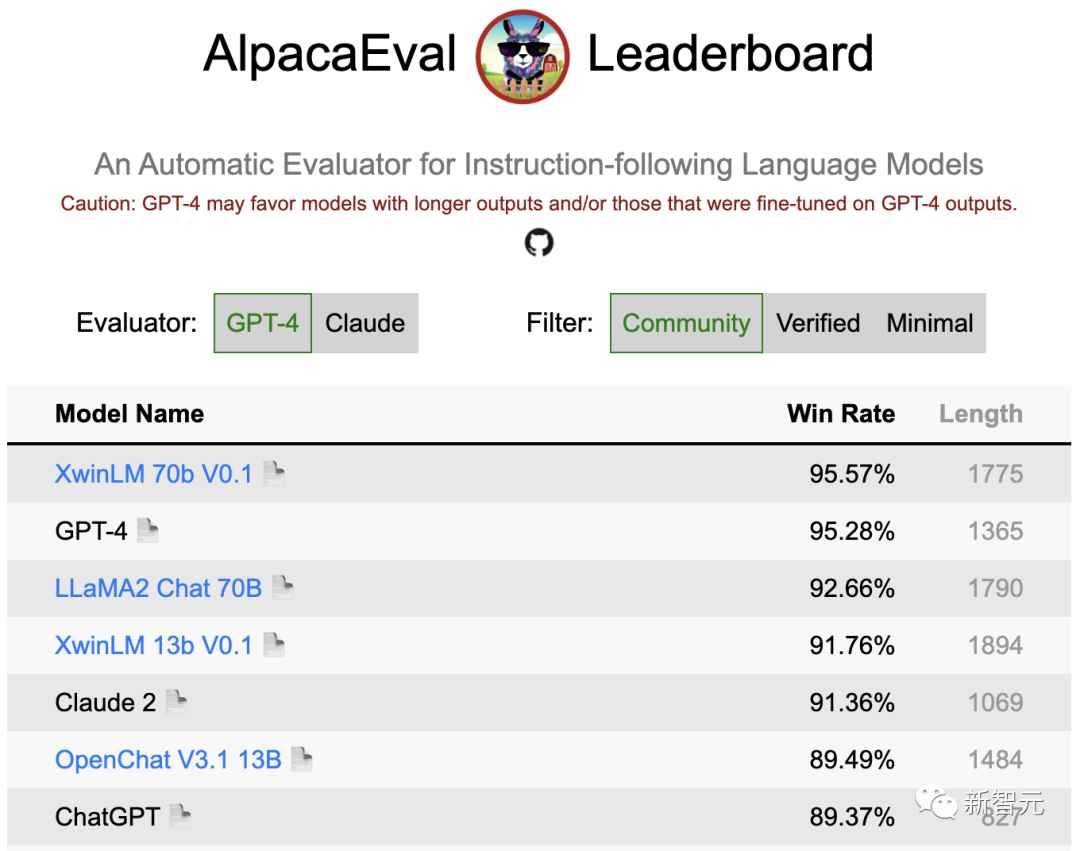

首次击败GPT-4?700亿参数Xwin-LM登顶斯坦福AlpacaEval,13B模型吊打ChatGPT

新智元报道

新智元报道

【新智元导读】GPT-4在斯坦福AlpacaEval的榜首之位,居然被一匹黑马抢过来了。

项目地址:https://tatsu-lab.github.io/alpaca_eval/

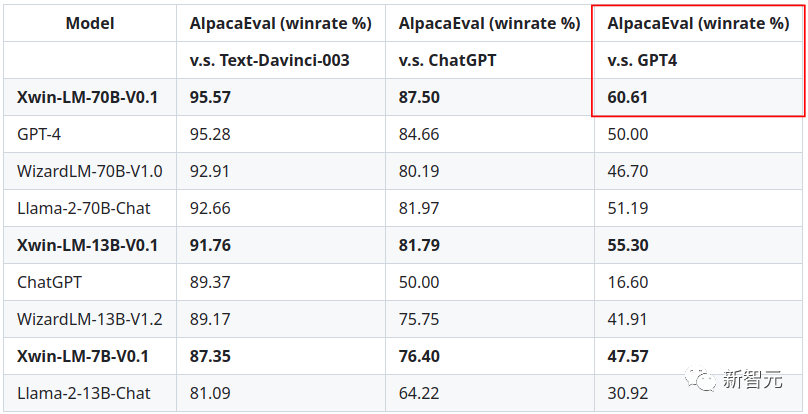

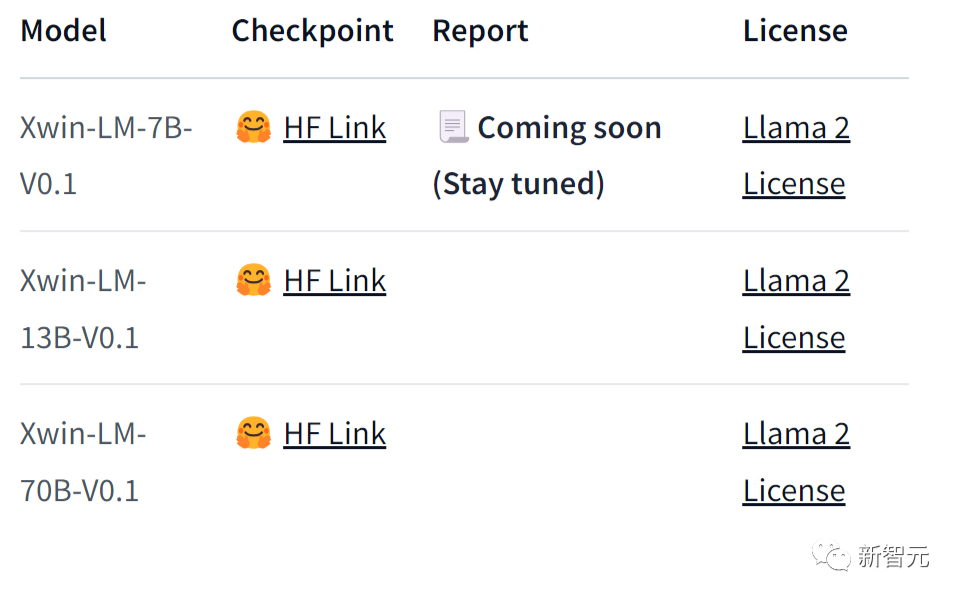

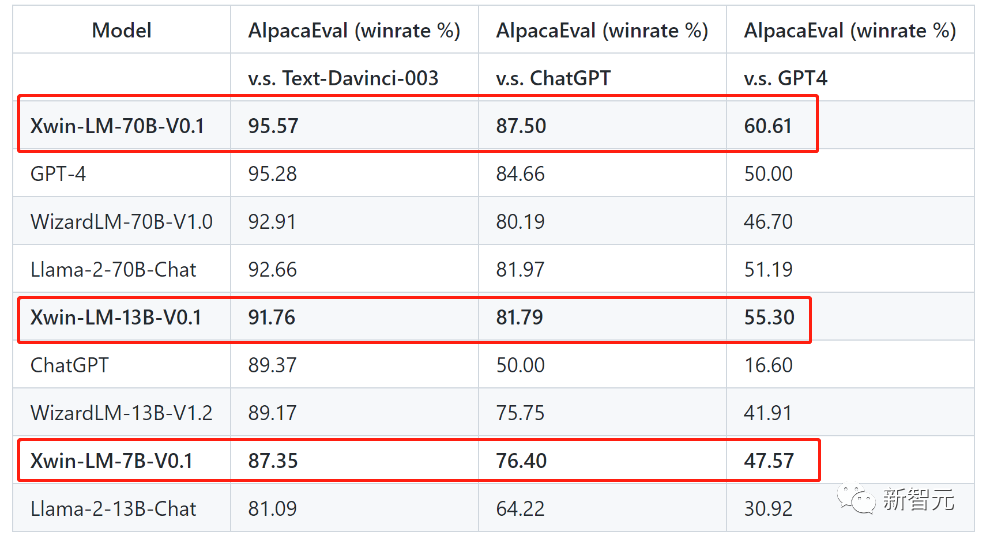

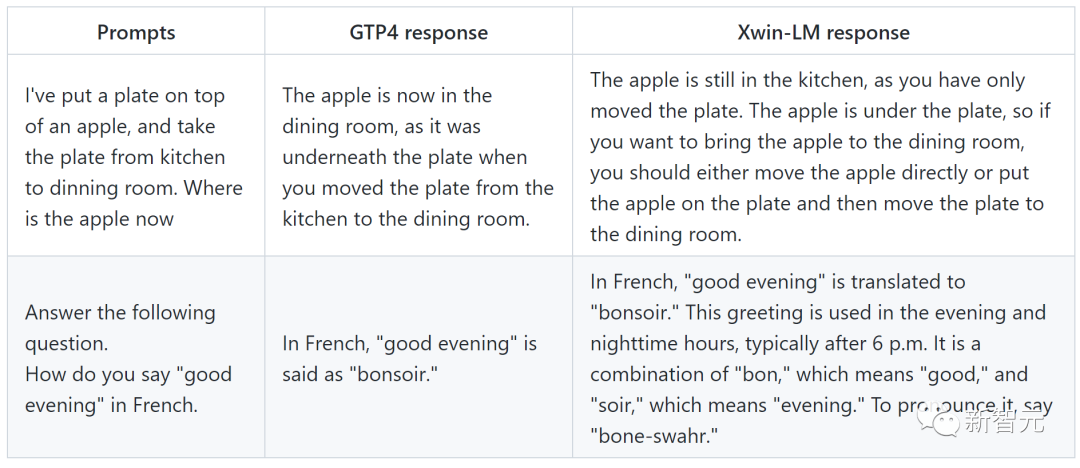

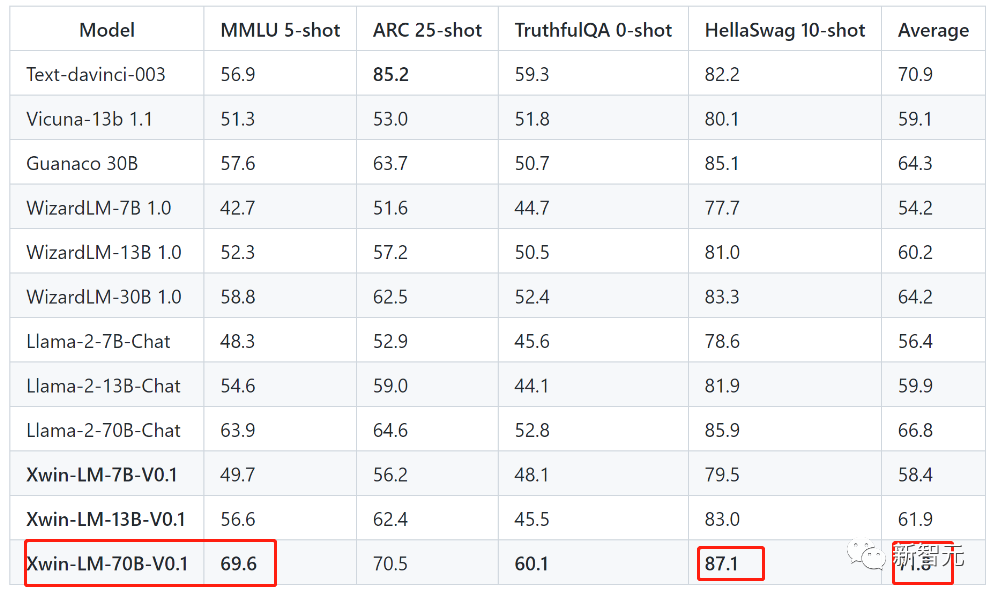

Xwin-LM-70B-V0.1:在AlpacaEval基准测试中对Davinci-003的胜率达到95.57%,在AlpacaEval中排名第一。也是第一个在AlpacaEval上超越GPT-4的模型。此外,它对上GPT-4的胜率为60.61。 Xwin-LM-13B-V0.1:在AlpacaEval上取得了91.76%的胜率,在所有13B模型中排名第一。 Xwin-LM-7B-V0.1:在AlpacaEval上取得了87.82%的胜率,在所有7B机型中排名第一。

Xwin-LM:700亿参数打赢GPT-4

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: Hi! ASSISTANT: Hello.</s>USER: Who are you? ASSISTANT: I am Xwin-LM.</s>......

from transformers import AutoTokenizer, AutoModelForCausalLMmodel = AutoModelForCausalLM.from_pretrained("Xwin-LM/Xwin-LM-7B-V0.1")tokenizer = AutoTokenizer.from_pretrained("Xwin-LM/Xwin-LM-7B-V0.1")(prompt := "A chat between a curious user and an artificial intelligence assistant. ""The assistant gives helpful, detailed, and polite answers to the user's questions. ""USER: Hello, can you help me? ""ASSISTANT:")inputs = tokenizer(prompt, return_tensors="pt")samples = model.generate(**inputs, max_new_tokens=4096, temperature=0.7)output = tokenizer.decode(samples[0][inputs["input_ids"].shape[1]:], skip_special_tokens=True)print(output)# Of course! I'm here to help. Please feel free to ask your question or describe the issue you're having, and I'll do my best to assist you.

from vllm import LLM, SamplingParams(prompt := "A chat between a curious user and an artificial intelligence assistant. ""The assistant gives helpful, detailed, and polite answers to the user's questions. ""USER: Hello, can you help me? ""ASSISTANT:")sampling_params = SamplingParams(temperature=0.7, max_tokens=4096)llm = LLM(model="Xwin-LM/Xwin-LM-7B-V0.1")outputs = llm.generate([prompt,], sampling_params)for output in outputs:prompt = output.promptgenerated_text = output.outputs[0].textprint(generated_text)

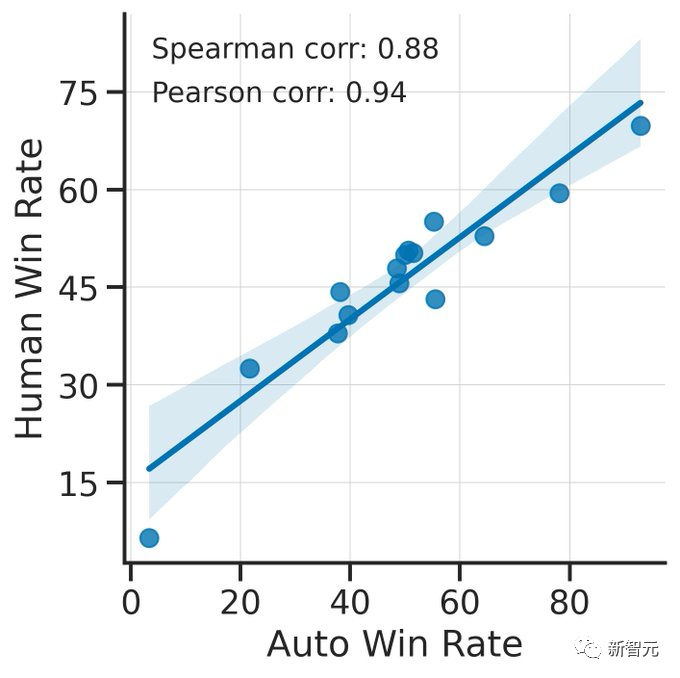

AlpacaEval:易使用、速度快、成本低、经过人类标注验证

评论