Using Deep Learning to Read and Classify Scanned Documents


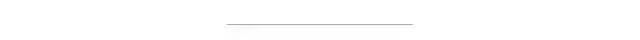

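The first thing we need to do is create a simple dataset so that we can test each part of our workflow. Ideally, the dataset would contain scanned documents of varying legibility and from different time periods, along with the high-level topic each document belongs to. I couldn't find a dataset with these exact specifications, so I set out to build my own. The high-level topics I settled on were government, letters, smoking, and patents. The choice was fairly arbitrary, made mainly because a wide variety of scanned documents is available for each of these areas.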

From each of these sources I picked around 20 reasonably sized documents and placed them in separate folders, one per topic.

After nearly a full day of searching for and cataloguing all the images, we resized them all to 600x800 and converted them to PNG format.

The simple resize-and-convert script is as follows:

```python
import os

from PIL import Image

img_folder = r'F:\Data\Imagery\OCR'  # Folder containing topic folders (e.g. "News", "Letters", etc.)

for subfol in os.listdir(img_folder):  # For each topic folder
    sfpath = os.path.join(img_folder, subfol)
    if not os.path.isdir(sfpath):  # Skip stray files next to the topic folders
        continue
    for imgfile in os.listdir(sfpath):  # Get all images in the topic
        imgpath = os.path.join(sfpath, imgfile)
        img = Image.open(imgpath)       # Read in the image with Pillow
        img = img.resize((600, 800))    # Resize to 600 (width) x 800 (height)
        newip = os.path.splitext(imgpath)[0] + ".png"  # Same name, .png extension
        img.save(newip)                 # Pillow picks the PNG format from the extension
```

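Optical character recognition (OCR) is the process of extracting text from an image. It is usually done with machine learning models, most commonly through pipelines that include convolutional neural networks. While we could train a custom OCR model for our application, that would require far more training data and compute. Instead, we will use Microsoft's excellent Computer Vision API, which includes a module dedicated to OCR. The API call takes an image (here, a PIL image serialized to PNG bytes) and returns several pieces of information, including the location and orientation of the text in the image as well as the text itself. The following function takes a list of PIL images and returns a list of extracted texts of the same length: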

```python
import http.client
import io
import json
import urllib.parse


def image_to_text(imglist):
    '''Take in a list of PIL images and return a list of extracted text using OCR'''
    headers = {  # Request headers
        'Content-Type': 'application/octet-stream',
        'Ocp-Apim-Subscription-Key': 'YOUR_KEY_HERE',
    }
    params = urllib.parse.urlencode({  # Request parameters
        'language': 'en',
        'detectOrientation': 'true',  # No trailing space in the parameter name
    })
    outtext = []
    for docnum, ocr_image in enumerate(imglist):
        print("Processing document -- ", docnum)
        curr_text = []  # Reset per image so a failed call yields "" instead of stale or undefined text
        # The Computer Vision API rejects images smaller than 50x50 px
        if ocr_image.height < 50 or ocr_image.width < 50:
            outtext.append("")
            continue
        img_bytes = io.BytesIO()
        ocr_image.save(img_bytes, format='PNG')  # Send the image as PNG bytes
        try:
            conn = http.client.HTTPSConnection('westus.api.cognitive.microsoft.com')
            conn.request("POST", "/vision/v1.0/ocr?%s" % params, img_bytes.getvalue(), headers)
            response = conn.getresponse()
            data = json.loads(response.read().decode("utf-8"))
            conn.close()
            # The response nests text as regions -> lines -> words
            for r in data['regions']:
                for l in r['lines']:
                    for w in l['words']:
                        curr_text.append(str(w['text']))
        except Exception as e:
            print("Could not process image", docnum, ":", e)
        outtext.append(' '.join(curr_text))
    return outtext
```
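The nested loop in `image_to_text` walks the OCR response's regions, then lines, then words. To see that parsing step without calling the API, here is a minimal offline sketch run against a hand-written sample response. Only the `regions`/`lines`/`words`/`text` nesting is taken from the loop above; the other fields and all `boundingBox` values are illustrative assumptions. The hypothetical `flatten_ocr_lines` variant keeps one output line per OCR line, which may read better for letters and forms.

```python
import json

# Hand-written stand-in for an OCR response; only the nesting
# regions -> lines -> words -> text matters here (boundingBox values are made up).
SAMPLE_RESPONSE = json.dumps({
    "language": "en",
    "orientation": "Up",
    "regions": [
        {"boundingBox": "10,10,200,40",
         "lines": [
             {"boundingBox": "10,10,200,20",
              "words": [{"boundingBox": "10,10,50,20", "text": "Dear"},
                        {"boundingBox": "65,10,60,20", "text": "Sir,"}]},
             {"boundingBox": "10,30,200,20",
              "words": [{"boundingBox": "10,30,80,20", "text": "Thank"},
                        {"boundingBox": "95,30,40,20", "text": "you"}]},
         ]},
    ],
})


def flatten_ocr_response(raw_json):
    '''Join every recognised word, in region/line/word order, into one string'''
    data = json.loads(raw_json)
    words = [w["text"]
             for region in data.get("regions", [])
             for line in region.get("lines", [])
             for w in line.get("words", [])]
    return " ".join(words)


def flatten_ocr_lines(raw_json):
    '''Hypothetical variant: one output line per OCR line'''
    data = json.loads(raw_json)
    return "\n".join(" ".join(w["text"] for w in line.get("words", []))
                     for region in data.get("regions", [])
                     for line in region.get("lines", []))


print(flatten_ocr_response(SAMPLE_RESPONSE))  # Dear Sir, Thank you
print(flatten_ocr_lines(SAMPLE_RESPONSE))
```

Using `.get(..., [])` means a response with no `regions` key (for example an error payload) yields an empty string instead of raising.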

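Since in some cases we may want to end the workflow here, rather than just keeping the extracted text in memory as one huge list, we can also write each extracted text to its own .txt file with the same name as the original input file. Microsoft's OCR is good, but it still makes occasional mistakes. We can reduce some of these with the SpellChecker module (from the pyspellchecker package). The following script takes an input folder and an output folder, reads all the scanned documents in the input folder, runs them through our OCR function, spell-checks the result to correct misspelled words, and finally writes the raw .txt files to the output folder.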

```python
'''
Read in a list of scanned images (as .png files of at least 50x50 px) and output
a set of .txt files containing the text content of these scans
'''
import os

from PIL import Image
from spellchecker import SpellChecker

from functions import image_to_text  # Local module holding the image_to_text() function above

INPUT_FOLDER = r'F:\Data\Imagery\OCR2\Images'
OUTPUT_FOLDER = r'F:\Research\OCR\Outputs\AllDocuments'

## First, read in all the scanned document images as PIL images
scanned_docs_path = [x for x in os.listdir(INPUT_FOLDER) if x.endswith('.png')]
scanned_docs = [Image.open(os.path.join(INPUT_FOLDER, path)) for path in scanned_docs_path]

## Second, use the Microsoft CV API to extract text from these images with OCR
scanned_docs_text = image_to_text(scanned_docs)

## Third, correct misspellings that might have come from bad OCR readings
spell = SpellChecker()
for i, doc_text in enumerate(scanned_docs_text):
    words = doc_text.split()
    misspelled = spell.unknown(words)  # Pass a list of words, not the raw string
    for j, w in enumerate(words):
        if w.lower() in misspelled:  # unknown() returns lowercased words
            words[j] = spell.correction(w) or w  # The one "most likely" answer; None if no candidate
    scanned_docs_text[i] = ' '.join(words)

## Fourth, write the extracted text to individual .txt files with the same name as the input files
os.makedirs(OUTPUT_FOLDER, exist_ok=True)
for path, text in zip(scanned_docs_path, scanned_docs_text):
    text_file_path = os.path.join(OUTPUT_FOLDER, os.path.splitext(path)[0] + ".txt")
    with open(text_file_path, "wt", encoding="utf-8") as text_file:
        text_file.write(text)
print("Done")
```
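SpellChecker corrects a word by looking for known words within a small edit distance and preferring the most frequent one. As a dependency-free illustration of the same idea, the sketch below swaps in the standard library's `difflib.get_close_matches` (a similarity-ratio match, not what SpellChecker uses internally) against a hypothetical toy vocabulary. Corrected words come back lowercase, and the 0.8 cutoff is an arbitrary choice.

```python
import difflib

# Hypothetical toy vocabulary; a real run would use a full word list.
VOCABULARY = ["the", "patent", "government", "letter", "smoking",
              "tobacco", "office", "dear", "sir"]


def correct_text(text, vocabulary=VOCABULARY, cutoff=0.8):
    '''Replace each unknown word with its closest vocabulary entry, if one is close enough'''
    vocab = set(vocabulary)
    corrected = []
    for word in text.split():
        stripped = word.rstrip(".,;:!?")
        trailing = word[len(stripped):]      # Keep trailing punctuation such as "." or ","
        core = stripped.lower()              # Match case-insensitively
        if not core.isalpha() or core in vocab:
            corrected.append(word)           # Known words, numbers and symbols stay as they are
            continue
        match = difflib.get_close_matches(core, vocabulary, n=1, cutoff=cutoff)
        corrected.append(match[0] + trailing if match else word)
    return " ".join(corrected)


print(correct_text("The goverment taxed tobaco."))  # The government taxed tobacco.
```

Words with no close match ("taxed" here) are left untouched, which is the safer default for OCR output full of names and codes.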

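If our set of scanned documents is large enough, writing them all into one big folder makes them hard to sort through, and the documents probably already contain some kind of implicit grouping. If we roughly know how many different "types" of documents, or topics, we have, we can use topic modeling to help identify them automatically. This gives us the infrastructure to split the OCR-recognized text into separate folders based on document content. The topic model we will use is LDA (Latent Dirichlet Allocation). To run it, we need to do a bit more preprocessing and organizing of our data, so to keep our scripts from getting long and cluttered, we will assume the scanned documents have already been read and converted to .txt files using the workflow above. The topic model will then read in these .txt files, sort them into however many topics we specify, and place them in the appropriate folders.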
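For reference, this is the standard generative model behind LDA, written with the usual textbook symbols rather than names from the code. Each of the $K$ topics is a distribution $\phi_k$ over words, each document $d$ has a topic mixture $\theta_d$, and every word $w_{d,n}$ is produced by first picking a topic $z_{d,n}$ from $\theta_d$. $K$ corresponds to `num_topics` in the code, and Gensim calls the two Dirichlet priors `alpha` and `eta`. The "topic of a document" used later for sorting is simply the $k$ with the largest inferred $\theta_{d,k}$.

```latex
\begin{aligned}
\phi_k   &\sim \operatorname{Dirichlet}(\beta),            & k &= 1,\dots,K \\
\theta_d &\sim \operatorname{Dirichlet}(\alpha),           & d &= 1,\dots,D \\
z_{d,n}  &\sim \operatorname{Categorical}(\theta_d),       & n &= 1,\dots,N_d \\
w_{d,n}  &\sim \operatorname{Categorical}(\phi_{z_{d,n}})
\end{aligned}
```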

We'll start with a simple function that reads all the output .txt files in a folder into a list of (filename, text) tuples.

```python
import os


def read_and_return(foldername, fileext='.txt'):
    '''
    Read all text files with fileext from foldername, and place them into a list of tuples as
    [(filename, text), ... , (filename, text)]
    '''
    allfiles = [os.path.join(foldername, f) for f in os.listdir(foldername) if f.endswith(fileext)]
    alltext = []
    for filename in allfiles:
        with open(filename, 'r', encoding='utf-8') as f:  # The with-block closes the file
            alltext.append((filename, f.read()))
    return alltext  # [(filename, text), ... (filename, text)]
```

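Next, we need to remove the useless words (those that don't help us tell one document's topic apart from another's). We'll do this in three ways:

- Removing stopwords
- Stripping tags, punctuation, numbers, and repeated whitespace
- TF-IDF weighting (down-weighting words that appear in most documents)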
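The first two steps can be pictured with a rough standard-library imitation. This is not Gensim's implementation: the stopword list is a hypothetical toy, and stopwords are removed after tokenizing rather than first, purely for simplicity.

```python
import re

# Hypothetical, deliberately tiny stopword list; Gensim ships a much larger one.
STOPWORDS = {"the", "a", "an", "of", "to", "and", "in", "is", "for", "on"}


def clean_document(text):
    '''Rough stdlib imitation of stopword removal plus tag/punctuation/number/whitespace stripping'''
    text = text.lower()
    text = re.sub(r"<[^>]+>", " ", text)   # Strip tags such as <b>
    text = re.sub(r"[^\w\s]", " ", text)   # Strip punctuation
    text = re.sub(r"\d+", " ", text)       # Strip numbers
    tokens = text.split()                  # split() also collapses repeated whitespace
    return [t for t in tokens if t not in STOPWORDS]


print(clean_document("<b>Patent</b> No. 4,123 for the   Tobacco company!"))
# ['patent', 'no', 'tobacco', 'company']
```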

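To implement all of this (and our topic model), we'll use the Gensim package. The script below runs the necessary preprocessing steps on a list of texts (the text parts of the tuples returned above) and trains an LDA model.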

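```python
from gensim import corpora, models
from gensim.parsing.preprocessing import remove_stopwords, preprocess_string


def preprocess(document):
    '''Return a list of clean tokens for one document'''
    clean = remove_stopwords(document)
    # preprocess_string's default filters lowercase the text, strip tags, punctuation,
    # repeated whitespace and numbers, remove stopwords and short words, and stem
    clean = preprocess_string(clean)  # Feed in `clean`, not `document`, so step one isn't discarded
    return clean


def run_lda(textlist, num_topics=10, preprocess_docs=True):
    '''Train and return an LDA model (plus its dictionary) against a list of documents'''
    if preprocess_docs:
        doc_text = [preprocess(d) for d in textlist]
    else:
        doc_text = textlist  # Assume the documents are already lists of tokens
    dictionary = corpora.Dictionary(doc_text)                 # Token <-> integer id mapping
    corpus = [dictionary.doc2bow(text) for text in doc_text]  # Bag-of-words vectors
    tfidf = models.tfidfmodel.TfidfModel(corpus)              # TF-IDF weighting
    transformed_tfidf = tfidf[corpus]
    lda = models.ldamulticore.LdaMulticore(transformed_tfidf, num_topics=num_topics, id2word=dictionary)
    return lda, dictionary
```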
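To see why TF-IDF helps separate topics, the sketch below computes the weights by hand: a token's weight is its count in the document times log2(N/df), where N is the number of documents and df is the number of documents containing the token, and each document vector is then scaled to unit length. This is meant to mirror Gensim's `TfidfModel` defaults as I understand them; treat that as an assumption and check it against your Gensim version. A word that appears in every document, like the hypothetical "page" below, gets weight log2(1) = 0 and drops out entirely.

```python
import math
from collections import Counter


def tfidf(docs):
    '''Weight tokens by tf * log2(N / df), drop zero weights, L2-normalise each document'''
    n_docs = len(docs)
    df = Counter(token for doc in docs for token in set(doc))  # Document frequency per token
    weighted = []
    for doc in docs:
        tf = Counter(doc)  # Raw term counts in this document
        vec = {t: c * math.log2(n_docs / df[t]) for t, c in tf.items()}
        vec = {t: w for t, w in vec.items() if w != 0.0}  # Tokens found in every document vanish
        norm = math.sqrt(sum(w * w for w in vec.values()))
        weighted.append({t: w / norm for t, w in vec.items()} if norm else {})
    return weighted


docs = [["page", "patent", "claim"],
        ["page", "tobacco", "smoking"],
        ["page", "letter", "dear"]]
for vec in tfidf(docs):
    print(vec)  # "page" never appears; the two remaining words each get about 0.707
```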

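Once the LDA model is trained, we can use it to assign our training documents (and any future documents that come along) to topics, and then place them in the appropriate folders.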

Applying a trained LDA model to new text strings takes a little work; all of the complexity is wrapped in the function below:

```python
def find_topic(textlist, dictionary, lda):
    '''
    Adapted from
    https://stackoverflow.com/questions/16262016/how-to-predict-the-topic-of-a-new-query-using-a-trained-lda-model-using-gensim
    For each query (document in the test set), create a feature vector exactly the way it
    was done during training, and return the most probable (topic_id, probability) pair.
    '''
    tops = []
    for query in textlist:
        # preprocess() is the function from the LDA training code above; plain tokenizing
        # would skip the stemming used in training, so many query words would not match
        tokens = preprocess(query.strip())
        doc_bow = dictionary.doc2bow(tokens)
        # lda[doc_bow] gives the topic distribution; keep the single most likely topic
        tops.append(max(lda[doc_bow], key=lambda x: x[1]))
    return tops
```
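`find_topic` only works if a query is turned into the same token ids the model saw during training, because `doc2bow` silently ignores tokens the dictionary has never seen. A minimal stand-in for that mapping (hypothetical helpers `build_vocabulary` and `to_bow`, not Gensim's classes) shows the failure mode: if training tokens were stemmed, for example to "smoke", but a query is only tokenized, its "smoking" matches nothing and the document ends up with an empty vector.

```python
from collections import Counter


def build_vocabulary(tokenized_docs):
    '''Assign an integer id to every distinct training token, in first-seen order'''
    vocab = {}
    for doc in tokenized_docs:
        for token in doc:
            vocab.setdefault(token, len(vocab))
    return vocab


def to_bow(tokens, vocab):
    '''Return (id, count) pairs sorted by id; tokens unseen in training are dropped'''
    counts = Counter(vocab[t] for t in tokens if t in vocab)
    return sorted(counts.items())


vocab = build_vocabulary([["patent", "invent"], ["tobacco", "smoke"]])
print(to_bow(["patent", "invention", "invent", "invent"], vocab))  # [(0, 1), (1, 2)]
print(to_bow(["smoking"], vocab))                                  # []
```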

Finally, we need one more function to turn a topic index into a human-readable name (here, the topic's highest-weighted word):

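```python
def topic_label(ldamodel, topicnum):
    '''Name a topic after its single highest-weighted word'''
    # show_topic() returns the topn (word, probability) pairs for one topic id, so this
    # works for any num_topics (show_topics() lists only 10 topics by default)
    return ldamodel.show_topic(topicnum, topn=1)[0][0]
```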
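`topic_label` names a topic after its single heaviest word, so two topics that happen to share a top word would end up in the same folder. One possible refinement, sketched with a hypothetical `label_topics` helper over the `(topic_id, [(word, weight), ...])` pairs that `show_topics(formatted=False)` returns, joins the top few words and adds a numeric suffix when a label repeats.

```python
from collections import Counter


def label_topics(topic_words, topn=2):
    '''
    topic_words maps topic id -> list of (word, weight) pairs.
    Each label joins the topn heaviest words; repeats get a numeric suffix.
    '''
    labels, seen = {}, Counter()
    for topic_id, pairs in sorted(topic_words.items()):
        top = sorted(pairs, key=lambda p: p[1], reverse=True)[:topn]
        label = "_".join(word for word, _ in top)
        seen[label] += 1
        labels[topic_id] = label if seen[label] == 1 else f"{label}_{seen[label]}"
    return labels


example = {0: [("patent", 0.05), ("claim", 0.03), ("device", 0.01)],
           1: [("tobacco", 0.04), ("smoke", 0.03)],
           2: [("patent", 0.06), ("claim", 0.02)]}
print(label_topics(example))  # {0: 'patent_claim', 1: 'tobacco_smoke', 2: 'patent_claim_2'}
```

With a trained Gensim model, something like `label_topics(dict(ldamodel.show_topics(num_topics=-1, formatted=False)))` should produce the input in the expected shape.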

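Now we can put all the functions written above into one script that takes an input folder, an output folder, and a topic count. The script reads all the scanned document images in the input folder, writes their text to .txt files, builds an LDA model to find the high-level topics in the documents, and sorts the output .txt files into folders according to each document's topic.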

```python
##################################################################
# This script takes in an input folder of scanned documents,    #
# reads these documents, separates them into topics, and        #
# outputs raw .txt files into the output folder, separated      #
# by topic                                                       #
##################################################################
import http.client
import io
import json
import os
import shutil
import urllib.parse
from functools import lru_cache

import requests
import tqdm
from PIL import Image
from spellchecker import SpellChecker
from gensim import corpora, models
from gensim.utils import tokenize
from gensim.parsing.preprocessing import remove_stopwords, preprocess_string

INPUT_FOLDER = r'F:/Research/OCR/Outputs/AllDocuments'
OUTPUT_FOLDER = r'F:/Research/OCR/Outputs/AllDocumentsByTopic'
TOPICS = 4


@lru_cache(maxsize=1)
def english_words_set():
    '''Download the 20k-word English list once and reuse it on every call'''
    dict_url = ('https://raw.githubusercontent.com/first20hours/'
                'google-10000-english/master/20k.txt')
    return set(requests.get(dict_url).text.splitlines())


def filter_for_english(text):
    '''Keep only words in the English word list (plus the one-letter word "I")'''
    dict_words = english_words_set()
    # The word list is lowercase, so compare lowercased tokens
    english_words = [w for w in tokenize(text) if w.lower() in dict_words]
    english_words = [w for w in english_words if len(w) > 1 or w.lower() == 'i']
    return ' '.join(english_words)


def preprocess(document):
    '''Remove non-English words, stopwords, tags, punctuation and numbers; return tokens'''
    clean = filter_for_english(document)
    clean = remove_stopwords(clean)
    return preprocess_string(clean)


def read_and_return(foldername, fileext='.txt', delete_after_read=False):
    '''Return [(filename, text), ..., (filename, text)] for every fileext file in foldername'''
    allfiles = [os.path.join(foldername, f) for f in os.listdir(foldername) if f.endswith(fileext)]
    alltext = []
    for filename in allfiles:
        with open(filename, 'r', encoding='utf-8') as f:
            alltext.append((filename, f.read()))
        if delete_after_read:
            os.remove(filename)
    return alltext


def image_to_text(imglist):
    '''Take in a list of PIL images and return a list of extracted text'''
    headers = {  # Request headers
        'Content-Type': 'application/octet-stream',
        'Ocp-Apim-Subscription-Key': 'YOUR_KEY_HERE',  # Never publish a real key
    }
    params = urllib.parse.urlencode({'language': 'en', 'detectOrientation': 'true'})
    outtext = []
    for ocr_image in tqdm.tqdm(imglist, total=len(imglist)):
        curr_text = []  # Reset per image so a failed request yields "" rather than stale text
        if ocr_image.height < 50 or ocr_image.width < 50:  # Too small for the OCR API
            outtext.append('')
            continue
        img_bytes = io.BytesIO()
        ocr_image.save(img_bytes, format='PNG')
        try:
            conn = http.client.HTTPSConnection('westus.api.cognitive.microsoft.com')
            conn.request("POST", "/vision/v1.0/ocr?%s" % params, img_bytes.getvalue(), headers)
            data = json.loads(conn.getresponse().read().decode("utf-8"))
            conn.close()
            for r in data['regions']:
                for l in r['lines']:
                    for w in l['words']:
                        curr_text.append(str(w['text']))
        except Exception as e:  # Generic exceptions have no errno/strerror, so print e itself
            print("Could not process image:", e)
        outtext.append(' '.join(curr_text))
    return outtext


def run_lda(textlist, num_topics=10, preprocess_docs=True):
    '''Train and return an LDA model (plus its dictionary) against a list of documents'''
    doc_text = [preprocess(d) for d in textlist] if preprocess_docs else textlist
    dictionary = corpora.Dictionary(doc_text)
    corpus = [dictionary.doc2bow(text) for text in doc_text]
    tfidf = models.tfidfmodel.TfidfModel(corpus)
    lda = models.ldamulticore.LdaMulticore(tfidf[corpus], num_topics=num_topics, id2word=dictionary)
    return lda, dictionary


def find_topic(textlist, dictionary, lda):
    '''
    Return the most probable (topic_id, probability) for each query, preprocessing it
    exactly as during training. Adapted from
    https://stackoverflow.com/questions/16262016/how-to-predict-the-topic-of-a-new-query-using-a-trained-lda-model-using-gensim
    '''
    tops = []
    for query in textlist:
        doc_bow = dictionary.doc2bow(preprocess(query.strip()))
        tops.append(max(lda[doc_bow], key=lambda x: x[1]))
    return tops


def topic_label(ldamodel, topicnum):
    '''Name a topic after its single highest-weighted word'''
    return ldamodel.show_topic(topicnum, topn=1)[0][0]


if __name__ == '__main__':
    print("Reading scanned documents")
    # First, read in all the scanned document images as PIL images
    # (filter the paths first so paths and images stay aligned)
    scanned_docs_path = [os.path.join(INPUT_FOLDER, p) for p in os.listdir(INPUT_FOLDER) if p.endswith('.png')]
    scanned_docs = [Image.open(p) for p in scanned_docs_path]

    # Second, use the Microsoft CV API to extract text from these images with OCR
    scanned_docs_text = image_to_text(scanned_docs)

    print("Post-processing extracted text")
    # Third, fix misspellings that might have come from bad OCR readings
    spell = SpellChecker()
    for i, doc_text in enumerate(scanned_docs_text):
        words = doc_text.split()
        misspelled = spell.unknown(words)  # Expects a list of words; returns them lowercased
        for j, w in enumerate(words):
            if w.lower() in misspelled:
                words[j] = spell.correction(w) or w  # correction() may return None
        scanned_docs_text[i] = ' '.join(words)

    print("Writing read text into files")
    # Fourth, write each text to a .txt file named after its input image
    os.makedirs(OUTPUT_FOLDER, exist_ok=True)
    for path, text in zip(scanned_docs_path, scanned_docs_text):
        name = os.path.splitext(os.path.basename(path))[0] + ".txt"
        with open(os.path.join(OUTPUT_FOLDER, name), "wt", encoding="utf-8") as text_file:
            text_file.write(filter_for_english(text))

    # Fifth, read the .txt files back and train the LDA model (preprocessing happens in run_lda)
    print("Reading files")
    texts = read_and_return(OUTPUT_FOLDER)
    print("Building LDA topic model")
    ldamodel, dictionary = run_lda([t[1] for t in texts], num_topics=TOPICS)

    # Sixth, name the top topic of each document: [(filename, topic), ..., (filename, topic)]
    print("Extracting Topics")
    topics = []
    for filename, text in texts:
        topnum = find_topic([text], dictionary, ldamodel)[0][0]
        topics.append((filename, topic_label(ldamodel, topnum)))

    # Finally, create one folder per topic and move each file into it
    print("Moving Documents into Topic Folders")
    for filename, topic in topics:
        dest_folder = os.path.join(OUTPUT_FOLDER, topic)
        os.makedirs(dest_folder, exist_ok=True)
        shutil.move(filename, os.path.join(dest_folder, os.path.basename(filename)))
    print("Done")
```
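The last stage of the script boils down to "make a folder per topic, then move each file into it". A small standalone version with a dry-run switch (a hypothetical `sort_into_topic_folders`, standard library only) makes it easy to preview the planned moves on a scratch directory before touching real OCR output. `os.makedirs(..., exist_ok=True)` keeps it safe when several files share a topic or a folder already exists.

```python
import os
import shutil
import tempfile


def sort_into_topic_folders(assignments, output_folder, dry_run=False):
    '''
    assignments is a list of (file_path, topic_label) pairs. Each file goes to
    output_folder/<topic_label>/<basename>. Returns the planned (src, dest) moves;
    with dry_run=True nothing on disk changes.
    '''
    planned = []
    for path, topic in assignments:
        dest = os.path.join(output_folder, topic, os.path.basename(path))
        planned.append((path, dest))
        if not dry_run:
            os.makedirs(os.path.dirname(dest), exist_ok=True)
            shutil.move(path, dest)
    return planned


if __name__ == "__main__":
    # Demo on a throwaway directory
    with tempfile.TemporaryDirectory() as out:
        for name in ("a.txt", "b.txt", "c.txt"):
            with open(os.path.join(out, name), "w") as f:
                f.write(name)
        plan = [(os.path.join(out, "a.txt"), "patent"),
                (os.path.join(out, "b.txt"), "tobacco"),
                (os.path.join(out, "c.txt"), "patent")]
        sort_into_topic_folders(plan, out)
        print(sorted(os.listdir(os.path.join(out, "patent"))))  # ['a.txt', 'c.txt']
```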

Code for this article on GitHub:

https://github.com/ShairozS/Scan2Topic
