python爬虫开发:多线程抓取猫眼电影TOP100实例

使用Python爬虫库requests多线程抓取猫眼电影TOP100思路:

查看网页源代码

抓取单页内容

正则表达式提取信息

猫眼TOP100所有信息写入文件

多线程抓取

运行平台:windows

Python版本:Python 3.7.

IDE:Sublime Text

浏览器:Chrome浏览器

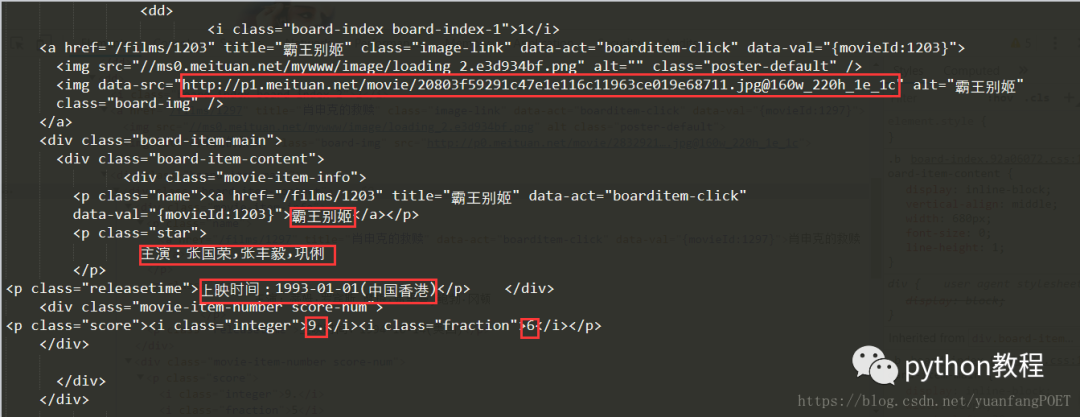

1.查看猫眼电影TOP100网页原代码

按F12查看网页源代码发现每一个电影的信息都在“<dd></dd>”标签之中。

点开之后,信息如下:

2.抓取单页内容

在浏览器中打开猫眼电影网站,点击“榜单”,再点击“TOP100榜”如下图:

接下来通过以下代码获取网页源代码:

#-*-coding:utf-8-*-import requestsfrom requests.exceptions import RequestException#猫眼电影网站有反爬虫措施,设置headers后可以爬取headers = {'Content-Type': 'text/plain; charset=UTF-8','Origin':'https://maoyan.com','Referer':'https://maoyan.com/board/4','User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36'}#爬取网页源代码def get_one_page(url,headers):try:response =requests.get(url,headers =headers)if response.status_code == 200:return response.textreturn Noneexcept RequestsException:return Nonedef main():url = "https://maoyan.com/board/4"html = get_one_page(url,headers)print(html)if __name__ == '__main__':main()

执行结果如下:

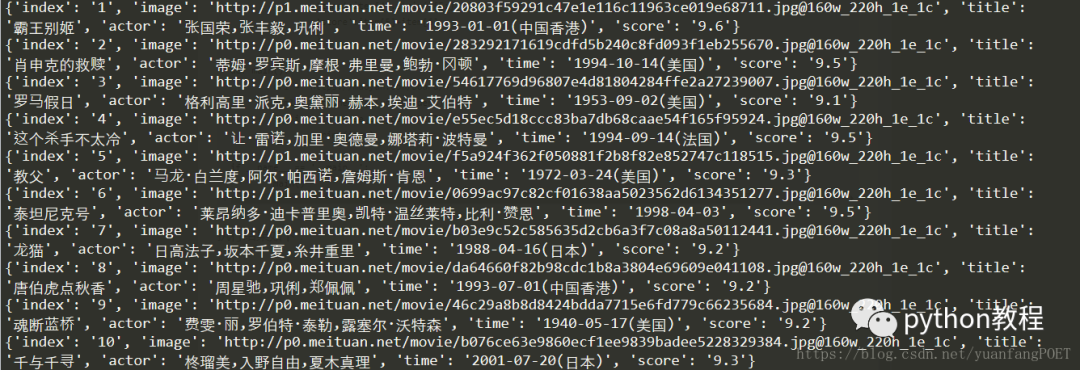

3.正则表达式提取信息

上图标示信息即为要提取的信息,代码实现如下:

#-*-coding:utf-8-*-import requestsimport refrom requests.exceptions import RequestException#猫眼电影网站有反爬虫措施,设置headers后可以爬取headers = {'Content-Type': 'text/plain; charset=UTF-8','Origin':'https://maoyan.com','Referer':'https://maoyan.com/board/4','User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36'}#爬取网页源代码def get_one_page(url,headers):try:response =requests.get(url,headers =headers)if response.status_code == 200:return response.textreturn Noneexcept RequestsException:return None#正则表达式提取信息def parse_one_page(html):pattern = re.compile('<dd>.*?board-index.*?>(\d+)</i>.*?src="(.*?)".*?name"><a'+'.*?>(.*?)</a>.*?star">(.*?)</p>.*?releasetime">(.*?)</p>.*?integer">(.*?)</i>.*?fraction">(.*?)</i>.*?</dd>',re.S)items = re.findall(pattern,html)for item in items:yield{'index':item[0],'image':item[1],'title':item[2],'actor':item[3].strip()[3:],'time':item[4].strip()[5:],'score':item[5]+item[6]}def main():url = "https://maoyan.com/board/4"html = get_one_page(url,headers)for item in parse_one_page(html):print(item)if __name__ == '__main__':main()

执行结果如下:

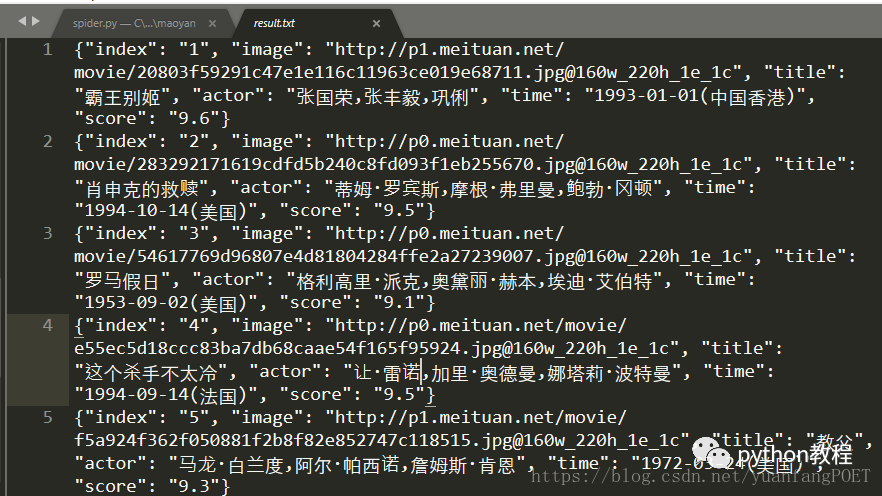

4.猫眼TOP100所有信息写入文件

上边代码实现单页的信息抓取,要想爬取100个电影的信息,先观察每一页url的变化,点开每一页我们会发现url进行变化,原url后面多了‘?offset=0',且offset的值变化从0,10,20,变化如下:

代码实现如下:

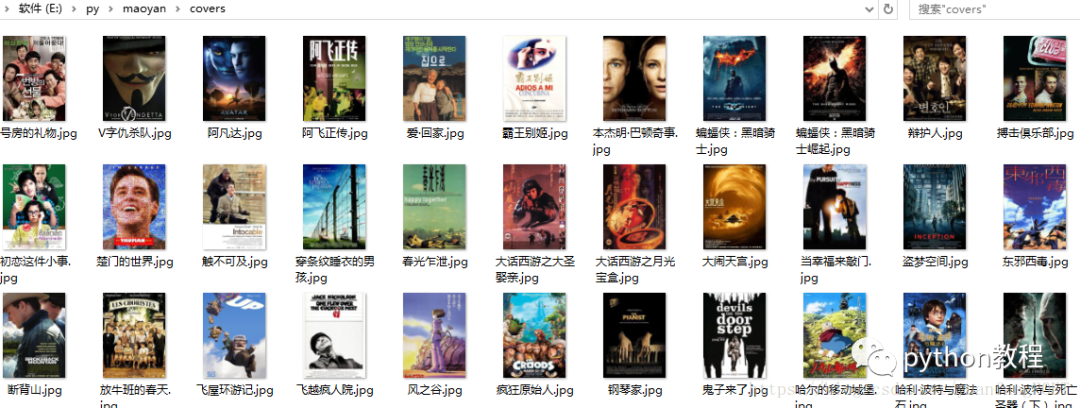

#-*-coding:utf-8-*-import requestsimport reimport jsonimport osfrom requests.exceptions import RequestException#猫眼电影网站有反爬虫措施,设置headers后可以爬取headers = {'Content-Type': 'text/plain; charset=UTF-8','Origin':'https://maoyan.com','Referer':'https://maoyan.com/board/4','User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36'}#爬取网页源代码def get_one_page(url,headers):try:response =requests.get(url,headers =headers)if response.status_code == 200:return response.textreturn Noneexcept RequestsException:return None#正则表达式提取信息def parse_one_page(html):pattern = re.compile('<dd>.*?board-index.*?>(\d+)</i>.*?src="(.*?)".*?name"><a'+'.*?>(.*?)</a>.*?star">(.*?)</p>.*?releasetime">(.*?)</p>.*?integer">(.*?)</i>.*?fraction">(.*?)</i>.*?</dd>',re.S)items = re.findall(pattern,html)for item in items:yield{'index':item[0],'image':item[1],'title':item[2],'actor':item[3].strip()[3:],'time':item[4].strip()[5:],'score':item[5]+item[6]}#猫眼TOP100所有信息写入文件def write_to_file(content):#encoding ='utf-8',ensure_ascii =False,使写入文件的代码显示为中文with open('result.txt','a',encoding ='utf-8') as f:f.write(json.dumps(content,ensure_ascii =False)+'\n')f.close()#下载电影封面def save_image_file(url,path):jd = requests.get(url)if jd.status_code == 200:with open(path,'wb') as f:f.write(jd.content)f.close()def main(offset):url = "https://maoyan.com/board/4?offset="+str(offset)html = get_one_page(url,headers)if not os.path.exists('covers'):os.mkdir('covers')for item in parse_one_page(html):print(item)write_to_file(item)save_image_file(item['image'],'covers/'+item['title']+'.jpg')if __name__ == '__main__':#对每一页信息进行爬取for i in range(10):main(i*10)

爬取结果如下:

原文链接:https://blog.csdn.net/yuanfangPOET/article/details/81006521

*声明:本文于网络整理,版权归原作者所有,如来源信息有误或侵犯权益,请联系我们删除或授权

评论