[Data Competitions] A Kaggle Power Trick: An NN Training Technique on Par with Dropout!

Swap Noise: A Powerful NN Trick You Won't Find in Papers

01

Background

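This article introduces a technique commonly known as Swap Noise. You will not find it in academic papers, yet it works remarkably well and has helped win prizes in a number of Kaggle competitions. It was proposed several years ago by Kaggle Grandmaster Michael Jahrer. For why it works, see the Kaggle material listed in the references. As for how well it works, the case study below compares the same neural network trained with and without it.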

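Swap noise corrupts an input value by replacing it with the value of the same feature taken from another, randomly chosen row. There are three common variants: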

1. Columnwise swap noise: only entire columns are noised, so that about 20% of the values in the batch are affected (see the animation below).

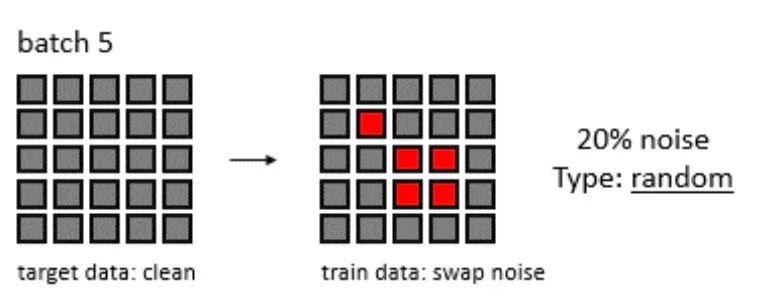

2. Randomized swap noise: 20% of the values are noised at random positions anywhere in the batch (see the animation below).

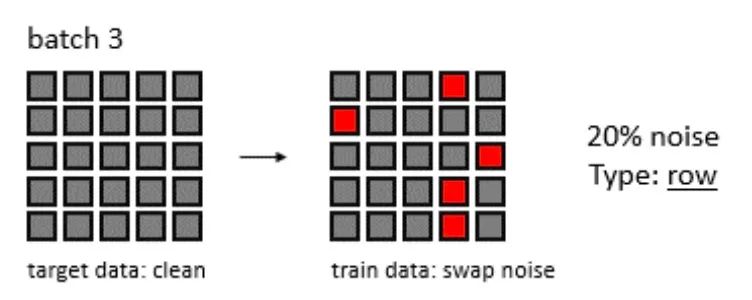

3. Rowwise swap noise: as the name suggests, 20% of the data is replaced in such a way that every row receives a certain amount of noise.
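All three variants share the same swap step and differ only in which cells are corrupted. The following is a minimal NumPy sketch under our own assumptions: the helper names and the fixed 20% rate are illustrative and do not come from the original animations, and a donor row is drawn independently for every corrupted cell, with the replacement value taken from that row in the same column.

```python
import numpy as np

def _swap(X, mask, rng):
    # For every cell draw a random donor row; the value comes from the SAME column.
    n_rows, n_cols = X.shape
    donor_rows = rng.integers(0, n_rows, size=X.shape)
    donor_vals = X[donor_rows, np.arange(n_cols)]
    # Only masked cells are replaced (a donor may occasionally be the row itself).
    return np.where(mask, donor_vals, X)

def columnwise_swap_noise(X, p=0.2, seed=None):
    """Noise whole columns: about p of the columns are swapped in every row."""
    rng = np.random.default_rng(seed)
    n_cols = X.shape[1]
    # At least one column is noised even when p * n_cols rounds to 0.
    cols = rng.choice(n_cols, size=max(1, round(p * n_cols)), replace=False)
    mask = np.zeros(X.shape, dtype=bool)
    mask[:, cols] = True
    return _swap(X, mask, rng), mask

def randomized_swap_noise(X, p=0.2, seed=None):
    """Each cell is independently noised with probability p."""
    rng = np.random.default_rng(seed)
    mask = rng.random(X.shape) < p
    return _swap(X, mask, rng), mask

def rowwise_swap_noise(X, p=0.2, seed=None):
    """Every row gets the same number of noisy cells (about p of its columns)."""
    rng = np.random.default_rng(seed)
    k = max(1, round(p * X.shape[1]))
    # argsort of uniform noise gives an independent random permutation per row;
    # marking the positions that hold 0..k-1 selects exactly k columns per row.
    perm = rng.random(X.shape).argsort(axis=1)
    mask = perm < k
    return _swap(X, mask, rng), mask
```

For example, `rowwise_swap_noise(X, p=0.2, seed=0)` on a batch with 10 features corrupts exactly 2 cells in every row. Because the donor row is drawn uniformly, it sometimes coincides with the row itself and leaves that cell unchanged; excluding self-swaps would need an extra resampling step.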

02

Case Study

1. Import libraries

1.1 Import the packages used

import numpy as np
import pandas as pd
import seaborn as sns
import tensorflow as tf
import xgboost as xgb
from lightgbm import LGBMRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold
from tqdm import tqdm

def RMSE(y_true, y_pred):
    # Root mean squared error over the batch, used as the Keras loss below
    return tf.sqrt(tf.reduce_mean(tf.square(y_true - y_pred)))

1.2 Load the data

train = pd.read_csv('./data/train.csv')

test = pd.read_csv('./data/test.csv')

2. Data preprocessing

2.1 Concatenate train and test

train_test = pd.concat([train,test],axis=0,ignore_index=True)

train_test.head()

|  | id | cont1 | cont2 | cont3 | cont4 | cont5 | cont6 | cont7 | cont8 | cont9 | cont10 | cont11 | cont12 | cont13 | cont14 | target |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 0.670390 | 0.811300 | 0.643968 | 0.291791 | 0.284117 | 0.855953 | 0.890700 | 0.285542 | 0.558245 | 0.779418 | 0.921832 | 0.866772 | 0.878733 | 0.305411 | 7.243043 |
| 1 | 3 | 0.388053 | 0.621104 | 0.686102 | 0.501149 | 0.643790 | 0.449805 | 0.510824 | 0.580748 | 0.418335 | 0.432632 | 0.439872 | 0.434971 | 0.369957 | 0.369484 | 8.203331 |
| 2 | 4 | 0.834950 | 0.227436 | 0.301584 | 0.293408 | 0.606839 | 0.829175 | 0.506143 | 0.558771 | 0.587603 | 0.823312 | 0.567007 | 0.677708 | 0.882938 | 0.303047 | 7.776091 |
| 3 | 5 | 0.820708 | 0.160155 | 0.546887 | 0.726104 | 0.282444 | 0.785108 | 0.752758 | 0.823267 | 0.574466 | 0.580843 | 0.769594 | 0.818143 | 0.914281 | 0.279528 | 6.957716 |
| 4 | 8 | 0.935278 | 0.421235 | 0.303801 | 0.880214 | 0.665610 | 0.830131 | 0.487113 | 0.604157 | 0.874658 | 0.863427 | 0.983575 | 0.900464 | 0.935918 | 0.435772 | 7.951046 |

2.2 GaussRank scaling for neural-network preprocessing

import numpy as np
from joblib import Parallel, delayed
from scipy.interpolate import interp1d
from scipy.special import erf, erfinv
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.utils.validation import FLOAT_DTYPES, check_array, check_is_fitted


class GaussRankScaler(BaseEstimator, TransformerMixin):
    """Transform features by scaling each feature to a normal distribution.

    Parameters
    ----------
    epsilon : float, optional, default 1e-4
        A small amount added to the lower bound or subtracted
        from the upper bound. This value prevents infinite numbers
        from occurring when applying the inverse error function.
    copy : boolean, optional, default True
        If False, try to avoid a copy and do inplace scaling instead.
        This is not guaranteed to always work inplace; e.g. if the data is
        not a NumPy array, a copy may still be returned.
    n_jobs : int or None, optional, default None
        Number of jobs to run in parallel.
        ``None`` means 1 and ``-1`` means using all processors.
    interp_kind : str or int, optional, default 'linear'
        Specifies the kind of interpolation as a string
        ('linear', 'nearest', 'zero', 'slinear', 'quadratic', 'cubic',
        'previous', 'next', where 'zero', 'slinear', 'quadratic' and 'cubic'
        refer to a spline interpolation of zeroth, first, second or third
        order; 'previous' and 'next' simply return the previous or next value
        of the point) or as an integer specifying the order of the spline
        interpolator to use.
    interp_copy : bool, optional, default False
        If True, the interpolation function makes internal copies of x and y.
        If False, references to `x` and `y` are used.

    Attributes
    ----------
    interp_func_ : list
        The interpolation function for each feature in the training set.
    """

    def __init__(self, epsilon=1e-4, copy=True, n_jobs=None, interp_kind='linear', interp_copy=False):
        self.epsilon = epsilon
        self.copy = copy
        self.interp_kind = interp_kind
        self.interp_copy = interp_copy
        self.fill_value = 'extrapolate'
        self.n_jobs = n_jobs

    def fit(self, X, y=None):
        """Fit interpolation function to link rank with original data for future scaling.

        Parameters
        ----------
        X : array-like, shape (n_samples, n_features)
            The data used to fit interpolation function for later scaling along the features axis.
        y
            Ignored
        """
        X = check_array(X, copy=self.copy, estimator=self, dtype=FLOAT_DTYPES, force_all_finite=True)
        # One interpolation function per feature (column)
        self.interp_func_ = Parallel(n_jobs=self.n_jobs)(delayed(self._fit)(x) for x in X.T)
        return self

    def _fit(self, x):
        # interp1d needs unique x values
        x = self.drop_duplicates(x)
        # Double argsort turns values into ranks 0..n-1
        rank = np.argsort(np.argsort(x))
        bound = 1.0 - self.epsilon
        factor = np.max(rank) / 2.0 * bound
        # Map ranks onto roughly [-bound, bound]; clipping keeps erfinv finite
        scaled_rank = np.clip(rank / factor - bound, -bound, bound)
        return interp1d(
            x, scaled_rank, kind=self.interp_kind, copy=self.interp_copy, fill_value=self.fill_value)

    def transform(self, X, copy=None):
        """Scale the data with the Gauss Rank algorithm.

        Parameters
        ----------
        X : array-like, shape (n_samples, n_features)
            The data used to scale along the features axis.
        copy : bool, optional (default: None)
            Copy the input X or not.
        """
        check_is_fitted(self, 'interp_func_')
        copy = copy if copy is not None else self.copy
        X = check_array(X, copy=copy, estimator=self, dtype=FLOAT_DTYPES, force_all_finite=True)
        X = np.array(Parallel(n_jobs=self.n_jobs)(delayed(self._transform)(i, x) for i, x in enumerate(X.T))).T
        return X

    def _transform(self, i, x):
        # Value -> interpolated scaled rank -> inverse error function
        return erfinv(self.interp_func_[i](x))

    def inverse_transform(self, X, copy=None):
        """Scale back the data to the original representation.

        Parameters
        ----------
        X : array-like, shape [n_samples, n_features]
            The data used to scale along the features axis.
        copy : bool, optional (default: None)
            Copy the input X or not.
        """
        check_is_fitted(self, 'interp_func_')
        copy = copy if copy is not None else self.copy
        X = check_array(X, copy=copy, estimator=self, dtype=FLOAT_DTYPES, force_all_finite=True)
        X = np.array(Parallel(n_jobs=self.n_jobs)(delayed(self._inverse_transform)(i, x) for i, x in enumerate(X.T))).T
        return X

    def _inverse_transform(self, i, x):
        # Swap the interpolation axes: scaled rank -> original value
        inv_interp_func = interp1d(self.interp_func_[i].y, self.interp_func_[i].x, kind=self.interp_kind,
                                   copy=self.interp_copy, fill_value=self.fill_value)
        return inv_interp_func(erf(x))

    @staticmethod
    def drop_duplicates(x):
        # Keep the first occurrence of each distinct value
        is_unique = np.zeros_like(x, dtype=bool)
        is_unique[np.unique(x, return_index=True)[1]] = True
        return x[is_unique]
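To make the core of RankGauss concrete without the interpolation machinery, here is a minimal sketch; the helper `rank_gauss` is hypothetical and not part of the original code. It ranks a 1-D array, rescales the ranks linearly onto [-(1 - epsilon), 1 - epsilon], and applies `erfinv`. Unlike `GaussRankScaler`, it has no interpolation function, so it cannot transform unseen values. Note that `erfinv` of a variable uniform on (-1, 1) is normal with standard deviation 1/√2 rather than 1; the class above has the same property, since it also applies `erfinv` without a √2 factor.

```python
import numpy as np
from scipy.special import erfinv
from scipy.stats import rankdata

def rank_gauss(x, epsilon=1e-4):
    """Map a 1-D array to an approximately Gaussian shape using only its ranks."""
    x = np.asarray(x, dtype=float)
    r = rankdata(x, method="average") - 1        # ranks in [0, n-1], ties averaged
    bound = 1.0 - epsilon
    u = r / (len(x) - 1) * 2 * bound - bound      # linear rescale to [-bound, bound]
    return erfinv(u)                              # erfinv maps (-1, 1) to the real line

rng = np.random.default_rng(0)
x = rng.exponential(size=10001)                   # heavily skewed input
z = rank_gauss(x)                                 # symmetric, bell-shaped output
```

Because only the ranks matter, any strictly monotonic transform of `x` produces the same output, which is why RankGauss is robust to skew and outliers.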

2.3 Apply RankGauss

feature_names = ['cont1', 'cont2', 'cont3', 'cont4', 'cont5', 'cont6', 'cont7',
                 'cont8', 'cont9', 'cont10', 'cont11', 'cont12', 'cont13', 'cont14']
scaler_linear = GaussRankScaler(interp_kind='linear')
for c in feature_names:
    # Fit and transform each feature separately on train + test; ravel() turns the (n, 1) output into a column
    train_test[c + '_linear_grank'] = scaler_linear.fit_transform(train_test[c].values.reshape(-1, 1)).ravel()
gaussian_linear_feature_names = [c + '_linear_grank' for c in feature_names]

3. Model setup

3.1 Imports for the NN model

import tensorflow as tf
import tensorflow.keras.backend as K
from sklearn.model_selection import KFold, StratifiedKFold
from tensorflow.keras import regularizers
from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau
from tensorflow.keras.layers import Dense, Dropout, Input

3.2 Training loss

def RMSE_Loss(y_true, y_pred):
    # Same formula as RMSE above: square root of the batch mean squared error
    return tf.sqrt(tf.reduce_mean(tf.square(y_true - y_pred)))
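Because this loss takes a square root inside each batch, the `loss` and `val_loss` values that Keras logs are, to our understanding of TF 2.x Keras, averages of per-batch RMSEs rather than the RMSE over the whole set. By Jensen's inequality the batch average can only be lower than or equal to the global RMSE when batches have equal size. The NumPy sketch below (synthetic data, hypothetical helper names) shows that the gap is small at batch size 128, so the logged numbers remain a fair basis for comparing the two runs.

```python
import numpy as np

def rmse(y_true, y_pred):
    """RMSE over all samples (same formula as RMSE_Loss, in NumPy)."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def batch_averaged_rmse(y_true, y_pred, batch_size=128):
    """Mean of per-batch RMSEs, mimicking how a per-batch loss is averaged over an epoch."""
    scores = [rmse(y_true[i:i + batch_size], y_pred[i:i + batch_size])
              for i in range(0, len(y_true), batch_size)]
    return float(np.mean(scores))

rng = np.random.default_rng(0)
y_true = rng.normal(7.9, 0.7, size=128 * 50)          # synthetic targets, 50 full batches
y_pred = y_true + rng.normal(0, 0.7, size=y_true.shape)
print(rmse(y_true, y_pred), batch_averaged_rmse(y_true, y_pred))
```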

3.3 Train/validation split

# Split the concatenated frame back into train and test
tr = train_test.iloc[:train.shape[0], :].copy()
te = train_test.iloc[train.shape[0]:, :].copy()

# Contiguous folds (no shuffling); random_state has no effect when shuffle=False, so it is omitted
kf = KFold(n_splits=5, shuffle=False)
for trn_idx, test_idx in kf.split(tr, tr['target']):
    X_tr_dnn_linear_gaussian, X_val_dnn_linear_gaussian = (tr[gaussian_linear_feature_names].iloc[trn_idx],
                                                           tr[gaussian_linear_feature_names].iloc[test_idx])
    y_tr, y_val = tr['target'].iloc[trn_idx], tr['target'].iloc[test_idx]
    # Only the first fold is used as the hold-out set
    break


4. Model comparison

4.1 Custom generator + swap noise

# Custom training data generator
import numpy as np
from tensorflow.keras.utils import Sequence


class DataGenerator(Sequence):
    """Generates batches for Keras, optionally applying swap noise to the features."""

    def __init__(self, IDs, features, labels, batch_size=128, shuffle_prob=0.15, shuffle_type=1, shuffle=True):
        """Initialization.

        shuffle_type: 1 = swap values within the current batch,
                      2 = swap values with rows drawn from the whole feature matrix,
                      anything else = no noise (used for validation).
        shuffle_prob: fraction of the batch rows whose value is swapped, per feature.
        """
        self.batch_size = batch_size
        self.list_IDs = IDs
        self.shuffle = shuffle
        self.features = features
        self.feature_len = features.shape[0]  # number of rows in the full feature matrix
        self.shuffle_prob = shuffle_prob
        self.labels = labels
        self.shuffle_type = shuffle_type
        self.on_epoch_end()

    def __len__(self):
        """Number of batches per epoch (an incomplete last batch is dropped)."""
        return int(np.floor(len(self.list_IDs) / self.batch_size))

    def __getitem__(self, index):
        """Generate one batch of data."""
        # Positions of the samples in this batch
        indexes = self.indexes[index * self.batch_size:(index + 1) * self.batch_size]
        # Map them to sample IDs
        list_IDs_temp = [self.list_IDs[k] for k in indexes]
        X, y = self.__data_generation(list_IDs_temp)
        return X, y

    def on_epoch_end(self):
        """Reshuffle the sample order after each epoch."""
        self.indexes = np.arange(len(self.list_IDs))
        if self.shuffle:
            np.random.shuffle(self.indexes)

    def __row_swap_noise(self, X1, prob=0.15):
        """Per feature, replace `prob` of the batch values with values from random rows of the full data."""
        batch_num_value = len(X1)
        num_value = self.feature_len
        num_swap = int(batch_num_value * prob)
        fea_num = X1.shape[1]
        for i in range(fea_num):
            swap_idx_batch = np.random.choice(batch_num_value, num_swap, replace=False)
            swap_idx_all = np.random.choice(num_value, num_swap, replace=False)
            X1[swap_idx_batch, i] = self.features[swap_idx_all, i]
        return X1

    def __batch_row_swap_noise(self, X1, X2, prob=0.15):
        """Per feature, replace `prob` of the values with the same feature from X2 (a row-shuffled copy of the batch)."""
        num_value = len(X1)
        num_swap = int(num_value * prob)
        fea_num = X1.shape[1]
        for i in range(fea_num):
            swap_idx = np.random.choice(num_value, num_swap, replace=False)
            X1[swap_idx, i] = X2[swap_idx, i]
        return X1

    def __data_generation(self, list_IDs_temp):
        """Generate a batch of batch_size samples."""
        ID1 = list_IDs_temp
        # Original (clean) features
        X1 = self.features[ID1]
        if self.shuffle_type == 1:
            # Swap within the batch: X2 is the same batch in a different row order
            ID2 = ID1.copy()
            np.random.shuffle(ID2)
            X2 = self.features[ID2]
            X = self.__batch_row_swap_noise(X1.copy(), X2.copy(), prob=self.shuffle_prob)
        elif self.shuffle_type == 2:
            # Swap with rows sampled from the whole training matrix
            X = self.__row_swap_noise(X1.copy(), prob=self.shuffle_prob)
        else:
            # No noise (validation / baseline)
            X = X1
        # Labels are never corrupted
        y = self.labels[ID1]
        return X, y
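The per-feature Python loop in `__batch_row_swap_noise` can also be written without a loop. The sketch below is a hypothetical helper, not part of the original code; under our reading it samples the same distribution as the loop: one row-shuffled copy of the batch, and exactly `int(len(X) * prob)` corrupted rows chosen independently for each column.

```python
import numpy as np

def batch_swap_noise(X, prob=0.15, rng=None):
    """Vectorized in-batch swap noise (hypothetical helper).

    For every column, exactly int(len(X) * prob) rows are picked and their value is
    replaced by the value of the same column from a row-shuffled copy of the batch.
    """
    rng = np.random.default_rng(rng)
    n_rows, n_cols = X.shape
    num_swap = int(n_rows * prob)
    # One shuffled copy of the batch, like X2 in __data_generation
    X2 = X[rng.permutation(n_rows)]
    # argsort of uniform noise along axis 0 gives an independent row permutation per column;
    # the positions holding 0..num_swap-1 mark exactly num_swap rows in each column
    mask = rng.random((n_rows, n_cols)).argsort(axis=0) < num_swap
    return np.where(mask, X2, X), mask
```

Inside the generator this would replace the loop with something like `X, _ = batch_swap_noise(X1, self.shuffle_prob)`. One design difference: it draws from its own `Generator` instead of the global `np.random` state used by the original class.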

4.2 MLP (sigmoid output) + in-batch swap noise: best validation RMSE 0.7101

class MLP_Model(tf.keras.Model):
    def __init__(self):
        super(MLP_Model, self).__init__()
        self.dense1 = Dense(1024, activation='relu')
        self.drop1 = Dropout(0.25)
        self.dense2 = Dense(512, activation='relu')
        self.drop2 = Dropout(0.25)
        # Sigmoid output in (0, 1), rescaled to the target range in call()
        self.dense_out = Dense(1, activation='sigmoid')

    def call(self, inputs):
        # Target range (hard-coded)
        min_target = 0
        max_target = 10.26757
        x1 = self.dense1(inputs)
        x1 = self.drop1(x1)
        x2 = self.dense2(x1)
        x2 = self.drop2(x2)
        outputs = self.dense_out(x2)
        # Map (0, 1) onto (min_target, max_target)
        outputs = outputs * (max_target - min_target) + min_target
        return outputs

# Training batches get in-batch swap noise (shuffle_type=1); validation batches stay clean
training_generator = DataGenerator(IDs=list(range(X_tr_dnn_linear_gaussian.shape[0])),
                                   features=X_tr_dnn_linear_gaussian.values,
                                   labels=y_tr.values, shuffle_type=1, shuffle=True)
validation_generator = DataGenerator(IDs=list(range(X_val_dnn_linear_gaussian.shape[0])),
                                     features=X_val_dnn_linear_gaussian.values,
                                     labels=y_val.values, shuffle_type=0, shuffle=False)

K.clear_session()
model_weights = './models/model_swapnoise.h5'
model_mlp = MLP_Model()
checkpoint = ModelCheckpoint(model_weights, monitor='val_loss', verbose=0, save_best_only=True, mode='min',
                             save_weights_only=True)
plateau = ReduceLROnPlateau(monitor='val_loss', factor=0.5, patience=10, verbose=1, min_delta=1e-4, mode='min')
early_stopping = EarlyStopping(monitor='val_loss', patience=20)

def RMSE(y_true, y_pred):
    return tf.sqrt(tf.reduce_mean(tf.square(y_true - y_pred)))

adam = tf.keras.optimizers.Adam(learning_rate=1e-3 * 2)
model_mlp.compile(optimizer=adam, loss=RMSE)
# fit_generator is deprecated in TF 2.x; model.fit accepts a Sequence directly
history = model_mlp.fit(training_generator,
                        validation_data=validation_generator,
                        epochs=2000,
                        use_multiprocessing=True,
                        workers=10,
                        callbacks=[plateau, checkpoint, early_stopping],
                        verbose=2)

Epoch 1/2000

WARNING:tensorflow:Entity > could not be transformed and will be executed as-is. Please report this to the AutoGraph team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output. Cause: Bad argument number for Name: 3, expecting 4


1875/1875 - 16s - loss: 0.7549 - val_loss: 0.7238

Epoch 2/2000

1875/1875 - 16s - loss: 0.7314 - val_loss: 0.7275

Epoch 3/2000

1875/1875 - 16s - loss: 0.7261 - val_loss: 0.7268

Epoch 4/2000

1875/1875 - 16s - loss: 0.7232 - val_loss: 0.7200

Epoch 5/2000

1875/1875 - 16s - loss: 0.7227 - val_loss: 0.7210

Epoch 6/2000

1875/1875 - 16s - loss: 0.7226 - val_loss: 0.7203

Epoch 7/2000

1875/1875 - 16s - loss: 0.7221 - val_loss: 0.7194

Epoch 8/2000

1875/1875 - 16s - loss: 0.7221 - val_loss: 0.7190

Epoch 9/2000

1875/1875 - 16s - loss: 0.7218 - val_loss: 0.7188

Epoch 10/2000

1875/1875 - 16s - loss: 0.7218 - val_loss: 0.7189

Epoch 11/2000

1875/1875 - 16s - loss: 0.7215 - val_loss: 0.7213

Epoch 12/2000

1875/1875 - 16s - loss: 0.7214 - val_loss: 0.7182

Epoch 13/2000

1875/1875 - 16s - loss: 0.7213 - val_loss: 0.7199

Epoch 14/2000

1875/1875 - 16s - loss: 0.7217 - val_loss: 0.7179

Epoch 15/2000

1875/1875 - 16s - loss: 0.7214 - val_loss: 0.7194

Epoch 16/2000

1875/1875 - 16s - loss: 0.7213 - val_loss: 0.7186

Epoch 17/2000

1875/1875 - 16s - loss: 0.7215 - val_loss: 0.7180

Epoch 18/2000

1875/1875 - 16s - loss: 0.7215 - val_loss: 0.7182

Epoch 19/2000

1875/1875 - 16s - loss: 0.7212 - val_loss: 0.7174

Epoch 20/2000

1875/1875 - 16s - loss: 0.7209 - val_loss: 0.7174

Epoch 21/2000

1875/1875 - 16s - loss: 0.7208 - val_loss: 0.7185

Epoch 22/2000

1875/1875 - 16s - loss: 0.7211 - val_loss: 0.7188

Epoch 23/2000

1875/1875 - 16s - loss: 0.7208 - val_loss: 0.7179

Epoch 24/2000

1875/1875 - 16s - loss: 0.7208 - val_loss: 0.7190

Epoch 25/2000

1875/1875 - 16s - loss: 0.7208 - val_loss: 0.7194

Epoch 26/2000

1875/1875 - 16s - loss: 0.7204 - val_loss: 0.7160

Epoch 27/2000

1875/1875 - 16s - loss: 0.7209 - val_loss: 0.7154

Epoch 28/2000

1875/1875 - 16s - loss: 0.7206 - val_loss: 0.7163

Epoch 29/2000

1875/1875 - 16s - loss: 0.7204 - val_loss: 0.7188

Epoch 30/2000

1875/1875 - 16s - loss: 0.7206 - val_loss: 0.7152

Epoch 31/2000

1875/1875 - 16s - loss: 0.7206 - val_loss: 0.7182

Epoch 32/2000

1875/1875 - 16s - loss: 0.7205 - val_loss: 0.7167

Epoch 33/2000

1875/1875 - 16s - loss: 0.7208 - val_loss: 0.7169

Epoch 34/2000

1875/1875 - 16s - loss: 0.7204 - val_loss: 0.7159

Epoch 35/2000

1875/1875 - 16s - loss: 0.7198 - val_loss: 0.7179

Epoch 36/2000

1875/1875 - 16s - loss: 0.7204 - val_loss: 0.7156

Epoch 37/2000

1875/1875 - 16s - loss: 0.7200 - val_loss: 0.7157

Epoch 38/2000

1875/1875 - 16s - loss: 0.7201 - val_loss: 0.7152

Epoch 39/2000

1875/1875 - 16s - loss: 0.7202 - val_loss: 0.7184

Epoch 40/2000

Epoch 00040: ReduceLROnPlateau reducing learning rate to 0.0010000000474974513.

1875/1875 - 16s - loss: 0.7204 - val_loss: 0.7159

Epoch 41/2000

1875/1875 - 16s - loss: 0.7192 - val_loss: 0.7150

Epoch 42/2000

1875/1875 - 16s - loss: 0.7184 - val_loss: 0.7153

Epoch 43/2000

1875/1875 - 16s - loss: 0.7183 - val_loss: 0.7160

Epoch 44/2000

1875/1875 - 16s - loss: 0.7184 - val_loss: 0.7140

Epoch 45/2000

1875/1875 - 16s - loss: 0.7183 - val_loss: 0.7139

Epoch 46/2000

1875/1875 - 16s - loss: 0.7182 - val_loss: 0.7146

Epoch 47/2000

1875/1875 - 16s - loss: 0.7177 - val_loss: 0.7146

Epoch 48/2000

1875/1875 - 16s - loss: 0.7179 - val_loss: 0.7158

Epoch 49/2000

1875/1875 - 16s - loss: 0.7179 - val_loss: 0.7141

Epoch 50/2000

1875/1875 - 16s - loss: 0.7180 - val_loss: 0.7136

Epoch 51/2000

1875/1875 - 16s - loss: 0.7176 - val_loss: 0.7128

Epoch 52/2000

1875/1875 - 16s - loss: 0.7180 - val_loss: 0.7135

Epoch 53/2000

1875/1875 - 16s - loss: 0.7175 - val_loss: 0.7135

Epoch 54/2000

1875/1875 - 16s - loss: 0.7177 - val_loss: 0.7152

Epoch 55/2000

1875/1875 - 16s - loss: 0.7179 - val_loss: 0.7133

Epoch 56/2000

1875/1875 - 16s - loss: 0.7178 - val_loss: 0.7136

Epoch 57/2000

1875/1875 - 16s - loss: 0.7175 - val_loss: 0.7158

Epoch 58/2000

1875/1875 - 16s - loss: 0.7177 - val_loss: 0.7127

Epoch 59/2000

1875/1875 - 16s - loss: 0.7172 - val_loss: 0.7135

Epoch 60/2000

1875/1875 - 16s - loss: 0.7176 - val_loss: 0.7141

Epoch 61/2000

1875/1875 - 16s - loss: 0.7174 - val_loss: 0.7133

Epoch 62/2000

1875/1875 - 16s - loss: 0.7173 - val_loss: 0.7122

Epoch 63/2000

1875/1875 - 16s - loss: 0.7175 - val_loss: 0.7131

Epoch 64/2000

1875/1875 - 16s - loss: 0.7173 - val_loss: 0.7138

Epoch 65/2000

1875/1875 - 16s - loss: 0.7172 - val_loss: 0.7135

Epoch 66/2000

1875/1875 - 16s - loss: 0.7174 - val_loss: 0.7132

Epoch 67/2000

1875/1875 - 16s - loss: 0.7170 - val_loss: 0.7126

Epoch 68/2000

1875/1875 - 16s - loss: 0.7170 - val_loss: 0.7128

Epoch 69/2000

1875/1875 - 16s - loss: 0.7171 - val_loss: 0.7148

Epoch 70/2000

1875/1875 - 16s - loss: 0.7171 - val_loss: 0.7150

Epoch 71/2000

1875/1875 - 16s - loss: 0.7173 - val_loss: 0.7129

Epoch 72/2000

Epoch 00072: ReduceLROnPlateau reducing learning rate to 0.0005000000237487257.

1875/1875 - 16s - loss: 0.7170 - val_loss: 0.7124

Epoch 73/2000

1875/1875 - 16s - loss: 0.7162 - val_loss: 0.7126

Epoch 74/2000

1875/1875 - 16s - loss: 0.7161 - val_loss: 0.7121

Epoch 75/2000

1875/1875 - 16s - loss: 0.7159 - val_loss: 0.7123

Epoch 76/2000

1875/1875 - 16s - loss: 0.7159 - val_loss: 0.7123

Epoch 77/2000

1875/1875 - 16s - loss: 0.7157 - val_loss: 0.7122

Epoch 78/2000

1875/1875 - 16s - loss: 0.7164 - val_loss: 0.7116

Epoch 79/2000

1875/1875 - 16s - loss: 0.7161 - val_loss: 0.7117

Epoch 80/2000

1875/1875 - 16s - loss: 0.7156 - val_loss: 0.7122

Epoch 81/2000

1875/1875 - 16s - loss: 0.7153 - val_loss: 0.7119

Epoch 82/2000

1875/1875 - 16s - loss: 0.7159 - val_loss: 0.7117

Epoch 83/2000

1875/1875 - 16s - loss: 0.7155 - val_loss: 0.7119

Epoch 84/2000

1875/1875 - 16s - loss: 0.7155 - val_loss: 0.7122

Epoch 85/2000

1875/1875 - 16s - loss: 0.7157 - val_loss: 0.7121

Epoch 86/2000

1875/1875 - 16s - loss: 0.7150 - val_loss: 0.7120

Epoch 87/2000

1875/1875 - 16s - loss: 0.7154 - val_loss: 0.7115

Epoch 88/2000

Epoch 00088: ReduceLROnPlateau reducing learning rate to 0.0002500000118743628.

1875/1875 - 16s - loss: 0.7154 - val_loss: 0.7117

Epoch 89/2000

1875/1875 - 16s - loss: 0.7146 - val_loss: 0.7115

Epoch 90/2000

1875/1875 - 16s - loss: 0.7150 - val_loss: 0.7111

Epoch 91/2000

1875/1875 - 16s - loss: 0.7148 - val_loss: 0.7111

Epoch 92/2000

1875/1875 - 16s - loss: 0.7146 - val_loss: 0.7109

Epoch 93/2000

1875/1875 - 16s - loss: 0.7146 - val_loss: 0.7109

Epoch 94/2000

1875/1875 - 16s - loss: 0.7149 - val_loss: 0.7108

Epoch 95/2000

1875/1875 - 16s - loss: 0.7148 - val_loss: 0.7116

Epoch 96/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7105

Epoch 97/2000

1875/1875 - 16s - loss: 0.7139 - val_loss: 0.7105

Epoch 98/2000

1875/1875 - 16s - loss: 0.7147 - val_loss: 0.7110

Epoch 99/2000

1875/1875 - 16s - loss: 0.7145 - val_loss: 0.7110

Epoch 100/2000

1875/1875 - 16s - loss: 0.7149 - val_loss: 0.7111

Epoch 101/2000

1875/1875 - 16s - loss: 0.7149 - val_loss: 0.7114

Epoch 102/2000

1875/1875 - 16s - loss: 0.7146 - val_loss: 0.7110

Epoch 103/2000

1875/1875 - 16s - loss: 0.7143 - val_loss: 0.7109

Epoch 104/2000

1875/1875 - 16s - loss: 0.7148 - val_loss: 0.7111

Epoch 105/2000

1875/1875 - 16s - loss: 0.7145 - val_loss: 0.7105

Epoch 106/2000

Epoch 00106: ReduceLROnPlateau reducing learning rate to 0.0001250000059371814.

1875/1875 - 16s - loss: 0.7145 - val_loss: 0.7109

Epoch 107/2000

1875/1875 - 16s - loss: 0.7143 - val_loss: 0.7108

Epoch 108/2000

1875/1875 - 16s - loss: 0.7145 - val_loss: 0.7109

Epoch 109/2000

1875/1875 - 16s - loss: 0.7143 - val_loss: 0.7105

Epoch 110/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7107

Epoch 111/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7107

Epoch 112/2000

1875/1875 - 16s - loss: 0.7137 - val_loss: 0.7104

Epoch 113/2000

1875/1875 - 16s - loss: 0.7142 - val_loss: 0.7111

Epoch 114/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7104

Epoch 115/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7105

Epoch 116/2000

1875/1875 - 16s - loss: 0.7144 - val_loss: 0.7106

Epoch 117/2000

1875/1875 - 16s - loss: 0.7142 - val_loss: 0.7103

Epoch 118/2000

1875/1875 - 16s - loss: 0.7142 - val_loss: 0.7108

Epoch 119/2000

1875/1875 - 16s - loss: 0.7139 - val_loss: 0.7103

Epoch 120/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7105

Epoch 121/2000

1875/1875 - 16s - loss: 0.7141 - val_loss: 0.7103

Epoch 122/2000

1875/1875 - 16s - loss: 0.7134 - val_loss: 0.7104

Epoch 123/2000

1875/1875 - 16s - loss: 0.7136 - val_loss: 0.7101

Epoch 124/2000

1875/1875 - 16s - loss: 0.7143 - val_loss: 0.7105

Epoch 125/2000

1875/1875 - 16s - loss: 0.7141 - val_loss: 0.7106

Epoch 126/2000

1875/1875 - 16s - loss: 0.7141 - val_loss: 0.7109

Epoch 127/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7105

Epoch 128/2000

1875/1875 - 16s - loss: 0.7138 - val_loss: 0.7109

Epoch 129/2000

1875/1875 - 16s - loss: 0.7139 - val_loss: 0.7103

Epoch 130/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7104

Epoch 131/2000

1875/1875 - 16s - loss: 0.7141 - val_loss: 0.7106

Epoch 132/2000

1875/1875 - 16s - loss: 0.7137 - val_loss: 0.7109

Epoch 133/2000

Epoch 00133: ReduceLROnPlateau reducing learning rate to 6.25000029685907e-05.

1875/1875 - 16s - loss: 0.7138 - val_loss: 0.7102

Epoch 134/2000

1875/1875 - 16s - loss: 0.7135 - val_loss: 0.7103

Epoch 135/2000

1875/1875 - 16s - loss: 0.7138 - val_loss: 0.7102

Epoch 136/2000

1875/1875 - 16s - loss: 0.7134 - val_loss: 0.7101

Epoch 137/2000

1875/1875 - 16s - loss: 0.7137 - val_loss: 0.7101

Epoch 138/2000

1875/1875 - 16s - loss: 0.7139 - val_loss: 0.7103

Epoch 139/2000

1875/1875 - 16s - loss: 0.7137 - val_loss: 0.7103

Epoch 140/2000

1875/1875 - 16s - loss: 0.7137 - val_loss: 0.7101

Epoch 141/2000

1875/1875 - 16s - loss: 0.7134 - val_loss: 0.7101

Epoch 142/2000

1875/1875 - 16s - loss: 0.7138 - val_loss: 0.7105

Epoch 143/2000

Epoch 00143: ReduceLROnPlateau reducing learning rate to 3.125000148429535e-05.

1875/1875 - 16s - loss: 0.7135 - val_loss: 0.7104

Epoch 144/2000

1875/1875 - 16s - loss: 0.7137 - val_loss: 0.7104

Epoch 145/2000

1875/1875 - 16s - loss: 0.7132 - val_loss: 0.7101

Epoch 151/2000

1875/1875 - 16s - loss: 0.7135 - val_loss: 0.7102

Epoch 152/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7103

Epoch 153/2000

1875/1875 - 16s - loss: 0.7134 - val_loss: 0.7102

Epoch 154/2000

1875/1875 - 16s - loss: 0.7135 - val_loss: 0.7101

Epoch 155/2000

1875/1875 - 16s - loss: 0.7135 - val_loss: 0.7101

Epoch 156/2000

1875/1875 - 16s - loss: 0.7133 - val_loss: 0.7101

Epoch 157/2000

Epoch 00157: ReduceLROnPlateau reducing learning rate to 1.5625000742147677e-05.

1875/1875 - 16s - loss: 0.7136 - val_loss: 0.7103

Epoch 158/2000

1875/1875 - 16s - loss: 0.7132 - val_loss: 0.7102

Epoch 159/2000

1875/1875 - 16s - loss: 0.7133 - val_loss: 0.7102

Epoch 160/2000

1875/1875 - 16s - loss: 0.7134 - val_loss: 0.7102

Epoch 161/2000

1875/1875 - 16s - loss: 0.7134 - val_loss: 0.7101

Epoch 162/2000

1875/1875 - 16s - loss: 0.7132 - val_loss: 0.7101

Epoch 163/2000

1875/1875 - 16s - loss: 0.7134 - val_loss: 0.7101

Epoch 164/2000

1875/1875 - 16s - loss: 0.7131 - val_loss: 0.7101

Epoch 165/2000

1875/1875 - 16s - loss: 0.7135 - val_loss: 0.7101

Epoch 166/2000

1875/1875 - 16s - loss: 0.7136 - val_loss: 0.7102

Epoch 167/2000

Epoch 00167: ReduceLROnPlateau reducing learning rate to 7.812500371073838e-06.

1875/1875 - 16s - loss: 0.7133 - val_loss: 0.7101

4.3 MLP (sigmoid output), no swap noise: best validation RMSE 0.7131

# Baseline: same model and callbacks, but no swap noise (shuffle_type=0).
# Note: shuffle=False also disables per-epoch reshuffling of the training order.
training_generator2 = DataGenerator(IDs=list(range(X_tr_dnn_linear_gaussian.shape[0])),
                                    features=X_tr_dnn_linear_gaussian.values,
                                    labels=y_tr.values, shuffle_type=0, shuffle=False)
validation_generator2 = DataGenerator(IDs=list(range(X_val_dnn_linear_gaussian.shape[0])),
                                      features=X_val_dnn_linear_gaussian.values,
                                      labels=y_val.values, shuffle_type=0, shuffle=False)

K.clear_session()
model_weights = './models/model_swap_nonoise.h5'
model_mlp_base = MLP_Model()
checkpoint = ModelCheckpoint(model_weights, monitor='val_loss', verbose=0, save_best_only=True, mode='min',
                             save_weights_only=True)
plateau = ReduceLROnPlateau(monitor='val_loss', factor=0.5, patience=10, verbose=1, min_delta=1e-4, mode='min')
early_stopping = EarlyStopping(monitor='val_loss', patience=20)

def RMSE(y_true, y_pred):
    return tf.sqrt(tf.reduce_mean(tf.square(y_true - y_pred)))

adam = tf.keras.optimizers.Adam(learning_rate=1e-3 * 2)
model_mlp_base.compile(optimizer=adam, loss=RMSE)
history = model_mlp_base.fit(training_generator2,
                             validation_data=validation_generator2,
                             epochs=2000,
                             use_multiprocessing=True,
                             workers=10,
                             callbacks=[plateau, checkpoint, early_stopping],
                             verbose=2)

Epoch 1/2000

WARNING:tensorflow:Entity > could not be transformed and will be executed as-is. Please report this to the AutoGraph team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output. Cause: Bad argument number for Name: 3, expecting 4


1875/1875 - 16s - loss: 0.7477 - val_loss: 0.7232

Epoch 2/2000

1875/1875 - 16s - loss: 0.7249 - val_loss: 0.7196

Epoch 3/2000

1875/1875 - 16s - loss: 0.7194 - val_loss: 0.7180

Epoch 4/2000

1875/1875 - 16s - loss: 0.7168 - val_loss: 0.7178

Epoch 5/2000

1875/1875 - 16s - loss: 0.7159 - val_loss: 0.7172

Epoch 6/2000

1875/1875 - 16s - loss: 0.7154 - val_loss: 0.7177

Epoch 7/2000

1875/1875 - 16s - loss: 0.7150 - val_loss: 0.7166

Epoch 8/2000

1875/1875 - 16s - loss: 0.7145 - val_loss: 0.7165

Epoch 9/2000

1875/1875 - 16s - loss: 0.7140 - val_loss: 0.7159

Epoch 10/2000

1875/1875 - 16s - loss: 0.7136 - val_loss: 0.7162

Epoch 11/2000

1875/1875 - 16s - loss: 0.7131 - val_loss: 0.7192

Epoch 12/2000

1875/1875 - 16s - loss: 0.7130 - val_loss: 0.7160

Epoch 13/2000

1875/1875 - 16s - loss: 0.7122 - val_loss: 0.7144

Epoch 14/2000

1875/1875 - 16s - loss: 0.7119 - val_loss: 0.7157

Epoch 15/2000

1875/1875 - 16s - loss: 0.7117 - val_loss: 0.7152

Epoch 16/2000

1875/1875 - 16s - loss: 0.7114 - val_loss: 0.7148

Epoch 17/2000

1875/1875 - 16s - loss: 0.7110 - val_loss: 0.7153

Epoch 18/2000

1875/1875 - 16s - loss: 0.7111 - val_loss: 0.7146

Epoch 19/2000

1875/1875 - 16s - loss: 0.7107 - val_loss: 0.7143

Epoch 20/2000

1875/1875 - 16s - loss: 0.7102 - val_loss: 0.7138

Epoch 21/2000

1875/1875 - 16s - loss: 0.7099 - val_loss: 0.7147

Epoch 22/2000

1875/1875 - 16s - loss: 0.7099 - val_loss: 0.7155

Epoch 23/2000

1875/1875 - 16s - loss: 0.7093 - val_loss: 0.7152

Epoch 24/2000

1875/1875 - 16s - loss: 0.7090 - val_loss: 0.7162

Epoch 25/2000

1875/1875 - 16s - loss: 0.7087 - val_loss: 0.7140

Epoch 26/2000

1875/1875 - 16s - loss: 0.7087 - val_loss: 0.7151

Epoch 27/2000

1875/1875 - 16s - loss: 0.7081 - val_loss: 0.7136

Epoch 28/2000

1875/1875 - 16s - loss: 0.7077 - val_loss: 0.7146

Epoch 29/2000

1875/1875 - 16s - loss: 0.7074 - val_loss: 0.7150

Epoch 30/2000

1875/1875 - 16s - loss: 0.7075 - val_loss: 0.7149

Epoch 31/2000

1875/1875 - 16s - loss: 0.7075 - val_loss: 0.7141

Epoch 32/2000

1875/1875 - 16s - loss: 0.7070 - val_loss: 0.7146

Epoch 33/2000

1875/1875 - 16s - loss: 0.7067 - val_loss: 0.7164

Epoch 34/2000

1875/1875 - 16s - loss: 0.7062 - val_loss: 0.7150

Epoch 35/2000

1875/1875 - 16s - loss: 0.7059 - val_loss: 0.7147

Epoch 36/2000

1875/1875 - 16s - loss: 0.7058 - val_loss: 0.7145

Epoch 37/2000

Epoch 00037: ReduceLROnPlateau reducing learning rate to 0.0010000000474974513.

1875/1875 - 16s - loss: 0.7060 - val_loss: 0.7163

Epoch 38/2000

1875/1875 - 16s - loss: 0.7020 - val_loss: 0.7131

Epoch 39/2000

1875/1875 - 16s - loss: 0.7000 - val_loss: 0.7136

Epoch 40/2000

1875/1875 - 16s - loss: 0.6998 - val_loss: 0.7142

Epoch 41/2000

1875/1875 - 16s - loss: 0.6991 - val_loss: 0.7140

Epoch 42/2000

1875/1875 - 16s - loss: 0.6986 - val_loss: 0.7139

Epoch 43/2000

1875/1875 - 16s - loss: 0.6985 - val_loss: 0.7142

Epoch 44/2000

1875/1875 - 16s - loss: 0.6980 - val_loss: 0.7140

Epoch 45/2000

1875/1875 - 16s - loss: 0.6974 - val_loss: 0.7145

Epoch 46/2000

1875/1875 - 16s - loss: 0.6971 - val_loss: 0.7146

Epoch 47/2000

1875/1875 - 16s - loss: 0.6964 - val_loss: 0.7142

Epoch 48/2000

Epoch 00048: ReduceLROnPlateau reducing learning rate to 0.0005000000237487257.

1875/1875 - 16s - loss: 0.6965 - val_loss: 0.7142

Epoch 49/2000

1875/1875 - 16s - loss: 0.6936 - val_loss: 0.7142

Epoch 50/2000

1875/1875 - 16s - loss: 0.6930 - val_loss: 0.7146

Epoch 51/2000

1875/1875 - 16s - loss: 0.6927 - val_loss: 0.7143

Epoch 52/2000

1875/1875 - 16s - loss: 0.6918 - val_loss: 0.7147

Epoch 53/2000

1875/1875 - 16s - loss: 0.6916 - val_loss: 0.7147

Epoch 54/2000

1875/1875 - 16s - loss: 0.6907 - val_loss: 0.7147

Epoch 55/2000

1875/1875 - 16s - loss: 0.6906 - val_loss: 0.7147

Epoch 56/2000

1875/1875 - 16s - loss: 0.6907 - val_loss: 0.7151

Epoch 57/2000

1875/1875 - 16s - loss: 0.6898 - val_loss: 0.7150

Epoch 58/2000

Epoch 00058: ReduceLROnPlateau reducing learning rate to 0.0002500000118743628.

1875/1875 - 16s - loss: 0.6900 - val_loss: 0.7149

03

References

1. https://www.kaggle.com/springmanndaniel/1st-place-turn-your-data-into-daeta
