[Deep Learning] Ivy, an open-source framework: has the era of unified deep learning arrived?

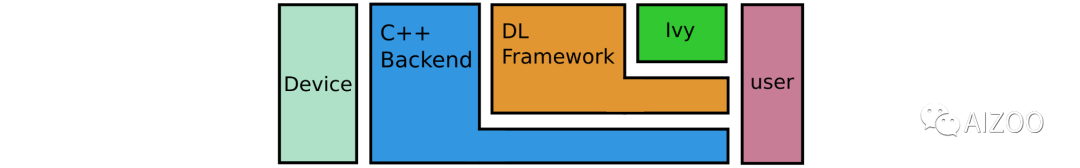

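Here it comes, bringing a single interface to the mainstream deep learning frameworks. Ivy is a recently open-sourced framework that wraps several mainstream frameworks behind one unified API: PyTorch, TensorFlow, MXNet, JAX and NumPy. Let's take a quick look at it.

Code: https://github.com/unifyai/ivy

First, here is how the Ivy project describes itself.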

Ivy is a unified machine learning framework which maximizes the portability of machine learning codebases. Ivy wraps the functional APIs of existing frameworks. Framework-agnostic functions, libraries and layers can then be written using Ivy, with simultaneous support for all frameworks. Ivy currently supports Jax, TensorFlow, PyTorch, MXNet and Numpy.

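In short: Ivy wraps the functional APIs of the existing frameworks, so code written against Ivy can run on all of them.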

Quick Start

Install Ivy with pip install ivy-core. You can then train a model with whichever framework you prefer. Here is an example:

    import ivy

    class MyModel(ivy.Module):
        def __init__(self):
            # Two fully connected layers: 3 -> 64 -> 1
            self.linear0 = ivy.Linear(3, 64)
            self.linear1 = ivy.Linear(64, 1)
            ivy.Module.__init__(self)

        def _forward(self, x):
            x = ivy.relu(self.linear0(x))
            return ivy.sigmoid(self.linear1(x))

    ivy.set_framework('torch')  # change to any framework!
    model = MyModel()
    optimizer = ivy.Adam(1e-4)
    x_in = ivy.array([1., 2., 3.])
    target = ivy.array([0.])

    def loss_fn(v):
        # v holds the model variables; the loss is the mean squared error
        out = model(x_in, v=v)
        return ivy.reduce_mean((out - target)**2)[0]

    for step in range(100):
        # Compute the loss and its gradients, then update the variables
        loss, grads = ivy.execute_with_gradients(loss_fn, model.v)
        model.v = optimizer.step(model.v, grads)
        print('step {} loss {}'.format(step, ivy.to_numpy(loss).item()))

    print('Finished training!')

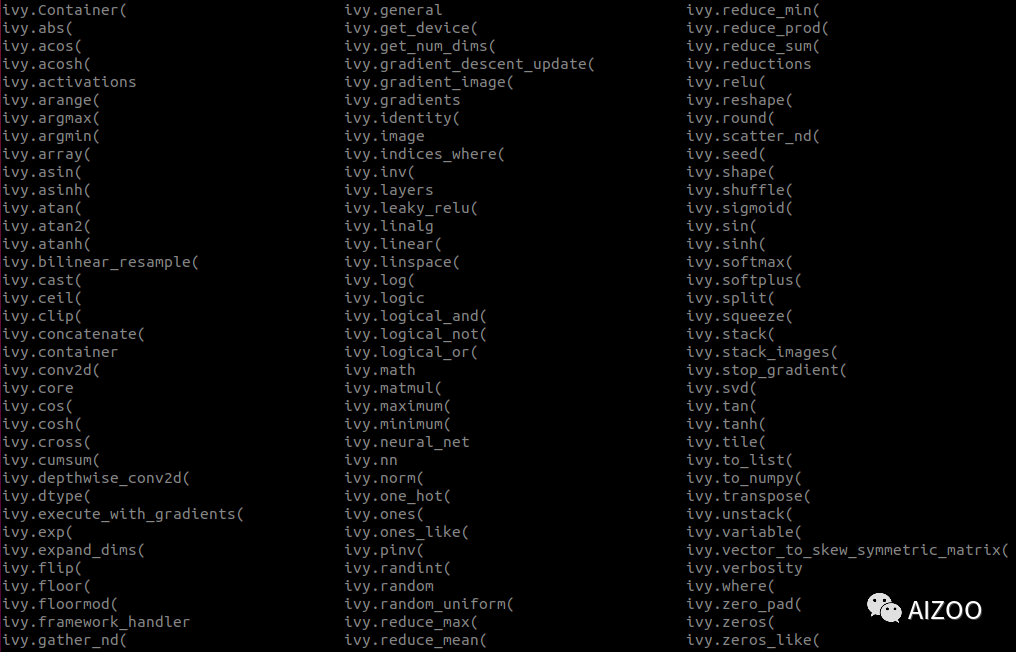

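Framework-agnostic functions

In the example, the same ivy.concatenate call is given arrays from each of the five frameworks: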

    import jax.numpy as jnp
    import tensorflow as tf
    import numpy as np
    import mxnet as mx
    import torch
    import ivy

    jax_concatted = ivy.concatenate((jnp.ones((1,)), jnp.ones((1,))), -1)
    tf_concatted = ivy.concatenate((tf.ones((1,)), tf.ones((1,))), -1)
    np_concatted = ivy.concatenate((np.ones((1,)), np.ones((1,))), -1)
    mx_concatted = ivy.concatenate((mx.nd.ones((1,)), mx.nd.ones((1,))), -1)
    torch_concatted = ivy.concatenate((torch.ones((1,)), torch.ones((1,))), -1)
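
One way a single function can accept arrays from several frameworks is to look at the type of its input and forward the call to the matching library. The sketch below is only an illustration of that idea, not Ivy's actual implementation: the registry `_CONCAT_BACKENDS` and the helpers `_root_module` and `_concat_builtin` are hypothetical names, and only plain Python lists and (if installed) NumPy arrays are registered.

```python
# Hypothetical sketch of type-based dispatch; these names are NOT Ivy internals.

def _root_module(x):
    """Return the top-level package that defines type(x), e.g. 'numpy'."""
    return type(x).__module__.split('.')[0]

# Registry mapping a package name to a concatenate implementation.
_CONCAT_BACKENDS = {}

def _concat_builtin(xs, axis):
    # Plain Python sequences are treated as 1-D, so only axis 0 or -1 is valid.
    if axis not in (0, -1):
        raise ValueError('lists only support axis 0 or -1')
    out = []
    for x in xs:
        out.extend(x)
    return out

_CONCAT_BACKENDS['builtins'] = _concat_builtin

try:
    import numpy as np
    _CONCAT_BACKENDS['numpy'] = lambda xs, axis: np.concatenate(xs, axis)
except ImportError:
    pass  # NumPy is optional for this sketch

def concatenate(xs, axis=-1):
    """Forward the call to the backend that owns the first input's type."""
    key = _root_module(xs[0])
    if key not in _CONCAT_BACKENDS:
        raise TypeError('no backend registered for {!r}'.format(key))
    return _CONCAT_BACKENDS[key](xs, axis)

print(concatenate(([1.0], [1.0]), -1))  # prints [1.0, 1.0]
```

Other libraries could be registered in the same way, each under the name of its top-level package.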

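What is the point of unifying all the frameworks?

Take ivy.clip as an example: it neatly wraps the corresponding function of each underlying framework.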
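
To make the idea of such a wrapper concrete, here is a minimal sketch of a backend-switching clip function. It is an assumption about the general pattern, not Ivy's source code: `register_backend`, the `_BACKENDS` table, and this toy `set_framework` are hypothetical stand-ins, and a pure-Python backend is included so the sketch runs without any framework installed. In the real libraries the wrapped calls would be functions such as `np.clip`, `torch.clamp` or `tf.clip_by_value`.

```python
# Hypothetical sketch of a backend-switching wrapper; not Ivy's actual source.

_BACKENDS = {}    # backend name -> dict of functions provided by that backend
_current = None   # name of the currently selected backend

def register_backend(name, **functions):
    """Record the functions a backend provides, e.g. clip=..."""
    _BACKENDS[name] = functions

def set_framework(name):
    """Select the backend that later calls are forwarded to."""
    global _current
    if name not in _BACKENDS:
        raise ValueError('unknown framework: {!r}'.format(name))
    _current = name

def clip(x, x_min, x_max):
    """Limit every value of x to [x_min, x_max] using the active backend."""
    if _current is None:
        raise RuntimeError('call set_framework() first')
    return _BACKENDS[_current]['clip'](x, x_min, x_max)

# Pure-Python backend operating on flat lists of numbers.
register_backend(
    'python',
    clip=lambda x, lo, hi: [min(max(v, lo), hi) for v in x],
)

try:
    import numpy as np
    register_backend('numpy', clip=lambda x, lo, hi: np.clip(x, lo, hi))
except ImportError:
    pass  # NumPy is optional for this sketch

set_framework('python')
print(clip([-2.0, 0.5, 3.0], 0.0, 1.0))  # prints [0.0, 0.5, 1.0]
```

The caller only ever sees one `clip` signature; switching the backend changes which library does the work, not the code that calls it.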
