Neo4j入门(四)批量更新节点属性

本文将用于介绍如何使用py2neo来实现Neo4j的批量更新节点属性。

单节点属性更新

首先我们先来看单个节点如何实现节点的属性,比如现有Neo4j图数据库中存在节点,其label为Test,属性有name=上海,我们需要为该节点增加属性enname=shanghai。

以下为Python示例代码:

# -*- coding: utf-8 -*-

from py2neo import Graph

from py2neo import NodeMatcher

# 连接Neo4j

url = "http://localhost:7474"

username = "neo4j"

password = "******"

graph = Graph(url, auth=(username, password))

print("neo4j info: {}".format(str(graph)))

# 查询节点

node_matcher = NodeMatcher(graph)

node = node_matcher.match('Test', name="上海").first()

# 新增enname属性

node["enname"] = "shanghai"

graph.push(node)

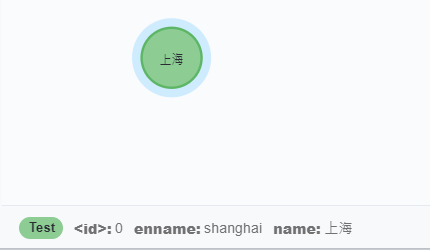

此时Neo4j图数据库中的该节点属性已经更新,见下图:

多节点单次更新

我们先利用Python代码,在Neo4j图数据库中创建10000个节点,代码如下:

# -*- coding: utf-8 -*-

from py2neo import Graph, Node, Subgraph

# 连接Neo4j

url = "http://localhost:7474"

username = "neo4j"

password = "******"

graph = Graph(url, auth=(username, password))

print("neo4j info: {}".format(str(graph)))

# 创建10000个节点

node_list = [Node("Test", name=f"上海_{i}") for i in range(10000)]

graph.create(Subgraph(nodes=node_list))

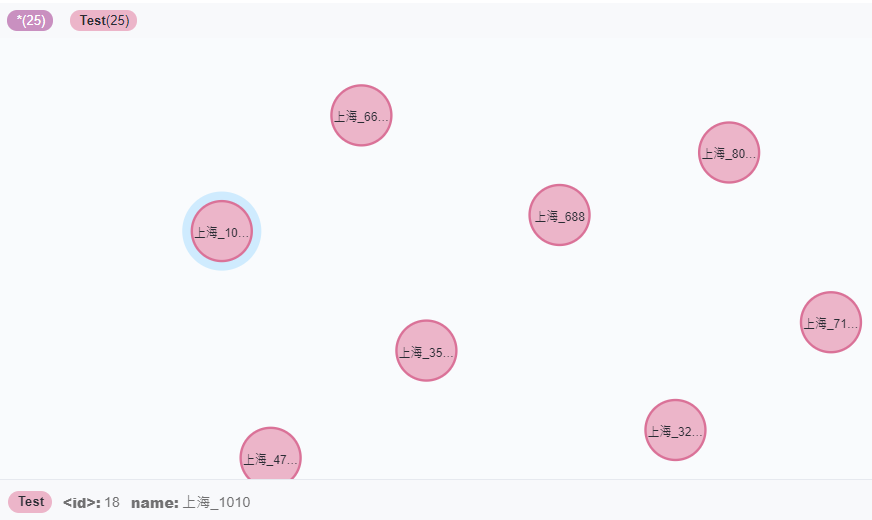

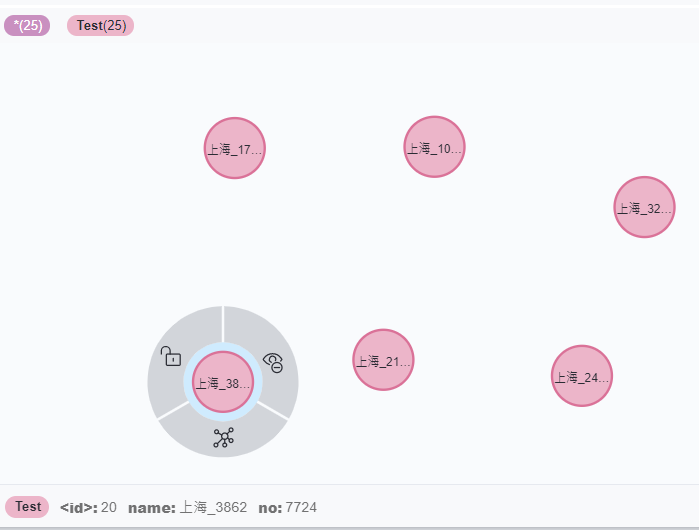

如下图所示:

我们对每个节点进行查询,再一个个为其新增

no属性,记录下10000个节点的属性更新的耗时。Python代码如下:# -*- coding: utf-8 -*-

import time

from py2neo import Graph, NodeMatcher

# 连接Neo4j

url = "http://localhost:7474"

username = "neo4j"

password = "******"

graph = Graph(url, auth=(username, password))

print("neo4j info: {}".format(str(graph)))

# 查询节点

node_list = []

for i in range(10000):

node_matcher = NodeMatcher(graph)

node = node_matcher.match('Test', name=f"上海_{i}").first()

node_list.append(node)

print("find nodes.")

# 一个个为节点新增no数据

s_time = time.time()

for i, node in enumerate(node_list):

node["no"] = i * 2

graph.push(node)

e_time = time.time()

print("cost time: {}".format(e_time - s_time))

运行结果如下:

cost time: 55.00530457496643

多节点批量更新

接下来,我们将节点属性进行批量更新。

# -*- coding: utf-8 -*-

import time

from py2neo import Graph, NodeMatcher, Subgraph

# 连接Neo4j

url = "http://localhost:7474"

username = "neo4j"

password = "******"

graph = Graph(url, auth=(username, password))

print("neo4j info: {}".format(str(graph)))

# 查询节点

node_list = []

for i in range(10000):

node_matcher = NodeMatcher(graph)

node = node_matcher.match('Test', name=f"上海_{i}").first()

node_list.append(node)

print("find nodes.")

print(len(node_list))

# 列表划分

def chunks(lst, n):

for i in range(0, len(lst), n):

yield lst[i:i + n]

# 批量更新

s_time = time.time()

for i, node in enumerate(node_list):

node["no"] = i * 2

for _ in chunks(node_list, 1000):

graph.push(Subgraph(nodes=_))

e_time = time.time()

print("cost time: {}".format(e_time - s_time))

运行结果是:

cost time: 15.243977546691895

可以看到,批量更新节点属性效率会高很多!当然,如果需要更新的批数比较多,还可以借助多线程或多进程来进一步提升性能。

评论