What? The "all-powerful" Transformer is about to be knocked off its pedestal...

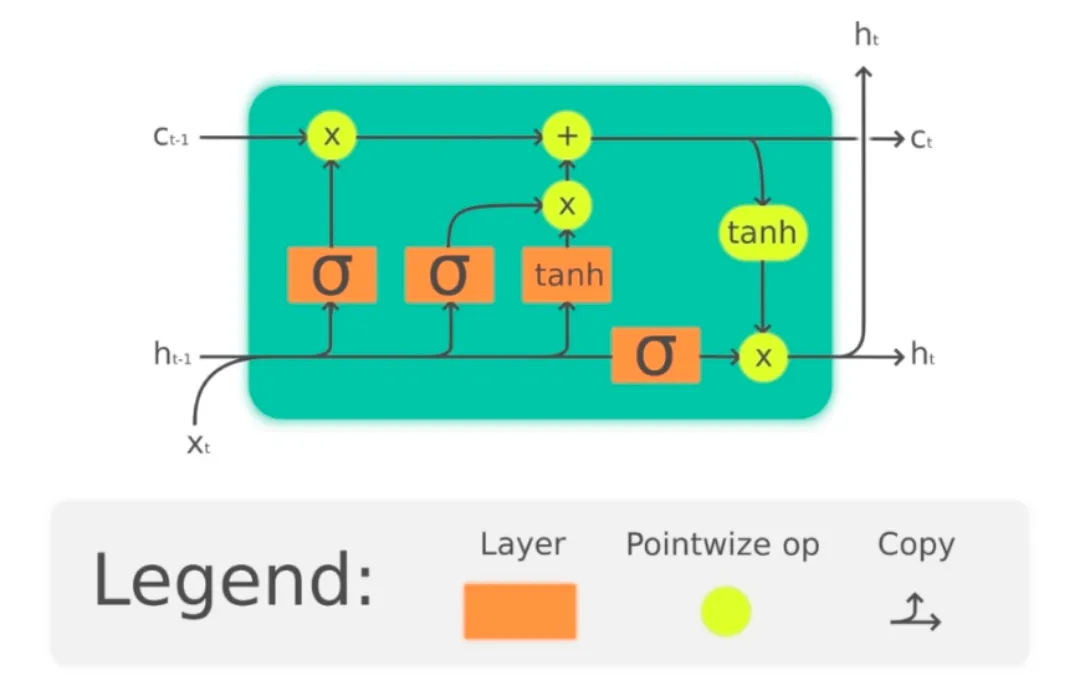

Google research scientist David Ha: "Transformers are the new LSTMs."

Paper: Taming Transformers for High-Resolution Image Synthesis

Link: https://arxiv.org/pdf/2012.09841v1.pdf

Paper: TransTrack: Multiple-Object Tracking with Transformer

Link: https://arxiv.org/pdf/2012.15460v1.pdf

Paper: Compound Word Transformer: Learning to Compose Full-Song Music over Dynamic Directed Hypergraphs

Link: https://arxiv.org/pdf/2101.02402v1.pdf

Paper: Dance Revolution: Long-Term Dance Generation with Music via Curriculum Learning

Link: https://arxiv.org/pdf/2006.06119v5.pdf

Paper: Self-Attention Based Context-Aware 3D Object Detection

Link: https://arxiv.org/pdf/2101.02672v1.pdf

Paper: PCT: Point Cloud Transformer

Link: https://arxiv.org/pdf/2012.09688v1.pdf

Paper: Temporal Fusion Transformers for Interpretable Multi-horizon Time Series Forecasting

Link: https://arxiv.org/pdf/1912.09363v3.pdf

Paper: VinVL: Making Visual Representations Matter in Vision-Language Models

Link: https://arxiv.org/pdf/2101.00529v1.pdf

Paper: End-to-end Lane Shape Prediction with Transformers

Link: https://arxiv.org/pdf/2011.04233v2.pdf

Paper: Deformable DETR: Deformable Transformers for End-to-End Object Detection

Link: https://arxiv.org/pdf/2010.04159v2.pdf

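Some of the material in this article was collected from the internet. If any of it infringes your rights, please contact us and we will remove it.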

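2020, the Year of the Rat, was a truly tough year, and now it is behind us. On this Lunar New Year's Eve, we sincerely wish everyone a happy New Year!

In the new year, 七月在线 (July Online) will work hard to publish even more high-quality, practical content. Once again, Happy New Year to all! May your fortunes soar in the Year of the Ox!

To celebrate, we have prepared an annual giveaway: flash sales of our intensive training courses for just 0.01 yuan.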