【深度学习】神经网络模型特征重要性可以查看了!!!

查看NN模型特征重要性的技巧

我们都知道树模型的特征重要性是非常容易绘制出来的,只需要直接调用树模型自带的API即可以得到在树模型中每个特征的重要性,那么对于神经网络我们该如何得到其特征重要性呢?

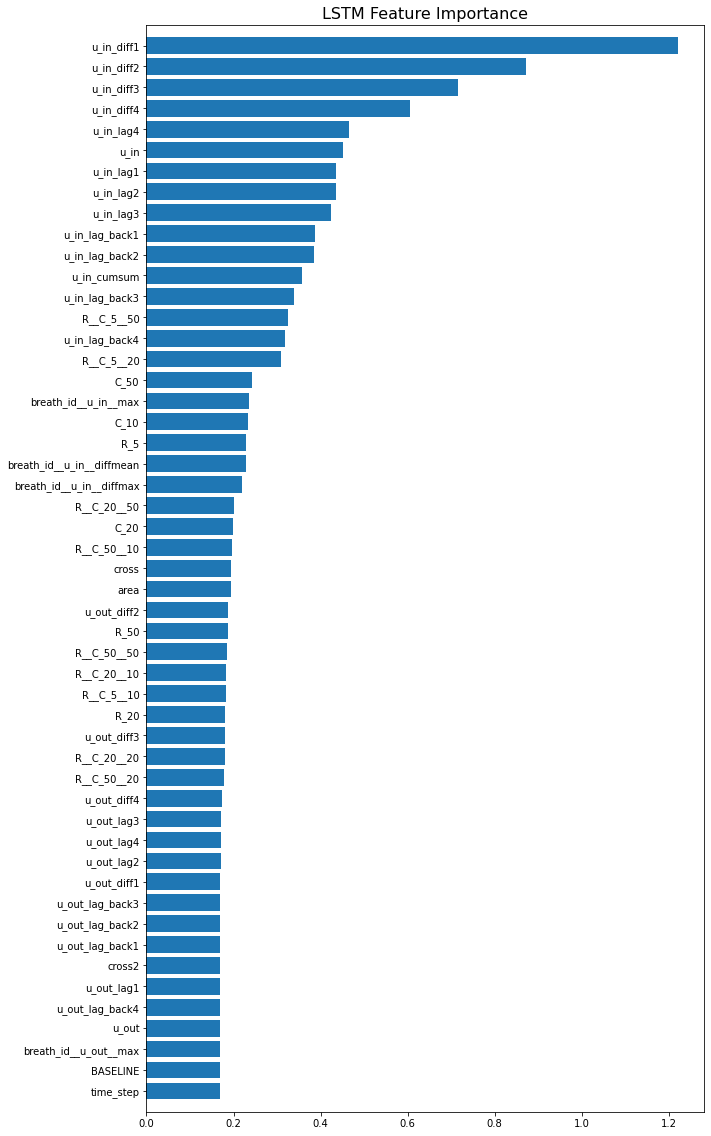

本篇文章我们就以LSTM为例,来介绍神经网络中模型特征重要性的一种获取方式。

基本思路

该策略的思想来源于:Permutation Feature Importance,我们以特征对于模型最终预测结果的变化来衡量特征的重要性。

实现步骤

NN模型特征重要性的获取步骤如下:

训练一个NN; 每次获取一个特征列,然后对其进行随机shuffle,使用模型对其进行预测并得到Loss; 记录每个特征列以及其对应的Loss; 每个Loss就是该特征对应的特征重要性,如果Loss越大,说明该特征对于NN模型越加重要;反之,则越加不重要。

代码摘自:https://www.kaggle.com/cdeotte/lstm-feature-importance/notebook

import matplotlib.pyplot as plt

from tqdm.notebook import tqdm

import tensorflow as tf

from tensorflow import keras

import tensorflow.keras.backend as K

from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint

from tensorflow.keras.callbacks import LearningRateScheduler, ReduceLROnPlateau

from tensorflow.keras.optimizers.schedules import ExponentialDecay

from sklearn.metrics import mean_absolute_error as mae

from sklearn.preprocessing import RobustScaler, normalize

from sklearn.model_selection import train_test_split, GroupKFold, KFold

from IPython.display import display

COMPUTE_LSTM_IMPORTANCE = 1

ONE_FOLD_ONLY = 1

with gpu_strategy.scope():

kf = KFold(n_splits=NUM_FOLDS, shuffle=True, random_state=2021)

test_preds = []

for fold, (train_idx, test_idx) in enumerate(kf.split(train, targets)):

K.clear_session()

print('-'*15, '>', f'Fold {fold+1}', '<', '-'*15)

X_train, X_valid = train[train_idx], train[test_idx]

y_train, y_valid = targets[train_idx], targets[test_idx]

# 导入已经训练好的模型

model = keras.models.load_model('models/XXX.h5')

# 计算特征重要性

if COMPUTE_LSTM_IMPORTANCE:

results = []

print(' Computing LSTM feature importance...')

for k in tqdm(range(len(COLS))):

if k>0:

save_col = X_valid[:,:,k-1].copy()

np.random.shuffle(X_valid[:,:,k-1])

oof_preds = model.predict(X_valid, verbose=0).squeeze()

mae = np.mean(np.abs( oof_preds-y_valid ))

results.append({'feature':COLS[k],'mae':mae})

if k>0:

X_valid[:,:,k-1] = save_col

# 展示特征重要性

print()

df = pd.DataFrame(results)

df = df.sort_values('mae')

plt.figure(figsize=(10,20))

plt.barh(np.arange(len(COLS)),df.mae)

plt.yticks(np.arange(len(COLS)),df.feature.values)

plt.title('LSTM Feature Importance',size=16)

plt.ylim((-1,len(COLS)))

plt.show()

# SAVE LSTM FEATURE IMPORTANCE

df = df.sort_values('mae',ascending=False)

df.to_csv(f'lstm_feature_importance_fold_{fold}.csv',index=False)

# ONLY DO ONE FOLD

if ONE_FOLD_ONLY: break

https://www.kaggle.com/cdeotte/lstm-feature-importance/notebook Permutation Feature Importance

往期精彩回顾 本站qq群851320808,加入微信群请扫码:

评论