Building Alluxio from Source for CDH

I. Building from Source

Make sure Java (JDK 8 or later) and Maven 3.3.9 or later are installed.
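Before starting a build that takes over half an hour, it can help to confirm the Maven version up front. The following is a minimal sketch, not part of the original procedure: `version_ge` and `check_maven` are hypothetical helper names, and the comparison assumes `sort -V` from GNU coreutils is available.

```shell
# Hypothetical helper: succeeds when version $1 is greater than or equal to version $2.
# Assumes `sort -V` (version sort) from GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: compare the installed Maven against the 3.3.9 minimum.
check_maven() {
  if ! command -v mvn >/dev/null 2>&1; then
    echo "mvn not found on PATH" >&2
    return 1
  fi
  # `mvn -v` prints a first line such as "Apache Maven 3.6.3 (...)".
  mvn_version=$(mvn -v 2>/dev/null | awk '/^Apache Maven/ {print $3; exit}')
  if version_ge "$mvn_version" "3.3.9"; then
    echo "Maven $mvn_version is new enough"
  else
    echo "Maven '$mvn_version' is older than 3.3.9" >&2
    return 1
  fi
}
```

Calling `check_maven` before `mvn clean install` fails fast on an outdated toolchain instead of partway through the build.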

[root@song build]# git clone https://github.com/Alluxio/alluxio.git

[root@song build]# cd alluxio/

[root@song alluxio]# git checkout v2.4.1-1

[root@song alluxio]# mvn clean install -Phadoop-3 -Dhadoop.version=3.0.0-cdh6.3.2 -DskipTests

The build succeeds:

[INFO] Alluxio Under File System - Tencent Cloud COSN ..... SUCCESS [ 27.366 s]

[INFO] Alluxio UI ......................................... SUCCESS [05:49 min]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 35:53 min

[INFO] Finished at: 2021-01-26T21:38:17+08:00

[INFO] Final Memory: 213M/682M

[INFO] ------------------------------------------------------------------------

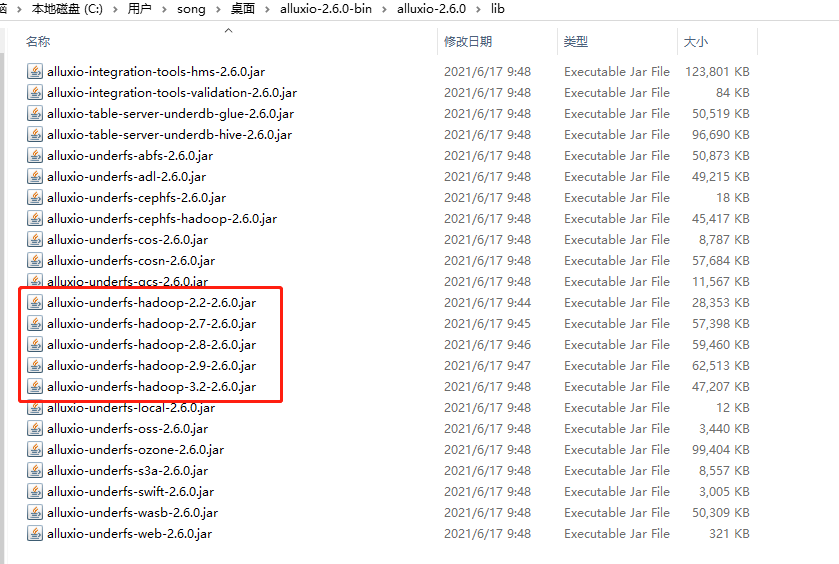

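The official binary package is built against Apache Hadoop 3.3 and bundles jars adapted to several HDFS versions.

A package you build yourself does not include those version-specific jars; you have to build them separately for each HDFS version you need.

As the figure shows, the official binary package contains jars adapted to multiple HDFS versions.

For example, if your cluster runs Hadoop 3.0.0-cdh6.3.2, the official package built against Apache Hadoop 3.3 may have compatibility problems with it, so you should build Alluxio against your own Hadoop version.

Otherwise the two packages are the same: the only difference is that the lib directory of a self-built package lacks many of the Hadoop-version-specific jars, which you must build yourself.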

II. Building for Multiple Hadoop Versions

For example, to support Apache Hadoop 2.8.0, follow these steps.

Step 1: Build alluxio-shaded-hadoop-2.8.0.jar:

[song@k8s-node-02 Alluxio]$ cd shaded/hadoop/

[song@k8s-node-02 hadoop]$ mvn -T 4C clean install -Dmaven.javadoc.skip=true -DskipTests -Dlicense.skip=true -Dcheckstyle.skip=true -Dfindbugs.skip=true -Pufs-hadoop-2 -Dufs.hadoop.version=2.8.0

[song@k8s-node-02 hadoop]$ ll target/

total 28836

-rw-rw-r-- 1 song song 29523360 Jul 7 09:42 alluxio-shaded-hadoop-2.8.0.jar

drwxrwxr-x 2 song song 28 Jul 7 09:41 maven-archiver

-rw-rw-r-- 1 song song 3244 Jul 7 09:41 original-alluxio-shaded-hadoop-2.8.0.jar

If you skip this step, the Maven build in step 2 fails because it cannot find alluxio-shaded-hadoop-2.8.0.jar.

Step 2: Build the alluxio-underfs-hdfs jar:

[song@k8s-node-02 Alluxio]$ cd underfs/

[song@k8s-node-02 underfs]$ mvn -T 4C clean install -Dmaven.javadoc.skip=true -DskipTests -Dlicense.skip=true -Dcheckstyle.skip=true -Dfindbugs.skip=true -Pufs-hadoop-2 -Dufs.hadoop.version=2.8.0

[song@k8s-node-02 underfs]$ ll hdfs/target/

total 59688

-rw-rw-r-- 1 song song 26432 Jul 8 11:14 alluxio-underfs-hdfs-2.5.0-RC2.jar

-rw-rw-r-- 1 song song 61061280 Jul 8 11:14 alluxio-underfs-hdfs-2.5.0-RC2-jar-with-dependencies.jar

-rw-rw-r-- 1 song song 26533 Jul 8 11:14 alluxio-underfs-hdfs-2.5.0-RC2-sources.jar

drwxrwxr-x 4 song song 37 Jul 8 11:14 classes

drwxrwxr-x 3 song song 25 Jul 8 11:14 generated-sources

drwxrwxr-x 3 song song 30 Jul 8 11:14 generated-test-sources

drwxrwxr-x 2 song song 28 Jul 8 11:14 maven-archiver

drwxrwxr-x 3 song song 35 Jul 8 11:13 maven-status

drwxrwxr-x 3 song song 45 Jul 8 11:14 test-classes

[song@k8s-node-02 underfs]$ cd hdfs/target/

[song@k8s-node-02 target]$ cp alluxio-underfs-hdfs-2.5.0-RC2-jar-with-dependencies.jar alluxio-underfs-hdfs-2.8.0-2.5.0-RC2.jar

[song@k8s-node-02 target]$ ll

total 119320

-rw-rw-r-- 1 song song 26432 Jul 8 11:14 alluxio-underfs-hdfs-2.5.0-RC2.jar

-rw-rw-r-- 1 song song 61061280 Jul 8 11:14 alluxio-underfs-hdfs-2.5.0-RC2-jar-with-dependencies.jar

-rw-rw-r-- 1 song song 26533 Jul 8 11:14 alluxio-underfs-hdfs-2.5.0-RC2-sources.jar

-rw-rw-r-- 1 song song 61061280 Jul 8 11:22 alluxio-underfs-hdfs-2.8.0-2.5.0-RC2.jar

Copy alluxio-underfs-hdfs-2.8.0-2.5.0-RC2.jar into the lib directory of the package built in Part I, and you are done.
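The rename-and-copy step is easy to get wrong when repeating it for several Hadoop versions. Below is a minimal sketch that wraps it in one function. The name `install_ufs_jar` and its argument order are hypothetical; the jar naming pattern follows the file names shown in the listing above.

```shell
# Hypothetical helper: copy the jar-with-dependencies build output into an
# Alluxio lib directory as alluxio-underfs-hdfs-<hadoop version>-<alluxio version>.jar.
install_ufs_jar() {
  target_dir=$1       # e.g. underfs/hdfs/target
  hadoop_version=$2   # e.g. 2.8.0
  alluxio_version=$3  # e.g. 2.5.0-RC2
  lib_dir=$4          # lib directory of the package built in Part I

  src="$target_dir/alluxio-underfs-hdfs-$alluxio_version-jar-with-dependencies.jar"
  dst="$lib_dir/alluxio-underfs-hdfs-$hadoop_version-$alluxio_version.jar"

  if [ ! -f "$src" ]; then
    echo "missing $src; build the underfs module first" >&2
    return 1
  fi
  # Print the destination path on success so the caller can log it.
  cp "$src" "$dst" && echo "$dst"
}
```

For the build above, a call might look like `install_ufs_jar underfs/hdfs/target 2.8.0 2.5.0-RC2 /path/to/alluxio/lib`, where the last argument is a placeholder for your own lib directory.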

III. HA Installation

1. Big data cluster information

NameNode nodes:

dn75, dn76

ZooKeeper nodes:

dn75, dn76, dn78

DataNode/NodeManager nodes:

nn41, dn42-dn55, dn63-dn67, nn71-nn74

2. Alluxio cluster plan

Masters:

Same as the ZooKeeper nodes: dn75, dn76, dn78

Workers:

Same as the DataNode/NodeManager nodes: nn41, dn42-dn55, dn63-dn67, nn71-nn74
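The worker plan is given as host ranges, while the workers file used later needs one host name per line. The sketch below expands the ranges above into that form. `host_range` and `list_workers` are hypothetical helper names, and `seq` is assumed to be available.

```shell
# Hypothetical helper: print <prefix><first> .. <prefix><last>, one host per line.
host_range() {
  prefix=$1
  for n in $(seq "$2" "$3"); do
    printf '%s%s\n' "$prefix" "$n"
  done
}

# Worker hosts from the plan: nn41, dn42-dn55, dn63-dn67, nn71-nn74.
list_workers() {
  echo nn41
  host_range dn 42 55
  host_range dn 63 67
  host_range nn 71 74
}
```

Running `list_workers > conf/workers` from the Alluxio directory would write the 24 worker hosts without typing them by hand.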

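3. Installation

Prerequisite: the hadoop user on dn75 has passwordless SSH login configured and passwordless sudo privileges.

3.1 Upload the installation package

Upload the compiled Alluxio package to dn75, as follows: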

[hadoop@dn75 app]$ ls

alluxio pssh

[hadoop@dn75 app]$ pwd

/home/hadoop/app

3.2 Distribute the installation package

Use pscp to distribute the package from dn75 to the other hosts:

[hadoop@dn75 pssh]$ pssh -h other mkdir /home/hadoop/app

[hadoop@dn75 app]$ pscp -r -h pssh/other alluxio/ /home/hadoop/app/
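The `other` hosts file passed to pssh and pscp is not shown in the original. A reasonable assumption is that it lists every cluster host except dn75, one per line. Under that assumption, the sketch below derives such a list from any stream of host names; `other_hosts` is a hypothetical helper name.

```shell
# Hypothetical helper: read host names on stdin and print each one once,
# in first-seen order, skipping blank lines and the host given as $1.
other_hosts() {
  awk -v self="$1" 'NF && $1 != self && !seen[$1]++ { print $1 }'
}
```

For example, `printf '%s\n' dn75 dn76 dn78 nn41 | other_hosts dn75 > pssh/other` would write dn76, dn78 and nn41 to the file, leaving out the node that already holds the package.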

3.3 Edit the configuration files

Edit alluxio-site.properties:

[hadoop@dn75 conf]$ cp alluxio-site.properties.template alluxio-site.properties

[hadoop@dn75 conf]$ vim alluxio-site.properties

Configure it as follows:

# Common properties

# alluxio.master.hostname=localhost

alluxio.master.mount.table.root.ufs=hdfs://nameservice1/user/hadoop/alluxio

# ha zk properties

alluxio.zookeeper.enabled=true

alluxio.zookeeper.address=dn75:2181,dn76:2181,dn78:2181

alluxio.master.journal.type=UFS

alluxio.master.journal.folder=hdfs://nameservice1/user/hadoop/alluxio/journal

alluxio.zookeeper.session.timeout=120s

alluxio.zookeeper.leader.connection.error.policy=SESSION

# hdfs properties

alluxio.underfs.hdfs.configuration=/etc/hadoop/conf/hdfs-site.xml:/etc/hadoop/conf/core-site.xml

# Worker properties

# alluxio.worker.ramdisk.size=1GB

# alluxio.worker.tieredstore.levels=1

alluxio.worker.tieredstore.level0.alias=MEM

alluxio.worker.tieredstore.level0.dirs.mediumtype=MEM,HDD,HDD

alluxio.worker.tieredstore.level0.dirs.path=/mnt/ramdisk,/data2/alluxio/data,/data3/alluxio/data

alluxio.worker.tieredstore.level0.dirs.quota=50GB,1024GB,1024GB

Configure masters:

[hadoop@dn75 conf]$ vim masters

dn75

dn76

dn78

Configure workers:

[hadoop@dn75 conf]$ vim workers

nn41

dn42

dn43

dn44

dn45

dn46

dn47

dn48

dn49

dn50

dn51

dn52

dn53

dn54

dn55

dn63

dn64

dn65

dn66

dn67

nn71

nn72

nn73

nn74

Configure environment variables (optional):

[hadoop@dn75 conf]$ vim alluxio-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera
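The tiered-store settings in alluxio-site.properties from this section give three comma-separated lists (dirs.path, dirs.mediumtype, dirs.quota) that correspond position by position, so a missing entry is easy to overlook. The sketch below checks that the three lists have the same length before the file is distributed. `prop_count` and `check_tier0` are hypothetical helper names, and requiring equal lengths is a deliberately strict assumption of this sketch.

```shell
# Hypothetical helper: print how many comma-separated entries property $2 has
# in properties file $1 (0 if the property is absent or commented out).
prop_count() {
  awk -F= -v key="$2" '$1 == key { n = split($2, parts, ",") } END { print n + 0 }' "$1"
}

# Hypothetical helper: verify that the level0 path, mediumtype and quota lists line up.
check_tier0() {
  base=alluxio.worker.tieredstore.level0.dirs
  paths=$(prop_count "$1" "$base.path")
  types=$(prop_count "$1" "$base.mediumtype")
  quotas=$(prop_count "$1" "$base.quota")
  if [ "$paths" -gt 0 ] && [ "$paths" -eq "$types" ] && [ "$paths" -eq "$quotas" ]; then
    echo "tier 0: $paths directories, lists aligned"
  else
    echo "tier 0 mismatch: path=$paths mediumtype=$types quota=$quotas" >&2
    return 1
  fi
}
```

Running `check_tier0 conf/alluxio-site.properties` against the configuration shown above should report three aligned directories.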

3.4 Distribute the configuration files to all nodes

[hadoop@dn75 alluxio]$ ./bin/alluxio copyDir conf/

RSYNC'ing /home/hadoop/app/alluxio/conf to masters...

dn75

dn76

dn78

RSYNC'ing /home/hadoop/app/alluxio/conf to workers...

nn41

dn42

dn43

dn44

dn45

dn46

dn47

dn48

dn49

dn50

dn51

dn52

dn53

dn54

dn55

dn63

dn64

dn65

dn66

dn67

nn71

nn72

nn73

nn74

3.5 Set alluxio.master.hostname on each master node

[hadoop@dn75 alluxio]$ cat conf/alluxio-site.properties

# Common properties

alluxio.master.hostname=dn75

[hadoop@dn76 alluxio]$ cat conf/alluxio-site.properties

# Common properties

alluxio.master.hostname=dn76

[hadoop@dn78 alluxio]$ cat conf/alluxio-site.properties

# Common properties

alluxio.master.hostname=dn78
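Because copyDir pushes the same alluxio-site.properties to every node, the hostname line has to be adjusted on each master afterwards. The sketch below is one way to make that edit repeatable. `set_master_hostname` is a hypothetical helper, and it appends the property at the end of the file rather than under the "# Common properties" comment.

```shell
# Hypothetical helper: set alluxio.master.hostname in properties file $1 to $2.
set_master_hostname() {
  file=$1
  host=$2
  tmp=$(mktemp) || return 1
  # Drop any existing hostname line, commented out or not; keep everything else.
  grep -v '^#\{0,1\} *alluxio\.master\.hostname=' "$file" > "$tmp" || true
  echo "alluxio.master.hostname=$host" >> "$tmp"
  # Write back in place so the original file's permissions are preserved.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

On dn76, for instance, `set_master_hostname conf/alluxio-site.properties dn76` would yield the setting shown above. Running it again with the same or a different host leaves exactly one hostname line.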

4. Startup

4.1 Format Alluxio

[hadoop@dn75 alluxio]$ ./bin/alluxio format

4.2 Start Alluxio

[hadoop@dn75 alluxio]$ ./bin/alluxio-start.sh all SudoMount

IV. Mounting HDFS Directories

1. Mounting the root directory

alluxio.master.mount.table.root.ufs=hdfs://<NAMENODE>:<PORT>

alluxio.master.mount.table.root.option.alluxio.underfs.version=<HADOOP VERSION>

2. Mounting a subdirectory

$ ./bin/alluxio fs mount --option alluxio.underfs.version=2.8.0 /mnt/hdfs27 hdfs://namenode2:8020/
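When several HDFS clusters with different versions need to be mounted, it can be safer to generate the mount commands from a small table and review them before running anything. The sketch below only prints commands in the same form as the one above. `print_mount_cmds` and the three-column input format are hypothetical conventions of this example, not part of Alluxio.

```shell
# Hypothetical helper: read "<alluxio path> <hdfs uri> <hdfs version>" lines
# from stdin and print one mount command per line, without executing anything.
print_mount_cmds() {
  while read -r alluxio_path hdfs_uri hdfs_version; do
    # Skip blank lines and comment lines in the input table.
    case "$alluxio_path" in ''|'#'*) continue ;; esac
    printf './bin/alluxio fs mount --option alluxio.underfs.version=%s %s %s\n' \
      "$hdfs_version" "$alluxio_path" "$hdfs_uri"
  done
}
```

Given a file with one mount per line, such as `/mnt/hdfs27 hdfs://namenode2:8020/ 2.8.0`, running `print_mount_cmds < mounts.txt` prints the commands for review. The file name `mounts.txt` is a placeholder.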