Building a Crawler Framework with Spring Boot and WebMagic

Reading time: about 7 minutes.

Source: www.jianshu.com/p/cfead4b3e34e

The source code in this article can serve as scaffolding for a Java crawler project.

1. Add the Maven dependencies

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>hyzx</groupId>
        <artifactId>qbasic-crawler</artifactId>
        <version>1.0.0</version>
        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>1.5.21.RELEASE</version>
            <relativePath/> <!-- lookup parent from repository -->
        </parent>
        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <maven.test.skip>true</maven.test.skip>
            <java.version>1.8</java.version>
            <maven.compiler.plugin.version>3.8.1</maven.compiler.plugin.version>
            <maven.resources.plugin.version>3.1.0</maven.resources.plugin.version>
            <mysql.connector.version>5.1.47</mysql.connector.version>
            <druid.spring.boot.starter.version>1.1.17</druid.spring.boot.starter.version>
            <mybatis.spring.boot.starter.version>1.3.4</mybatis.spring.boot.starter.version>
            <fastjson.version>1.2.58</fastjson.version>
            <commons.lang3.version>3.9</commons.lang3.version>
            <joda.time.version>2.10.2</joda.time.version>
            <webmagic.core.version>0.7.3</webmagic.core.version>
        </properties>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-devtools</artifactId>
                <scope>runtime</scope>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-configuration-processor</artifactId>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>mysql</groupId>
                <artifactId>mysql-connector-java</artifactId>
                <version>${mysql.connector.version}</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>druid-spring-boot-starter</artifactId>
                <version>${druid.spring.boot.starter.version}</version>
            </dependency>
            <dependency>
                <groupId>org.mybatis.spring.boot</groupId>
                <artifactId>mybatis-spring-boot-starter</artifactId>
                <version>${mybatis.spring.boot.starter.version}</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>fastjson</artifactId>
                <version>${fastjson.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.commons</groupId>
                <artifactId>commons-lang3</artifactId>
                <version>${commons.lang3.version}</version>
            </dependency>
            <dependency>
                <groupId>joda-time</groupId>
                <artifactId>joda-time</artifactId>
                <version>${joda.time.version}</version>
            </dependency>
            <dependency>
                <groupId>us.codecraft</groupId>
                <artifactId>webmagic-core</artifactId>
                <version>${webmagic.core.version}</version>
                <exclusions>
                    <exclusion>
                        <groupId>org.slf4j</groupId>
                        <artifactId>slf4j-log4j12</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
        </dependencies>
        <build>
            <plugins>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <version>${maven.compiler.plugin.version}</version>
                    <configuration>
                        <source>${java.version}</source>
                        <target>${java.version}</target>
                        <encoding>${project.build.sourceEncoding}</encoding>
                    </configuration>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-resources-plugin</artifactId>
                    <version>${maven.resources.plugin.version}</version>
                    <configuration>
                        <encoding>${project.build.sourceEncoding}</encoding>
                    </configuration>
                </plugin>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                    <configuration>
                        <fork>true</fork>
                        <addResources>true</addResources>
                    </configuration>
                    <executions>
                        <execution>
                            <goals>
                                <goal>repackage</goal>
                            </goals>
                        </execution>
                    </executions>
                </plugin>
            </plugins>
        </build>
        <repositories>
            <repository>
                <id>public</id>
                <name>aliyun nexus</name>
                <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
                <releases>
                    <enabled>true</enabled>
                </releases>
            </repository>
        </repositories>
        <pluginRepositories>
            <pluginRepository>
                <id>public</id>
                <name>aliyun nexus</name>
                <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
                <releases>
                    <enabled>true</enabled>
                </releases>
                <snapshots>
                    <enabled>false</enabled>
                </snapshots>
            </pluginRepository>
        </pluginRepositories>
    </project>

2. Project configuration file application.properties

    # MySQL data source configuration
    spring.datasource.name=mysql
    spring.datasource.type=com.alibaba.druid.pool.DruidDataSource
    spring.datasource.driver-class-name=com.mysql.jdbc.Driver
    spring.datasource.url=jdbc:mysql://192.168.0.63:3306/gjhzjl?useUnicode=true&characterEncoding=utf8&useSSL=false&allowMultiQueries=true
    spring.datasource.username=root
    spring.datasource.password=root
    # Druid connection pool configuration
    spring.datasource.druid.initial-size=5
    spring.datasource.druid.min-idle=5
    spring.datasource.druid.max-active=10
    spring.datasource.druid.max-wait=60000
    spring.datasource.druid.validation-query=SELECT 1 FROM DUAL
    spring.datasource.druid.test-on-borrow=false
    spring.datasource.druid.test-on-return=false
    spring.datasource.druid.test-while-idle=true
    spring.datasource.druid.time-between-eviction-runs-millis=60000
    spring.datasource.druid.min-evictable-idle-time-millis=300000
    spring.datasource.druid.max-evictable-idle-time-millis=600000
    # MyBatis configuration
    mybatis.mapperLocations=classpath:mapper/**/*.xml

3. Database table structure

    CREATE TABLE `cms_content` (
      `contentId` varchar(40) NOT NULL COMMENT 'Content ID',
      `title` varchar(150) NOT NULL COMMENT 'Title',
      `content` longtext COMMENT 'Article content',
      `releaseDate` datetime NOT NULL COMMENT 'Release date',
      PRIMARY KEY (`contentId`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='CMS content table';

4. Entity class

    // Package taken from the parameterType used in CrawlerMapper.xml.
    package com.hyzx.qbasic.model;

    import java.util.Date;

    // Maps one row of the cms_content table.
    public class CmsContentPO {

        private String contentId;
        private String title;
        private String content;
        private Date releaseDate;

        public String getContentId() {
            return contentId;
        }

        public void setContentId(String contentId) {
            this.contentId = contentId;
        }

        public String getTitle() {
            return title;
        }

        public void setTitle(String title) {
            this.title = title;
        }

        public String getContent() {
            return content;
        }

        public void setContent(String content) {
            this.content = content;
        }

        public Date getReleaseDate() {
            return releaseDate;
        }

        public void setReleaseDate(Date releaseDate) {
            this.releaseDate = releaseDate;
        }
    }

5. Mapper interface

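    // Package taken from the namespace declared in CrawlerMapper.xml.
    package com.hyzx.qbasic.dao;

    import com.hyzx.qbasic.model.CmsContentPO;

    public interface CrawlerMapper {

        // Inserts one row into cms_content and returns the number of affected rows.
        int addCmsContent(CmsContentPO record);
    }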

6. The CrawlerMapper.xml file

    <?xml version="1.0" encoding="UTF-8"?>
    <!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
    <mapper namespace="com.hyzx.qbasic.dao.CrawlerMapper">
        <insert id="addCmsContent" parameterType="com.hyzx.qbasic.model.CmsContentPO">
            insert into cms_content (contentId,
                                     title,
                                     releaseDate,
                                     content)
            values (#{contentId,jdbcType=VARCHAR},
                    #{title,jdbcType=VARCHAR},
                    #{releaseDate,jdbcType=TIMESTAMP},
                    #{content,jdbcType=LONGVARCHAR})
        </insert>
    </mapper>

7. Zhihu page processor class ZhihuPageProcessor

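    import org.springframework.stereotype.Component;
    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.processor.PageProcessor;

    @Component
    public class ZhihuPageProcessor implements PageProcessor {

        // Retry a failed download up to 3 times and pause 1 second between requests.
        private Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

        @Override
        public void process(Page page) {
            // Queue every answer link found on the current page.
            page.addTargetRequests(page.getHtml().links().regex("https://www\\.zhihu\\.com/question/\\d+/answer/\\d+.*").all());
            // Extract the question title and the answer body.
            page.putField("title", page.getHtml().xpath("//h1[@class='QuestionHeader-title']/text()").toString());
            page.putField("answer", page.getHtml().xpath("//div[@class='QuestionAnswer-content']/tidyText()").toString());
            if (page.getResultItems().get("title") == null) {
                // A list page has no title: skip it so the pipeline does no further processing.
                page.setSkip(true);
            }
        }

        @Override
        public Site getSite() {
            return site;
        }
    }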
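
The link filter and the "no title means list page" rule can be tried out without WebMagic. The sketch below is only an approximation under stated assumptions: it uses plain `java.util.regex` and the JDK's XPath engine rather than WebMagic's own HTML-tolerant selectors, the class name `ZhihuSelectorSketch` and the sample markup are hypothetical, and the markup must be well-formed XML, which real Zhihu pages are not.

```java
import java.io.StringReader;
import java.util.regex.Pattern;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;

// Hypothetical helper, not part of the original project.
class ZhihuSelectorSketch {

    // Same pattern string as the one passed to regex(...) in ZhihuPageProcessor.
    static final Pattern ANSWER_URL =
            Pattern.compile("https://www\\.zhihu\\.com/question/\\d+/answer/\\d+.*");

    // True when the whole URL has the question/<id>/answer/<id> shape.
    static boolean isAnswerUrl(String url) {
        return ANSWER_URL.matcher(url).matches();
    }

    // Evaluates the title XPath on well-formed markup; returns null when nothing matches.
    static String extractTitle(String xhtml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xhtml)));
            String title = XPathFactory.newInstance().newXPath()
                    .evaluate("//h1[@class='QuestionHeader-title']/text()", doc);
            // The JDK returns an empty string for "no match"; map it to null
            // so the caller can apply the same null check as the processor.
            return title.isEmpty() ? null : title;
        } catch (Exception ex) {
            throw new IllegalStateException("could not parse sample markup", ex);
        }
    }

    public static void main(String[] args) {
        System.out.println(isAnswerUrl("https://www.zhihu.com/question/123/answer/456"));
        System.out.println(isAnswerUrl("https://www.zhihu.com/explore"));
        String answerPage =
                "<html><body><h1 class=\"QuestionHeader-title\">Sample question?</h1></body></html>";
        String listPage = "<html><body><p>list of links</p></body></html>";
        System.out.println(extractTitle(answerPage));
        System.out.println(extractTitle(listPage) == null ? "skip page" : "process page");
    }
}
```

The start URL `https://www.zhihu.com/explore` does not match the pattern and has no title node, so it only feeds links into the queue and is skipped by the pipeline.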

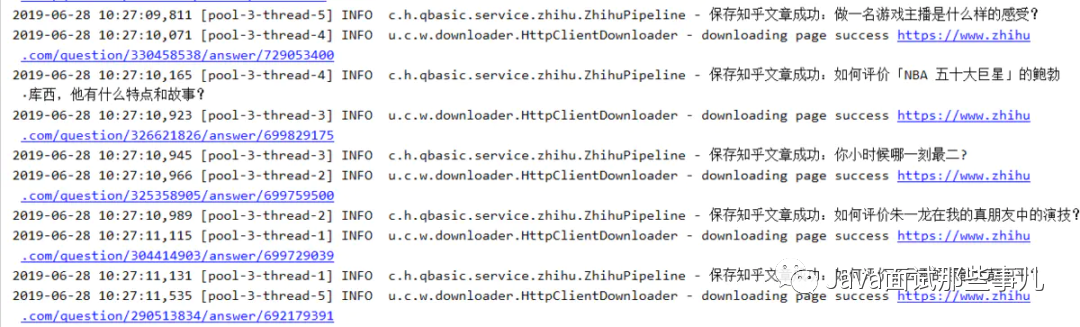

8. Zhihu data pipeline class ZhihuPipeline

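    import java.util.Date;
    import java.util.UUID;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Component;
    import us.codecraft.webmagic.ResultItems;
    import us.codecraft.webmagic.Task;
    import us.codecraft.webmagic.pipeline.Pipeline;

    import com.hyzx.qbasic.dao.CrawlerMapper;
    import com.hyzx.qbasic.model.CmsContentPO;

    @Component
    public class ZhihuPipeline implements Pipeline {

        private static final Logger LOGGER = LoggerFactory.getLogger(ZhihuPipeline.class);

        @Autowired
        private CrawlerMapper crawlerMapper;

        @Override
        public void process(ResultItems resultItems, Task task) {
            // Fields stored by ZhihuPageProcessor.
            String title = resultItems.get("title");
            String answer = resultItems.get("answer");

            CmsContentPO contentPO = new CmsContentPO();
            contentPO.setContentId(UUID.randomUUID().toString());
            contentPO.setTitle(title);
            contentPO.setReleaseDate(new Date());
            contentPO.setContent(answer);

            try {
                boolean success = crawlerMapper.addCmsContent(contentPO) > 0;
                // Report success only when a row was actually inserted.
                if (success) {
                    LOGGER.info("Saved Zhihu article: {}", title);
                } else {
                    LOGGER.warn("Zhihu article was not saved: {}", title);
                }
            } catch (Exception ex) {
                LOGGER.error("Failed to save Zhihu article", ex);
            }
        }
    }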
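
The pipeline uses `UUID.randomUUID()` for `contentId`, so an answer that is crawled again on a later run gets a new ID and is stored as a second row. The sketch below shows that the random ID fits the `varchar(40)` column and, as one possible alternative that is not in the original code, derives a repeatable ID from the page URL. The class `ContentIdSketch` and the method `stableId` are hypothetical names, and the sketch assumes the page URL is available inside the pipeline.

```java
import java.nio.charset.StandardCharsets;
import java.util.UUID;

// Hypothetical helper, not part of the original project.
class ContentIdSketch {

    // What the pipeline does today: a fresh random ID per saved row.
    static String randomId() {
        return UUID.randomUUID().toString();
    }

    // Assumed alternative: a name-based UUID, so the same URL always maps to the same ID.
    static String stableId(String url) {
        return UUID.nameUUIDFromBytes(url.getBytes(StandardCharsets.UTF_8)).toString();
    }

    public static void main(String[] args) {
        String url = "https://www.zhihu.com/question/123/answer/456";
        // A UUID string is 36 characters long, which fits varchar(40).
        System.out.println(randomId().length());
        // Two calls with the same URL give the same ID.
        System.out.println(stableId(url).equals(stableId(url)));
    }
}
```

With a repeatable ID, a second insert of the same answer would violate the primary key on `contentId`; the existing `try/catch` in the pipeline would log that as a failed save instead of storing a duplicate.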

9. Zhihu crawler task class ZhihuTask

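    import java.util.concurrent.Executors;
    import java.util.concurrent.ScheduledExecutorService;
    import java.util.concurrent.TimeUnit;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Component;
    import us.codecraft.webmagic.Spider;

    @Component
    public class ZhihuTask {

        private static final Logger LOGGER = LoggerFactory.getLogger(ZhihuTask.class);

        @Autowired
        private ZhihuPipeline zhihuPipeline;

        @Autowired
        private ZhihuPageProcessor zhihuPageProcessor;

        private ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();

        public void crawl() {
            // Scheduled task: start a crawl immediately, then wait 10 minutes after each run before the next.
            timer.scheduleWithFixedDelay(() -> {
                Thread.currentThread().setName("zhihuCrawlerThread");

                try {
                    Spider.create(zhihuPageProcessor)
                            // Start crawling from https://www.zhihu.com/explore
                            .addUrl("https://www.zhihu.com/explore")
                            // Store the extracted data in the database
                            .addPipeline(zhihuPipeline)
                            // Crawl with 2 threads
                            .thread(2)
                            // Start the spider asynchronously
                            .start();
                } catch (Exception ex) {
                    LOGGER.error("Scheduled Zhihu crawl thread failed", ex);
                }
            }, 0, 10, TimeUnit.MINUTES);
        }
    }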
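
The `try/catch` inside the scheduled lambda matters: `scheduleWithFixedDelay` suppresses all later executions once a run throws. The sketch below illustrates this with the JDK alone, using a task that always fails and a delay of milliseconds instead of 10 minutes. The class `FixedDelaySketch` and its parameters are hypothetical and exist only for this illustration.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo, not part of the original project.
class FixedDelaySketch {

    // Schedules a task that fails on every run and returns how many runs started
    // before `runs` was reached or a 1-second timeout expired.
    static int countRuns(int runs, long delayMillis, boolean swallowErrors) {
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        AtomicInteger started = new AtomicInteger();
        CountDownLatch remaining = new CountDownLatch(runs);
        try {
            timer.scheduleWithFixedDelay(() -> {
                if (remaining.getCount() == 0) {
                    return; // target reached; ignore any late run
                }
                started.incrementAndGet();
                remaining.countDown();
                try {
                    throw new IllegalStateException("simulated crawl failure");
                } catch (RuntimeException ex) {
                    if (!swallowErrors) {
                        throw ex; // escapes the task, so later runs are suppressed
                    }
                    // Swallowed here, as the try/catch in ZhihuTask does after logging.
                }
            }, 0, delayMillis, TimeUnit.MILLISECONDS);
            remaining.await(1, TimeUnit.SECONDS);
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
        } finally {
            timer.shutdownNow();
        }
        return started.get();
    }

    public static void main(String[] args) {
        System.out.println("runs with catch:    " + countRuns(3, 20, true));
        System.out.println("runs without catch: " + countRuns(3, 20, false));
    }
}
```

Because the delay is measured from the end of one run to the start of the next, and `Spider.start()` returns immediately, a new spider is created roughly every 10 minutes whether or not the previous crawl has finished.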

10. Spring Boot application startup class

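    import org.mybatis.spring.annotation.MapperScan;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.CommandLineRunner;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    // Registers the MyBatis mapper interfaces found in the dao package.
    @MapperScan(basePackages = "com.hyzx.qbasic.dao")
    public class Application implements CommandLineRunner {

        @Autowired
        private ZhihuTask zhihuTask;

        public static void main(String[] args) {
            SpringApplication.run(Application.class, args);
        }

        @Override
        public void run(String... strings) throws Exception {
            // Start crawling Zhihu once the application context is ready.
            zhihuTask.crawl();
        }
    }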
