揭秘容器启动缓慢的罪魁祸首

随着dockershim进入淘汰时段,Containerd项目的使用迈入议程,在替换期间,观测到容器启动异常,通过本文,让我们深入containerd底层运作,排查问题根源。

随着dockershim进入淘汰时段,Containerd项目的使用迈入议程,在替换期间,观测到容器启动异常,通过本文,让我们深入containerd底层运作,排查问题根源。

编辑|zouyee

近期内部进行Containerd替换dockershim测试,我们观测到业务容器在启动后需要很长时间才能正常运行。其中

init容器在一秒内完成,但是容器有时需要超过一分钟才开始执行。

查看 kubelet 日志,发现当节点刚启动时,PLEG的Relisting方法(通常每秒运行一次)需要超过30秒才能完成。但是,过来了几分钟后,这个现象就消失了,最终"Relisting"方法按预期的每秒一次的频率运行。

这个观察结果表明,在节点刚启动时可能存在一些问题,导致容器执行延迟,但随着节点运行,这个问题会自动消失,容器最终都正常运行。

kubelet_init[1680]: I0602 05:48:06.350695 1679 generic.go:191] "GenericPLEG: Relisting"

kubelet_init[1680]: I0602 05:48:07.352436 1679 generic.go:191] "GenericPLEG: Relisting"

kubelet_init[1680]: I0602 05:48:49.669827 1679 generic.go:191] "GenericPLEG: Relisting"

kubelet_init[1680]: I0602 05:48:50.672822 1679 generic.go:191] "GenericPLEG: Relisting"

kubelet_init[1680]: I0602 05:48:51.676857 1679 generic.go:191] "GenericPLEG: Relisting"

其中containerd的配置config.toml如下所示:

version = 2

root = "/var/lib/containerd"state = "/run/containerd"

[grpc]

address = "/run/containerd/containerd.sock"

[plugins."io.containerd.grpc.v1.cri".containerd]

default_runtime_name = "runc"

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d:/etc/docker/certs.d"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

runtime_type = "io.containerd.runc.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri".cni]

bin_dir = "/opt/cni/bin"conf_dir = "/etc/cni/net.d"

NOTE:

Containerd:1.6.21,K8s:1.19, Kernel:5.15.0

技术背景

dockershim与CRI

2022年05月,

Kubernetes 1.24正式发布

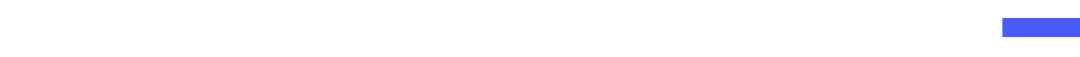

,比较引人注意是在这个版本中正式将dockershim 组件从 kubelet 中移除。从这个版本开始,用户使用Kubelet时可以优先选择containerd 或 CRI-O作为容器运行时,其中Containerd架构演变如下所示,如需了解更多,参看Containerd深度剖析-runtime篇

PLEG

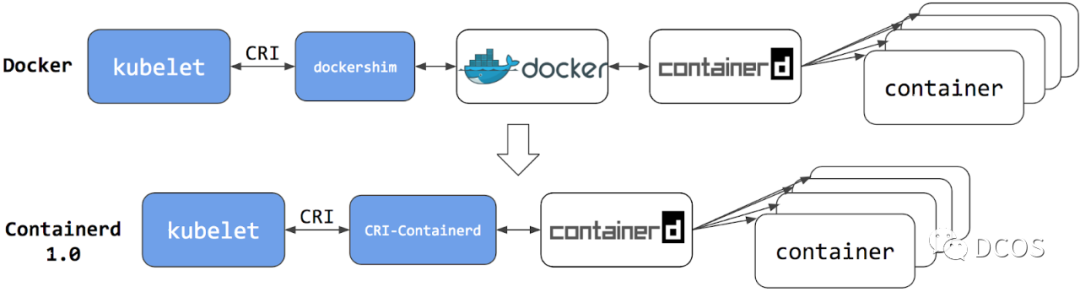

PLEG (Pod Lifecycle Event Generator),即Pod 生命周期事件生成器。在 Kubernetes 中(分布式架构),每个节点上都运行着一个守护进程 Kubelet 来管理节点上的容器,适时调整容器的实际状态以匹配 spec 中定义的状态(最终一致性)。具体来说,Kubelet 需要对pod两个地方的变更做出及时的操作:

Pod spec 中定义的状态

容器运行时的状态

对于 Pod来说,Kubelet 会从多个数据来源(api、file以及http) watch Pod spec 中的变化。对于容器来说,Kubelet 会定期轮询容器运行时,以获取所有容器的最新状态。随着 Pod 和容器数量的增加,轮询会产生较大开销,带来的周期性大量并发请求会导致较高的 CPU 使用率峰值,降低节点性能,从而降低节点的可靠性。为了降低 Pod 的管理开销,提升 Kubelet 的性能和可扩展性,引入了 PLEG,改进了之前的工作方式:

减少空闲期间的不必要工作(例如 Pod 的定义和容器的状态没有发生更改)。

减少获取容器状态的并发请求数量。

注意:

为了进一步降低损耗,社区推出了基于event实现的PLEG,当然也需要CRI运行时支持

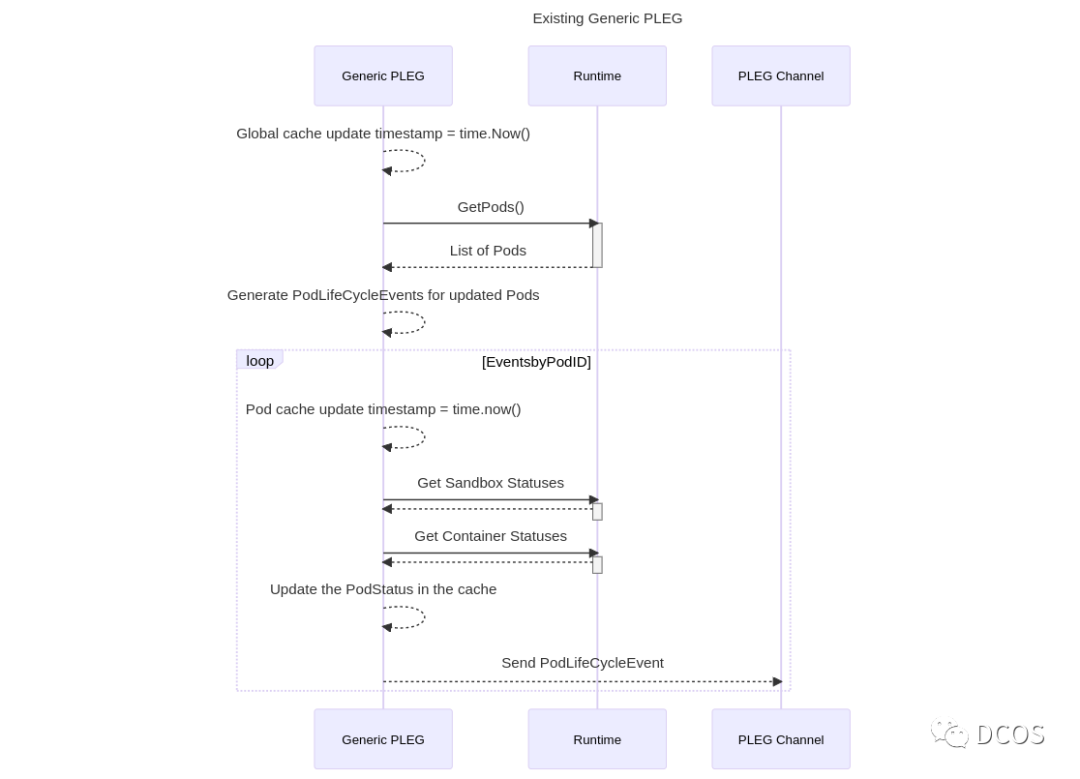

ImagePull

ctr image pull

对应的步骤如下:

resolve用户需要下载的镜像

从registry pull镜像,把镜像层内容和config保存进content服务,把镜像相关的元数据保存进images元数据服务

unpack进snapshot服务

注意

:这里的content、images等服务都是指containerd提供的gRPC服务,在解压每个layer时,containerd会临时挂载/卸载所有父快照。

问题排除

基于上述的技术背景,可以知道

GenericPLEG:Relisting

调用了 containerd 的 CRI(Container Runtime Interface)来获取所有正在运行的容器列表。

// relist queries the container runtime for list of pods/containers, compare

// with the internal pods/containers, and generates events accordingly.

func (g *GenericPLEG) relist() {

klog.V(5).Infof("GenericPLEG: Relisting")

if lastRelistTime := g.getRelistTime(); !lastRelistTime.IsZero() {

metrics.PLEGRelistInterval.Observe(metrics.SinceInSeconds(lastRelistTime))

}

timestamp := g.clock.Now()

defer func() {

metrics.PLEGRelistDuration.Observe(metrics.SinceInSeconds(timestamp))

}()

// Get all the pods.

podList, err := g.runtime.GetPods(true)

那么相应的,我们可以排查containerd日志,其日志表明containerd 本身或者 shim 在停止容器时遇到了问题。

containerd[2206]: {"error":"failed to stop container: failed to delete task: context deadline exceeded:

unknown","level":"error","msg":"failed to handle container TaskExit event \u0026TaskExit{ContainerID:8f71eadd772ffde7f9537998844aa3f7a8a4f15ec182a5943ef0d60f242b1929,ID:8f71eadd772ffde7f9537998844aa3f7a8a4f15ec182a5943ef0d60f242b1929,Pid:3658,ExitStatus:0,ExitedAt:2023-06-15 06:39:36.566238047 +0000 UTC,XXX_unrecognized:[],}","time":"2023-06-15T06:39:46.568049942Z"}

因此获取在 goroutine dump日志,从中可以发现一个 goroutine 正在等待 Delete 调用。

goroutine 1654 [select]:

github.com/containerd/ttrpc.(*Client).dispatch(0xc001ae1780, {0x56190174ac08?, 0xc000ae0180}, 0xc000ae05a0, 0xc0001183c0)

/go/src/github.com/containerd/containerd/vendor/github.com/containerd/ttrpc/client.go:163 +0x2ad

github.com/containerd/ttrpc.defaultClientInterceptor({0x56190174ac08?, 0xc000ae0180?}, 0x5619023eb940?, 0xc000f982a0?, 0x6993c8a9f?, 0x5619023eb940?)

/go/src/github.com/containerd/containerd/vendor/github.com/containerd/ttrpc/interceptor.go:49 +0x26

github.com/containerd/ttrpc.(*Client).Call(0xc001ae1780, {0x56190174ac08, 0xc000ae0180}, {0x561900ea8b7f, 0x17}, {0x561900e8851e, 0x6}, {0x56190167d8a0?, 0xc000e6a880?}, {0x56190167d9e0, ...})

/go/src/github.com/containerd/containerd/vendor/github.com/containerd/ttrpc/client.go:132 +0x3ac

github.com/containerd/containerd/runtime/v2/task.(*taskClient).Delete(0xc00011a578, {0x56190174ac08, 0xc000ae0180}, 0x5618fff5830f?)

/go/src/github.com/containerd/containerd/runtime/v2/task/shim.pb.go:3609 +0x98

github.com/containerd/containerd/runtime/v2.(*shimTask).delete(0xc00165e870, {0x56190174ac08, 0xc000ae0180}, 0xc0009ab988)

/go/src/github.com/containerd/containerd/runtime/v2/shim.go:275 +0xc2

github.com/containerd/containerd/runtime/v2.(*TaskManager).Delete(0xc00040a0f8, {0x56190174ac08, 0xc000ae0180}, {0xc0013aaa40?, 0x40?})

/go/src/github.com/containerd/containerd/runtime/v2/manager.go:419 +0x8c

github.com/containerd/containerd/services/tasks.(*local).Delete(0xc00070a5a0, {0x56190174ac08, 0xc000ae0180}, 0xc000d7cd50, {0x561901620fe0?, 0xc0009abab8?, 0x5618ffbcf13f?})

/go/src/github.com/containerd/containerd/services/tasks/local.go:291 +0x11a

github.com/containerd/containerd.(*task).Delete(0xc0006ba440, {0x56190174ac08, 0xc000ae0180}, {0xc000d08570, 0x2, 0x56190174b1f0?})

/go/src/github.com/containerd/containerd/task.go:332 +0x355

github.com/containerd/containerd/pkg/cri/server.handleContainerExit({0x56190174ac08, 0xc000ae0180}, 0xc000ae0120, {{{0xc0013aaa40, 0x40}, {0xc000dfd7a0, 0x52}, {0xc001a8e240, 0x40}, 0xc001240380, ...}, ...})

/go/src/github.com/containerd/containerd/pkg/cri/server/events.go:387 +0x202

github.com/containerd/containerd/pkg/cri/server.(*eventMonitor).startContainerExitMonitor.func1.1(0xc000089240, 0xc000ae0120)

/go/src/github.com/containerd/containerd/pkg/cri/server/events.go:190 +0x310

github.com/containerd/containerd/pkg/cri/server.(*eventMonitor).startContainerExitMonitor.func1()

/go/src/github.com/containerd/containerd/pkg/cri/server/events.go:198 +0x37f

created by github.com/containerd/containerd/pkg/cri/server.(*eventMonitor).startContainerExitMonitor

/go/src/github.com/containerd/containerd/pkg/cri/server/events.go:162 +0x12b

在

containerd-shim-runc-v2

中可以发现一个 goroutine 挂在了

umount

系统调用上

goroutine 104 [syscall]:

syscall.Syscall(0x7c?, 0xc000161800?, 0xc0000c2200?, 0x7d?)

/usr/local/go/src/syscall/syscall_linux.go:68 +0x27

golang.org/x/sys/unix.Unmount({0xc0000c2200?, 0x0?}, 0xc00029c448?)

/go/src/github.com/containerd/containerd/vendor/golang.org/x/sys/unix/zsyscall_linux.go:1680 +0x7d

github.com/containerd/containerd/mount.unmount({0xc0000c2200, 0x7c}, 0x9ed280?)

/go/src/github.com/containerd/containerd/mount/mount_linux.go:155 +0x8c

github.com/containerd/containerd/mount.UnmountAll({0xc0000c2200, 0x7c}, 0xc000065080?)

/go/src/github.com/containerd/containerd/mount/mount_linux.go:185 +0x8c

github.com/containerd/containerd/pkg/process.(*Init).delete(0xc0000d2c60, {0x9f1460, 0xc000065080})

/go/src/github.com/containerd/containerd/pkg/process/init.go:312 +0x13a

github.com/containerd/containerd/pkg/process.(*stoppedState).Delete(0xc000012ab8, {0x9f1460?, 0xc000065080?})

/go/src/github.com/containerd/containerd/pkg/process/init_state.go:396 +0x2e

github.com/containerd/containerd/pkg/process.(*Init).Delete(0xc00002d180?, {0x9f1460?, 0xc000065080?})

/go/src/github.com/containerd/containerd/pkg/process/init.go:288 +0xb6

github.com/containerd/containerd/runtime/v2/runc.(*Container).Delete(0xc000132380?, {0x9f1460, 0xc000065080}, 0xc0003071c0)

/go/src/github.com/containerd/containerd/runtime/v2/runc/container.go:390 +0x62

github.com/containerd/containerd/runtime/v2/runc/task.(*service).Delete(0xc000132380, {0x9f1460, 0xc000065080}, 0xc0003071c0)

/go/src/github.com/containerd/containerd/runtime/v2/runc/task/service.go:216 +0x75

github.com/containerd/containerd/runtime/v2/task.RegisterTaskService.func4({0x9f1460, 0xc000065080}, 0xc00002ee00)

/go/src/github.com/containerd/containerd/runtime/v2/task/shim.pb.go:3477 +0x98

github.com/containerd/ttrpc.defaultServerInterceptor({0x9f1460?, 0xc000065080?}, 0x17?, 0xc000134760?, 0x6?)

/go/src/github.com/containerd/containerd/vendor/github.com/containerd/ttrpc/interceptor.go:45 +0x26

github.com/containerd/ttrpc.(*serviceSet).dispatch(0xc000118650, {0x9f1460, 0xc000065080}, {0xc00002aba0, 0x17}, {0xc00027e22a, 0x6}, {0xc000180280, 0x42, 0x50})

/go/src/github.com/containerd/containerd/vendor/github.com/containerd/ttrpc/services.go:95 +0x1be

github.com/containerd/ttrpc.(*serviceSet).call(0xd0e330?, {0x9f1460?, 0xc000065080?}, {0xc00002aba0?, 0x84eac0?}, {0xc00027e22a?, 0xc000308000?}, {0xc000180280, 0x42, 0x50})

/go/src/github.com/containerd/containerd/vendor/github.com/containerd/ttrpc/services.go:64 +0x71

github.com/containerd/ttrpc.(*serverConn).run.func2(0x1b)

/go/src/github.com/containerd/containerd/vendor/github.com/containerd/ttrpc/server.go:439 +0xe5

created by github.com/containerd/ttrpc.(*serverConn).run

/go/src/github.com/containerd/containerd/vendor/github.com/containerd/ttrpc/server.go:435 +0x6b1

这时候,我们的排查思路可以回到containerd.log,通过查看containerd.log,可以发现

UpdateContainerResources

请求,在等待 bbolt 锁:

goroutine 1723 [semacquire]:

sync.runtime_SemacquireMutex(0xc001a78300?, 0x9?, 0xc001500c00?)

/usr/local/go/src/runtime/sema.go:77 +0x25

sync.(*Mutex).lockSlow(0xc0002f9178)

/usr/local/go/src/sync/mutex.go:171 +0x165

sync.(*Mutex).Lock(...)

/usr/local/go/src/sync/mutex.go:90

go.etcd.io/bbolt.(*DB).beginRWTx(0xc0002f8fc0)

/go/src/github.com/containerd/containerd/vendor/go.etcd.io/bbolt/db.go:640 +0x77

go.etcd.io/bbolt.(*DB).Begin(0xc001739a70?, 0x87?)

/go/src/github.com/containerd/containerd/vendor/go.etcd.io/bbolt/db.go:589 +0x1d

go.etcd.io/bbolt.(*DB).Update(0x20?, 0xc000b44be0)

/go/src/github.com/containerd/containerd/vendor/go.etcd.io/bbolt/db.go:725 +0x3e

github.com/containerd/containerd/metadata.(*DB).Update(0xc0001e92d0, 0x56190174ac40?)

/go/src/github.com/containerd/containerd/metadata/db.go:246 +0x9e

github.com/containerd/containerd/services/containers.(*local).withStoreUpdate(0xc0003e8840, {0x56190174ac40?, 0xc0017390b0?}, 0x0?)

/go/src/github.com/containerd/containerd/services/containers/local.go:212 +0x3c

github.com/containerd/containerd/services/containers.(*local).Update(0xc0003e8840, {0x56190174ac40, 0xc0017390b0}, 0xc000eb0540, {0xedc1ca928?, 0x0?, 0xc0017399b0?})

/go/src/github.com/containerd/containerd/services/containers/local.go:156 +0x24e

github.com/containerd/containerd.(*remoteContainers).Update(_, {_, _}, {{0xc00136b280, 0x40}, 0xc0017398f0, {0xc0016cc280, 0x96}, {{0xc00145a9f0, 0x15}, ...}, ...}, ...)

/go/src/github.com/containerd/containerd/containerstore.go:129 +0x2ce

github.com/containerd/containerd.(*container).Update(0xc0006e4a80, {0x56190174ac40, 0xc0017390b0}, {0xc00040a0f0, 0x1, 0xc0016f0101?})

/go/src/github.com/containerd/containerd/container.go:318 +0x214

github.com/containerd/containerd/pkg/cri/server.updateContainerSpec({0x56190174ac40, 0xc0017390b0}, {0x561901754a58, 0xc0006e4a80}, 0xc0016f4b80)

而这部分代码的实现位于 containerd/pkg/cri/server/container_update_resources.go

func (c *criService) UpdateContainerResources(ctx context.Context, r *runtime.UpdateContainerResourcesRequest) (retRes *runtime.UpdateContainerResourcesResponse, retErr error) {

container, err := c.containerStore.Get(r.GetContainerId())

if err != nil {

return nil, fmt.Errorf("failed to find container: %w", err)

}

// Update resources in status update transaction, so that:

// 1) There won't be race condition with container start.

// 2) There won't be concurrent resource update to the same container.

if err := container.Status.UpdateSync(func(status containerstore.Status) (containerstore.Status, error) {

return c.updateContainerResources(ctx, container, r, status)

}); err != nil {

return nil, fmt.Errorf("failed to update resources: %w", err)

}

return &runtime.UpdateContainerResourcesResponse{}, nil

UpdateContainerResources

持有容器状态的锁,而

ListContainers

需要使用该锁,这就导致 PLEG (Pod Lifecycle Event Generator)花费很长时间。

goroutine 1665 [semacquire]:

sync.runtime_SemacquireMutex(0xe69?, 0xae?, 0x1768c1d3f53d5f79?)

/usr/local/go/src/runtime/sema.go:77 +0x25

sync.(*RWMutex).RLock(...)

/usr/local/go/src/sync/rwmutex.go:71

github.com/containerd/containerd/pkg/cri/store/container.(*statusStorage).Get(0xc00058a880?)

/go/src/github.com/containerd/containerd/pkg/cri/store/container/status.go:205 +0x9c

github.com/containerd/containerd/pkg/cri/server.toCRIContainer({{{0xc00136b280, 0x40}, {0xc00186b6d0, 0x4d}, {0xc001a8e6c0, 0x40}, 0xc0007129a0, {0xc00050eb40, 0x47}, {0xc000dfcd80, ...}, ...}, ...})

/go/src/github.com/containerd/containerd/pkg/cri/server/container_list.go:48 +0x3f

github.com/containerd/containerd/pkg/cri/server.(*criService).ListContainers(0xc000548400, {0xc0006027b0?, 0x561900e89376?}, 0xc000ef6130)

/go/src/github.com/containerd/containerd/pkg/cri/server/container_list.go:37 +0x205

github.com/containerd/containerd/pkg/cri/server.(*instrumentedService).ListContainers(0xc000708168, {0x56190174ac40, 0xc0006027b0}, 0xc000ef6130)

/go/src/github.com/containerd/containerd/pkg/cri/server/instrumented_service.go:515 +0x1d3

UpdateContainerResource

持有 bbolt 数据库的锁,其正在尝试将数据同步到存储中,因此我们猜测此时主机I/O存在压力,如果启用cgroup v2,可以通过查看io pressure文件(后续我们介绍一下PSI),或者通过iostat命令

goroutine 1268 [syscall]:

syscall.Syscall(0x0?, 0x0?, 0xc000984000?, 0x686?)

/usr/local/go/src/syscall/syscall_linux.go:68 +0x27

syscall.Fsync(0x0?)

/usr/local/go/src/syscall/zsyscall_linux_amd64.go:423 +0x2a

internal/poll.(*FD).Fsync.func1(...)

/usr/local/go/src/internal/poll/fd_fsync_posix.go:18

internal/poll.ignoringEINTR(...)

/usr/local/go/src/internal/poll/fd_posix.go:74

internal/poll.(*FD).Fsync(0x0?)

/usr/local/go/src/internal/poll/fd_fsync_posix.go:17 +0xf4

os.(*File).Sync(0xc00011a6b8)

/usr/local/go/src/os/file_posix.go:168 +0x4e

github.com/containerd/containerd/content/local.(*writer).Commit(0xc0008c7880, {0x56190174ac40, 0xc0006028a0}, 0x181ebe95, {0xc001476780, 0x47}, {0x0, 0x0, 0x5619023eb940?})

/go/src/github.com/containerd/containerd/content/local/writer.go:95 +0x24e

github.com/containerd/containerd/metadata.(*namespacedWriter).commit(0xc0006f5320, {0x56190174ac40, 0xc0006028a0}, 0x0?, 0x181ebe95, {0xc001476780, 0x47}, {0x0, 0x0, 0x0})

/go/src/github.com/containerd/containerd/metadata/content.go:641 +0x5bf

github.com/containerd/containerd/metadata.(*namespacedWriter).Commit.func1(0x0?)

/go/src/github.com/containerd/containerd/metadata/content.go:578 +0x8f

go.etcd.io/bbolt.(*DB).Update(0x0?, 0xc000bb01e0)

/go/src/github.com/containerd/containerd/vendor/go.etcd.io/bbolt/db.go:741 +0x82

github.com/containerd/containerd/metadata.(*DB).Update(0xc0001e92d0, 0x5619014f3000?)

/go/src/github.com/containerd/containerd/metadata/db.go:246 +0x9e

github.com/containerd/containerd/metadata.update({0x56190174ac40?, 0xc0006028a0?}, {0x561901738b90, 0xc0001e92d0}, 0xc000

为了验证上述排查思路,我们换了一台节点,移除dockershim,重启机器后,发现大量image pull(因为containerd存储的image与docker是不一致,所有kubelet启动容器时需要拉取镜像),这时候通过iostat发现IO非常高,原因是什么?

对于频繁的镜像 pull和unpack,导致磁盘争用的问题,正如技术背景中提到的ImagePull的过程,虽然ImagePull 不会强制刷新所有脏页到磁盘,但 umount 会这样做。

那么如何解决这个问题?

我们只需要避免umount时的强制刷新,即使有再多镜像拉取,这种现象也能得到缓解。

问题修复

我们在社区找到了相应的修复方案,PR#8676 为overlay插件添加mount option,以使用 volatile,该特性需要overlay内核存储的支持, https://docs.kernel.org/filesystems/overlayfs.html#volatile-mount。

注:volatile 可以实现跳过 sync 调用,这样umount 不会因为同步到磁盘而出现卡顿。

由于笔者时间、视野、认知有限,本文难免出现错误、疏漏等问题,期待各位读者朋友、业界专家指正交流,上述排障信息已修改为社区内容。

参考文献

1.https://pkg.go.dev/k8s.io/kubernetes/pkg/kubelet/pleg

2.https://github.com/containerd/containerd/issues/2886

3.

[overlay] add configurable mount options to overlay snapshotter

4.Containers slow to start after init container finishes