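Using Knox for LDAP Authentication in Ambari

Knox offers the following two authentication methods:

- ShiroProvider: for LDAP/AD authentication, using a username and password. No SPNEGO/Kerberos support.

- HadoopAuth: for SPNEGO/Kerberos authentication, using delegation tokens. No LDAP/AD support.

This article focuses on the LDAP method: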

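1 Configuring LDAP Authentication

When Knox is installed through Ambari, the default installation directory is /usr/hdp/current/knox-server. The default cluster name is default, and the corresponding topology file is /usr/hdp/current/knox-server/conf/topologies/default.xml

There are two ways to set up an LDAP server: install OpenLDAP manually, or use the Demo LDAP bundled with Knox:

- To install OpenLDAP manually, see "Installing OpenLDAP on CentOS 7".

- To use the Demo LDAP server bundled with Knox, go to Services -> Knox -> Service Actions -> Start Demo LDAP in Ambari.

The tests below use a manually deployed OpenLDAP server on which a test user named test (dn: cn=test,ou=users,dc=hdp,dc=com) was created; its password is test. Beginners may want to install JXplorer to connect to the LDAP server and browse its data.

To modify Knox's default cluster topology file (default.xml), go to Services -> Knox -> Configs -> Advanced topology and replace the first provider element of the topology XML with the following: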

<topology>
  <gateway>
    <provider>
      <role>authentication</role>
      <name>ShiroProvider</name>
      <enabled>true</enabled>
      <param>
        <name>sessionTimeout</name>
        <value>30</value>
      </param>
      <param>
        <name>main.ldapRealm</name>
        <value>org.apache.hadoop.gateway.shirorealm.KnoxLdapRealm</value>
      </param>
      <param>
        <name>main.ldapRealm.userDnTemplate</name>
        <value>uid={0},ou=users,dc=hdp,dc=com</value>
      </param>
      <param>
        <name>main.ldapRealm.contextFactory.url</name>
        <value>ldap://{{knox_host_name}}</value>
      </param>
      <param>
        <name>main.ldapRealm.contextFactory.authenticationMechanism</name>
        <value>simple</value>
      </param>
      <param>
        <name>urls./**</name>
        <value>authcBasic</value>
      </param>
    </provider>
    ......
</topology>

Compared with the default configuration, only two parameters were changed:

<param>
  <name>main.ldapRealm.userDnTemplate</name>
  <value>cn={0},ou=users,dc=hdp,dc=com</value>
</param>
<param>
  <name>main.ldapRealm.contextFactory.url</name>
  <value>ldap://freeipa.testing.com</value>
</param>

- main.ldapRealm.userDnTemplate

You would use LDAP configuration as documented above to authenticate against Active Directory as well.

Some Active Directory specific things to keep in mind:

Typical AD main.ldapRealm.userDnTemplate value looks slightly different, such as

cn={0},cn=users,DC=lab,DC=sample,dc=com

Please compare this with a typical Apache DS main.ldapRealm.userDnTemplate value and make note of the difference:

uid={0},ou=people,dc=hadoop,dc=apache,dc=org

If your AD is configured to authenticate based on just the cn and password and does not require user DN, you do not have to specify value for main.ldapRealm.userDnTemplate.

- main.ldapRealm.contextFactory.url

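The URL of your own LDAP server. In this setup LDAP does not have TLS enabled and uses the default port 389.

For example, the ApacheDS-based LDAP server I use is at ldap://ldap_host:10389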
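To make the two parameters more concrete, the sketch below mimics how the `{0}` placeholder in userDnTemplate turns a login name into a bind DN, and how a port is read from the LDAP URL. It is only an illustration in Python, not Knox or Shiro code: the helper names `expand_user_dn` and `ldap_endpoint` are made up for this example, and the fallback to port 389 assumes plain `ldap://` without TLS.

```python
from urllib.parse import urlparse

DEFAULT_LDAP_PORT = 389  # standard port for plain (non-TLS) LDAP


def expand_user_dn(template, username):
    """Substitute the login name for the {0} placeholder (hypothetical helper)."""
    return template.replace("{0}", username)


def ldap_endpoint(url):
    """Return (host, port) for an ldap:// URL, defaulting to port 389."""
    parsed = urlparse(url)
    return parsed.hostname, parsed.port or DEFAULT_LDAP_PORT


if __name__ == "__main__":
    # The test user from this article: logging in as "test" binds with this DN.
    print(expand_user_dn("cn={0},ou=users,dc=hdp,dc=com", "test"))
    print(ldap_endpoint("ldap://freeipa.testing.com"))
    print(ldap_endpoint("ldap://ldap_host:10389"))
```

If the expanded DN does not match the entry that actually exists in the directory (for example `uid=` versus `cn=`), the bind is likely to fail even with the right password, which is why the template had to be changed here.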

Click Save in the Ambari UI, then restart the affected services using the orange button. Afterwards you will find that default.xml under /usr/hdp/current/knox-server/conf/topologies/ has been updated.
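One way to double-check that the change reached the topology is to parse the XML and print the authentication provider's parameters. The following is a minimal sketch using Python's standard library; the function name `provider_params` and the trimmed sample topology are assumptions for illustration, and a real default.xml contains more providers and services.

```python
import xml.etree.ElementTree as ET

# Trimmed-down topology used only for this example.
SAMPLE_TOPOLOGY = """
<topology>
  <gateway>
    <provider>
      <role>authentication</role>
      <name>ShiroProvider</name>
      <enabled>true</enabled>
      <param>
        <name>main.ldapRealm.userDnTemplate</name>
        <value>cn={0},ou=users,dc=hdp,dc=com</value>
      </param>
      <param>
        <name>main.ldapRealm.contextFactory.url</name>
        <value>ldap://freeipa.testing.com</value>
      </param>
    </provider>
  </gateway>
</topology>
"""


def provider_params(topology_xml, role):
    """Collect the <param> name/value pairs of the first provider with this role."""
    root = ET.fromstring(topology_xml.strip())
    for provider in root.iter("provider"):
        if provider.findtext("role") == role:
            return {p.findtext("name"): p.findtext("value")
                    for p in provider.findall("param")}
    return {}


if __name__ == "__main__":
    for name, value in provider_params(SAMPLE_TOPOLOGY, "authentication").items():
        print(f"{name} = {value}")
```

To inspect the real file, read the contents of /usr/hdp/current/knox-server/conf/topologies/default.xml on the Knox host and pass them to `provider_params` instead of the sample string.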

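2 Verifying the Knox Gateway

As a proxy gateway, Knox maps every RESTful service and web page it can proxy to an address under the gateway. See the URL mapping section of the user guide: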

https://knox.apache.org/books/knox-1-6-0/user-guide.html#URL+Mapping
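The gateway URLs used in the rest of this article all follow the same shape: gateway host and port, the gateway path, the topology name, then the service path. A small sketch of that pattern, assuming port 8443 and the path `gateway` as seen in the URLs of this article (the function name `gateway_url` is hypothetical):

```python
def gateway_url(host, topology, service_path, port=8443, gateway_path="gateway"):
    """Build the Knox-facing URL for a proxied service path."""
    return f"https://{host}:{port}/{gateway_path}/{topology}/{service_path.lstrip('/')}"


if __name__ == "__main__":
    # The YARN REST endpoint and the HDFS UI as they appear behind the gateway.
    print(gateway_url("knox_host_name", "default", "resourcemanager/v1/cluster/apps"))
    print(gateway_url("hdp.test.col10", "default", "hdfs"))
```

The topology segment is the file name of the topology without `.xml`, so default.xml is reached under `/gateway/default/`.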

Service HA configuration documentation:

https://knox.apache.org/books/knox-1-6-0/user-guide.html#Default+Service+HA+support

2.1 YARN RESTful

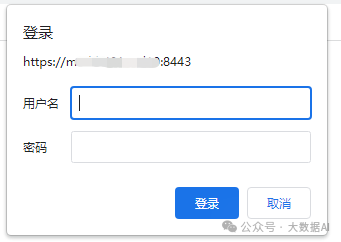

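Now let's test YARN's RESTful service. Open a browser and enter https://knox_host_name:8443/gateway/default/resourcemanager/v1/cluster/apps, the YARN REST endpoint that lists the applications running on the cluster. As shown in the figure below, the Knox gateway asks for login credentials.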

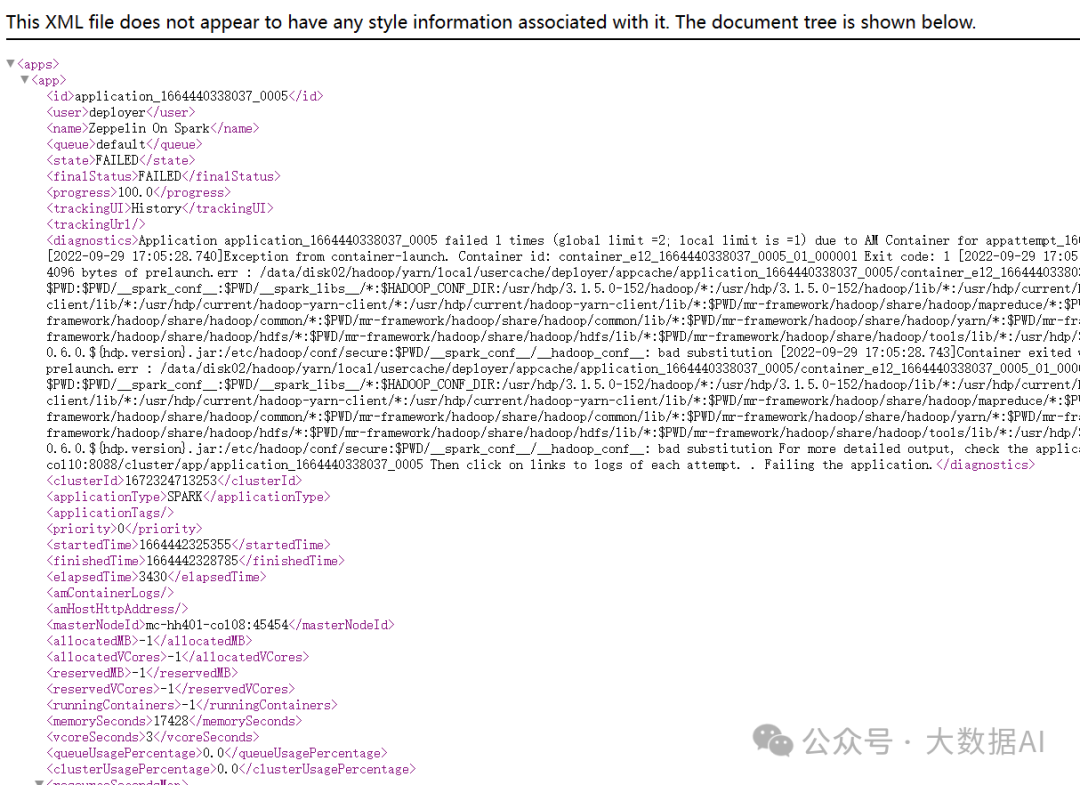

Enter the username and password. Once authentication succeeds, the requested data is returned, as shown in the figure.
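The browser prompt appears because the topology maps `urls./**` to `authcBasic`, that is, HTTP Basic authentication. The same call can be scripted. Below is a minimal sketch with Python's standard library; the helper names are my own, the credentials are the test user from this article, and certificate verification is switched off on the assumption that the gateway uses a self-signed certificate (similar to `curl -k`), which is not advisable outside a test setup.

```python
import base64
import ssl
import urllib.request


def basic_auth_header(username, password):
    """Encode credentials the way HTTP Basic authentication expects."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_request(url, username, password):
    """Prepare a GET request carrying the Basic Authorization header."""
    return urllib.request.Request(
        url, headers={"Authorization": basic_auth_header(username, password)}
    )


def fetch(url, username, password):
    """Send the request; certificate checks are skipped, like `curl -k`."""
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    request = build_request(url, username, password)
    with urllib.request.urlopen(request, context=context) as resp:
        return resp.status, resp.read().decode("utf-8")


if __name__ == "__main__":
    url = "https://knox_host_name:8443/gateway/default/resourcemanager/v1/cluster/apps"
    print(build_request(url, "test", "test").get_header("Authorization"))
    # fetch(url, "test", "test")  # uncomment on a host that can reach the gateway
```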

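2.2 YARN UI

Next, let's try to access the YARN web UI console. Knox's default configuration only proxies the RESTful endpoints, so the configuration has to be changed to add the web UI we want to proxy. In Ambari, open the Knox configuration page, select Advanced topology, append the YARN UI configuration at the end, save, and then restart the Knox service.

YARNUI HA configuration: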

<provider>
  <role>ha</role>
  <name>HaProvider</name>
  <enabled>true</enabled>
  <param>
    <name>WEBHDFS</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
  <param>
    <name>HDFSUI</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
  <param>
    <name>YARNUI</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
</provider>
<service>
  <role>YARNUI</role>
  <url>http://hdp.test.col10:8088</url>
  <url>http://hdp.test.col09:8088</url>
</service>
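Each HaProvider param packs several settings into one semicolon-separated string. As I read the Knox HA documentation, maxFailoverAttempts caps how many failovers are tried and failoverSleep is the pause between attempts in milliseconds. The sketch below just splits such a string into typed values; `parse_ha_params` is a hypothetical helper, not part of Knox.

```python
def parse_ha_params(value):
    """Split 'key=value;key=value' into a dict with typed values."""
    raw = dict(item.split("=", 1) for item in value.split(";") if item)
    return {
        "maxFailoverAttempts": int(raw["maxFailoverAttempts"]),
        "failoverSleep": int(raw["failoverSleep"]),  # milliseconds
        "enabled": raw["enabled"].lower() == "true",
    }


if __name__ == "__main__":
    print(parse_ha_params("maxFailoverAttempts=3;failoverSleep=1000;enabled=true"))
```

With the values used here, a request that cannot reach one ResourceManager URL would be retried against the other, up to three times with a one-second pause, under that reading of the settings.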

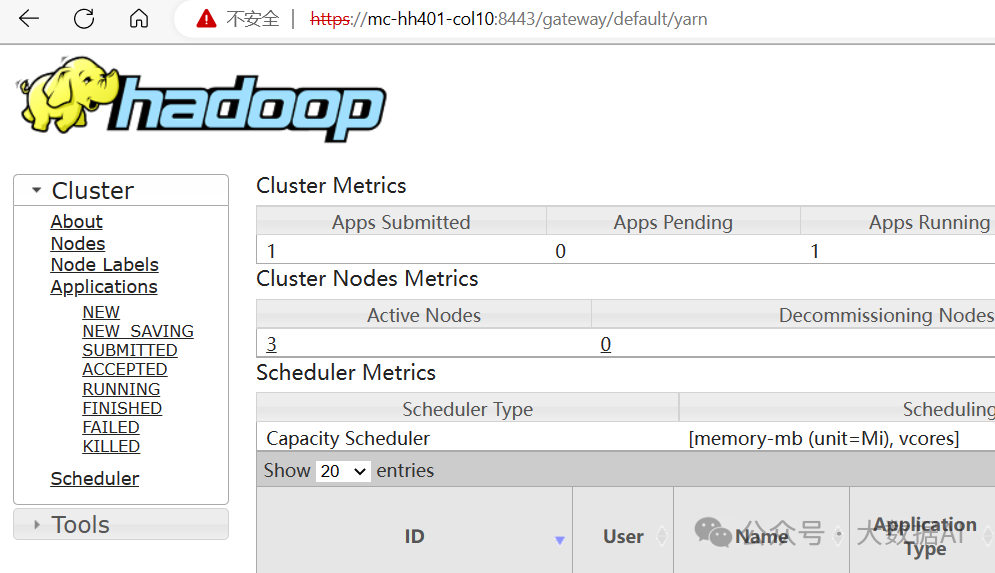

Now open the browser again and enter https://knox_host_name:8443/gateway/default/yarn to reach the YARN web UI console.

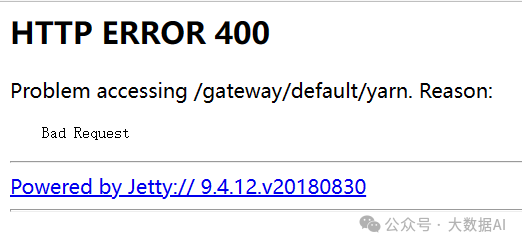

The request fails with an error. The Knox service log shows the following:

2023-01-04 17:20:28,759 INFO knox.gateway (AclsAuthorizationFilter.java:doFilter(104)) - Access Granted: true

2023-01-04 17:20:28,760 ERROR knox.gateway (GatewayDispatchFilter.java:isDispatchAllowed(155)) - The dispatch to http://hdp.test.col09:8088/cluster was disallowed because it fails the dispatch whitelist validation. See documentation for dispatch whitelisting.

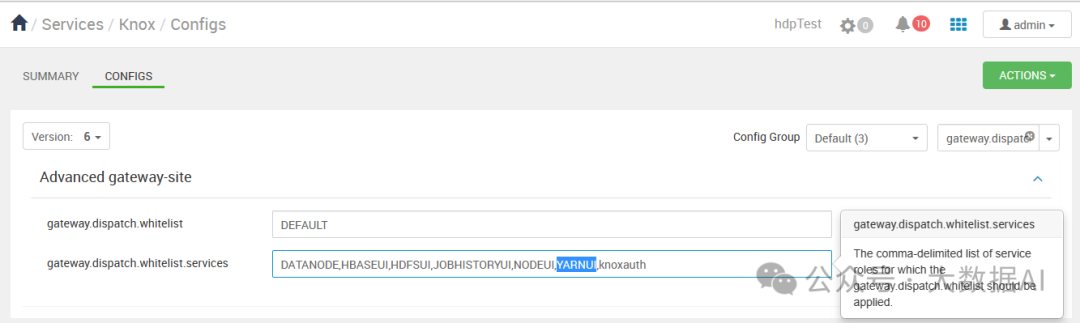

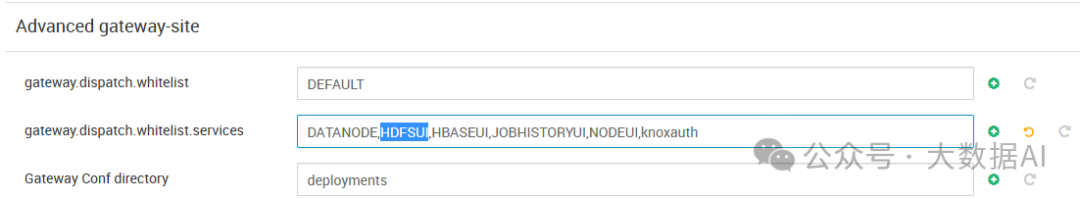

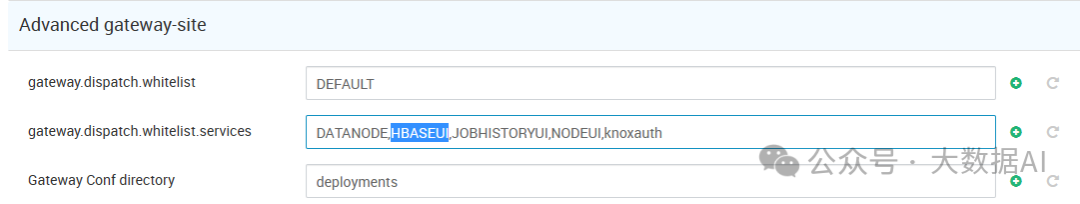

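The gateway.dispatch.whitelist.services property needs to be changed: remove YARNUI from its value. If it is not removed, the dispatch is rejected with the whitelist validation error shown in the log above.

Access https://knox_host_name:8443/gateway/default/yarn again; after entering the username and password, the page loads normally.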
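Why removing YARNUI helps can be shown with a deliberately simplified model: only the service roles listed in gateway.dispatch.whitelist.services are checked against the whitelist patterns, so a role taken off the list is no longer validated. The code below is my own sketch of that idea, not Knox's implementation; the function name and the example.com pattern are hypothetical.

```python
import re


def is_dispatch_allowed(role, url, whitelist_services, whitelist_patterns):
    """Simplified model: only roles listed in whitelist_services are checked."""
    if role not in whitelist_services:
        return True  # role is exempt from whitelist validation
    return any(re.fullmatch(p, url) for p in whitelist_patterns)


# Hypothetical whitelist that only admits hosts under example.com.
PATTERNS = [r"https?://[^/]+\.example\.com(:\d+)?(/.*)?"]
TARGET = "http://hdp.test.col09:8088/cluster"

if __name__ == "__main__":
    # YARNUI still listed: the backend host does not match, so dispatch is refused.
    print(is_dispatch_allowed("YARNUI", TARGET, ["YARNUI", "HDFSUI"], PATTERNS))
    # YARNUI removed from the list: no validation, dispatch goes through.
    print(is_dispatch_allowed("YARNUI", TARGET, ["HDFSUI"], PATTERNS))
```

An alternative I have not tried here would be to keep YARNUI in the list and instead adjust the gateway.dispatch.whitelist property so that its patterns match the cluster's hosts, which keeps the validation in place.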

2.3 HDFS RESTful

Configuration:

<provider>
  <role>ha</role>
  <name>HaProvider</name>
  <enabled>true</enabled>
  <param>
    <name>WEBHDFS</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
  <param>
    <name>HDFSUI</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
  <param>
    <name>YARNUI</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
</provider>
<service>
  <role>WEBHDFS</role>
  <url>http://hdp.test.col09:50070/webhdfs</url>
  <url>http://hdp.test.col10:50070/webhdfs</url>
</service>

Test:

curl -i -k -u guest:guest-password -X GET \
  'https://localhost:8443/gateway/default/webhdfs/v1/?op=LISTSTATUS'
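A successful LISTSTATUS call returns JSON with a `FileStatuses` object wrapping a `FileStatus` array, one entry per file or directory. The sketch below extracts the entry names from such a body. The sample body is invented for illustration and is not output from the cluster in this article; `list_names` is a hypothetical helper.

```python
import json

# Illustrative response body in the WebHDFS LISTSTATUS shape; the entries
# are made up for this example.
SAMPLE_BODY = """
{"FileStatuses": {"FileStatus": [
  {"pathSuffix": "tmp",  "type": "DIRECTORY", "length": 0},
  {"pathSuffix": "user", "type": "DIRECTORY", "length": 0}
]}}
"""


def list_names(body):
    """Return the entry names from a LISTSTATUS JSON response."""
    statuses = json.loads(body)["FileStatuses"]["FileStatus"]
    return [entry["pathSuffix"] for entry in statuses]


if __name__ == "__main__":
    print(list_names(SAMPLE_BODY))
```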

2.4 HDFSUI

Configuration:

<provider>
  <role>ha</role>
  <name>HaProvider</name>
  <enabled>true</enabled>
  <param>
    <name>WEBHDFS</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
  <param>
    <name>HDFSUI</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
  <param>
    <name>YARNUI</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
</provider>
<service>
  <role>HDFSUI</role>
  <url>http://hdp.test.col09:50070</url>
  <url>http://hdp.test.col10:50070</url>
</service>

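The gateway.dispatch.whitelist.services property needs to be changed: remove HDFSUI from its value; otherwise the dispatch fails the whitelist validation.

Test:

After opening https://hdp.test.col10:8443/gateway/default/hdfs and entering the username and password, the Knox log reports the following error: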

Caused by: java.io.IOException: java.io.IOException: Service connectivity error.

at org.apache.knox.gateway.ha.dispatch.DefaultHaDispatch.failoverRequest(DefaultHaDispatch.java:125)

at org.apache.knox.gateway.ha.dispatch.DefaultHaDispatch.executeRequest(DefaultHaDispatch.java:94)

at org.apache.knox.gateway.ha.dispatch.DefaultHaDispatch.failoverRequest(DefaultHaDispatch.java:119)

at org.apache.knox.gateway.ha.dispatch.DefaultHaDispatch.executeRequest(DefaultHaDispatch.java:94)

at org.apache.knox.gateway.ha.dispatch.DefaultHaDispatch.failoverRequest(DefaultHaDispatch.java:119)

at org.apache.knox.gateway.ha.dispatch.DefaultHaDispatch.executeRequest(DefaultHaDispatch.java:94)

at org.apache.knox.gateway.ha.dispatch.DefaultHaDispatch.failoverRequest(DefaultHaDispatch.java:119)

at org.apache.knox.gateway.ha.dispatch.DefaultHaDispatch.executeRequest(DefaultHaDispatch.java:94)

at org.apache.knox.gateway.dispatch.DefaultDispatch.doGet(DefaultDispatch.java:278)

at org.apache.knox.gateway.dispatch.GatewayDispatchFilter$GetAdapter.doMethod(GatewayDispatchFilter.java:170)

at org.apache.knox.gateway.dispatch.GatewayDispatchFilter.doFilter(GatewayDispatchFilter.java:122)

at org.apache.knox.gateway.filter.AbstractGatewayFilter.doFilter(AbstractGatewayFilter.java:61)

at org.apache.knox.gateway.GatewayFilter$Holder.doFilter(GatewayFilter.java:372)

at org.apache.knox.gateway.GatewayFilter$Chain.doFilter(GatewayFilter.java:272)

at org.apache.knox.gateway.filter.AclsAuthorizationFilter.doFilter(AclsAuthorizationFilter.java:108)

at org.apache.knox.gateway.GatewayFilter$Holder.doFilter(GatewayFilter.java:372)

at org.apache.knox.gateway.GatewayFilter$Chain.doFilter(GatewayFilter.java:272)

at org.apache.knox.gateway.identityasserter.common.filter.AbstractIdentityAssertionFilter.doFilterInternal(AbstractIdentityAssertionFilter.java:196)

at org.apache.knox.gateway.identityasserter.common.filter.AbstractIdentityAssertionFilter.continueChainAsPrincipal(AbstractIdentityAssertionFilter.java:153)

at org.apache.knox.gateway.identityasserter.common.filter.CommonIdentityAssertionFilter.doFilter(CommonIdentityAssertionFilter.java:90)

at org.apache.knox.gateway.GatewayFilter$Holder.doFilter(GatewayFilter.java:372)

at org.apache.knox.gateway.GatewayFilter$Chain.doFilter(GatewayFilter.java:272)

at org.apache.knox.gateway.filter.rewrite.api.UrlRewriteServletFilter.doFilter(UrlRewriteServletFilter.java:60)

at org.apache.knox.gateway.filter.AbstractGatewayFilter.doFilter(AbstractGatewayFilter.java:61)

at org.apache.knox.gateway.GatewayFilter$Holder.doFilter(GatewayFilter.java:372)

at org.apache.knox.gateway.GatewayFilter$Chain.doFilter(GatewayFilter.java:272)

at org.apache.knox.gateway.filter.ShiroSubjectIdentityAdapter$CallableChain$1.run(ShiroSubjectIdentityAdapter.java:91)

at org.apache.knox.gateway.filter.ShiroSubjectIdentityAdapter$CallableChain$1.run(ShiroSubjectIdentityAdapter.java:88)

... 82 more

Caused by: java.io.IOException: Service connectivity error.

at org.apache.knox.gateway.dispatch.DefaultDispatch.executeOutboundRequest(DefaultDispatch.java:148)

at org.apache.knox.gateway.ha.dispatch.DefaultHaDispatch.executeRequest(DefaultHaDispatch.java:90)

... 108 more

23/01/10 14:47:28 ||91d91611-27bc-4c0f-84f0-ece1497d461e|audit|10.252.xx.xx|HDFSUI||||access|uri|/gateway/default/hdfs|unavailable|Request method: GET

23/01/10 14:47:28 ||91d91611-27bc-4c0f-84f0-ece1497d461e|audit|10.252.xx.xx|HDFSUI|test|||authentication|uri|/gateway/default/hdfs|success|

23/01/10 14:47:28 ||91d91611-27bc-4c0f-84f0-ece1497d461e|audit|10.252.xx.xx|HDFSUI|test|||authentication|uri|/gateway/default/hdfs|success|Groups: []

23/01/10 14:47:28 ||91d91611-27bc-4c0f-84f0-ece1497d461e|audit|10.252.xx.xx|HDFSUI|test|||authorization|uri|/gateway/default/hdfs|success|

23/01/10 14:47:28 ||91d91611-27bc-4c0f-84f0-ece1497d461e|audit|10.252.xx.xx|HDFSUI|test|||dispatch|uri|https://hdp.test.col10:8443/gateway/default/hdfs?user.name=test|unavailable|Request method: GET

23/01/10 14:47:28 ||91d91611-27bc-4c0f-84f0-ece1497d461e|audit|10.252.xx.xx|HDFSUI|test|||dispatch|uri|https://hdp.test.col10:8443/gateway/default/hdfs?user.name=test|failure|

23/01/10 14:47:28 ||91d91611-27bc-4c0f-84f0-ece1497d461e|audit|10.252.xx.xx|HDFSUI|test|||access|uri|/gateway/default/hdfs|failure|

Cause: HDP 3.1.0 - Knox proxy HDFSUI - HTTP 401 Error

This seems to be a known bug in Knox-1.0 used in HDP-3.1. One solution is to modify the URL used to access HDFS UI:

https://<knox-host>:8443/gateway/default/hdfs?host=http://<namenode-host>:50070

Admittedly not a great solution but it worked for me. Another solution which I haven't tried is to insert the following line in the HDFSUI service definition in Knox topology file.

<version>2.7.0</version>

This will use an older, bug-free version of HDFSUI service. More details here.

Add the version element to the service definition as follows:

<service>
  <role>HDFSUI</role>
  <version>2.7.0</version>
  <url>http://hdp.test.col09:50070</url>
  <url>http://hdp.test.col10:50070</url>
</service>

Access https://hdp.test.col10:8443/gateway/default/hdfs again; the page now loads normally.

The Knox log is normal as well:

23/01/10 15:18:23 ||e042d675-f027-4175-9dbf-12fcbe7d4a23|audit|10.252.169.246|HDFSUI||||access|uri|/gateway/default/hdfs|unavailable|Request method: GET

23/01/10 15:18:23 ||e042d675-f027-4175-9dbf-12fcbe7d4a23|audit|10.252.169.246|HDFSUI|test|||authentication|uri|/gateway/default/hdfs|success|

23/01/10 15:18:23 ||e042d675-f027-4175-9dbf-12fcbe7d4a23|audit|10.252.169.246|HDFSUI|test|||authentication|uri|/gateway/default/hdfs|success|Groups: []

23/01/10 15:18:23 ||e042d675-f027-4175-9dbf-12fcbe7d4a23|audit|10.252.169.246|HDFSUI|test|||authorization|uri|/gateway/default/hdfs|success|

23/01/10 15:18:23 ||e042d675-f027-4175-9dbf-12fcbe7d4a23|audit|10.252.169.246|HDFSUI|test|||dispatch|uri|http://hdp.test.col09:50070/?user.name=test|unavailable|Request method: GET

23/01/10 15:18:23 ||e042d675-f027-4175-9dbf-12fcbe7d4a23|audit|10.252.169.246|HDFSUI|test|||dispatch|uri|http://hdp.test.col09:50070/?user.name=test|success|Response status: 200

23/01/10 15:18:23 |||audit|10.252.169.246|HDFSUI|test|||access|uri|/gateway/default/hdfs|success|Response status: 200
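When comparing a failing run with a working one, it can help to pull a few columns out of these pipe-separated audit lines. The sketch below does so by position. The column meanings are inferred from the lines shown in this article rather than taken from Knox documentation, and the key names and helper functions are my own.

```python
FIELDS = 14  # number of '|'-separated columns in the audit lines shown above


def parse_audit_line(line):
    """Pick out a few columns by position; the key names are this example's own."""
    parts = line.rstrip("\n").split("|")
    if len(parts) != FIELDS:
        raise ValueError(f"expected {FIELDS} columns, got {len(parts)}")
    return {
        "time": parts[0].strip(),
        "service": parts[5],
        "user": parts[6],
        "action": parts[9],
        "resource": parts[11],
        "outcome": parts[12],
        "message": parts[13],
    }


def failures(lines):
    """Keep only the entries whose outcome column is 'failure'."""
    return [e for e in map(parse_audit_line, lines) if e["outcome"] == "failure"]


# Two dispatch lines copied from the logs in this section.
LINES = [
    "23/01/10 14:47:28 ||91d91611-27bc-4c0f-84f0-ece1497d461e|audit|10.252.xx.xx|HDFSUI|test|||dispatch|uri|https://hdp.test.col10:8443/gateway/default/hdfs?user.name=test|failure|",
    "23/01/10 15:18:23 ||e042d675-f027-4175-9dbf-12fcbe7d4a23|audit|10.252.169.246|HDFSUI|test|||dispatch|uri|http://hdp.test.col09:50070/?user.name=test|success|Response status: 200",
]

if __name__ == "__main__":
    for entry in failures(LINES):
        print(entry["time"], entry["action"], entry["resource"])
```

Seen this way, the difference between the two runs stands out: in the failing case the dispatch target is the gateway's own address, while after adding the version element it is the NameNode at port 50070.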

2.5 HBASEUI

Configuration:

<provider>
  <role>ha</role>
  <name>HaProvider</name>
  <enabled>true</enabled>
  <param>
    <name>WEBHDFS</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
  <param>
    <name>HDFSUI</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
  <param>
    <name>YARNUI</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
  <param>
    <name>HBASEUI</name>
    <value>maxFailoverAttempts=3;failoverSleep=1000;enabled=true</value>
  </param>
</provider>
<service>
  <role>HBASEUI</role>
  <url>http://hdp.test.col10:16010</url>
  <url>http://hdp.test.col09:16010</url>
</service>

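The gateway.dispatch.whitelist.services property needs to be changed: remove HBASEUI from its value; otherwise the dispatch fails the whitelist validation.

Open https://hdp.test.col10:8443/gateway/default/hbase/webui and enter the username and password to reach the HBase UI.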