Velero: A Powerful Tool for Backing Up and Migrating Kubernetes

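Have you ever run into situations like these while operating a Kubernetes cluster?

A newcomer clicks "delete" on a namespace, every resource under it is lost, and all you can do is redeploy everything step by step. Or you build a new cluster and, to keep the environment as consistent as possible, you pull YAML files out of the old cluster and frantically apply them to the new one. Each of these maddening moments is followed by hours of grunt work that eat up your best years.

Many tools for backing up cluster resource objects have since been open-sourced. Put them to use and you get twice the result for half the effort, with no more miserable overtime.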

1

Comparing cluster backup approaches

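1. etcd backup

An etcd backup cannot be restored at the granularity of a single resource object; backup and restore are generally done with snapshots.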

    # Backup
    date
    CACERT="/opt/kubernetes/ssl/ca.pem"
    CERT="/opt/kubernetes/ssl/server.pem"
    KEY="/opt/kubernetes/ssl/server-key.pem"
    ENDPOINTS="192.168.1.36:2379"
    ETCDCTL_API=3 etcdctl \
      --cacert="${CACERT}" --cert="${CERT}" --key="${KEY}" \
      --endpoints="${ENDPOINTS}" \
      snapshot save "/data/etcd_backup_dir/etcd-snapshot-$(date +%Y%m%d).db"
    # Keep backups for 30 days
    find /data/etcd_backup_dir/ -name '*.db' -mtime +30 -exec rm -f {} \;
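The retention rule that `find ... -mtime +30` enforces can also be expressed in code. The following is a minimal Go sketch, assuming the snapshot names and modification times are already known; the `snapshot` type and the `expired` and `exampleExpired` functions are hypothetical names for illustration and belong to neither etcd nor Velero. It compares exact durations, so it only roughly mirrors the day-rounding that `find` applies.

```go
package main

import (
	"fmt"
	"time"
)

// snapshot is a hypothetical record describing one backup file.
type snapshot struct {
	Name    string
	ModTime time.Time
}

// expired returns the names of snapshots whose age exceeds keep.
func expired(snaps []snapshot, now time.Time, keep time.Duration) []string {
	var out []string
	for _, s := range snaps {
		if now.Sub(s.ModTime) > keep {
			out = append(out, s.Name)
		}
	}
	return out
}

// exampleExpired applies a 30-day window to two made-up snapshots.
func exampleExpired() []string {
	now := time.Date(2020, 1, 31, 0, 0, 0, 0, time.UTC)
	snaps := []snapshot{
		{Name: "etcd-snapshot-20191222.db", ModTime: time.Date(2019, 12, 22, 0, 0, 0, 0, time.UTC)},
		{Name: "etcd-snapshot-20200120.db", ModTime: time.Date(2020, 1, 20, 0, 0, 0, 0, time.UTC)},
	}
	return expired(snaps, now, 30*24*time.Hour)
}

func main() {
	// Only the December snapshot is older than 30 days on 2020-01-31.
	fmt.Println(exampleExpired())
}
```

Keeping the selection separate from the deletion makes it easy to print what would be removed before actually removing anything.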

    # Restore
    ETCDCTL_API=3 etcdctl snapshot restore /data/etcd_backup_dir/etcd-snapshot-20191222.db \
      --name etcd-0 \
      --initial-cluster "etcd-0=https://192.168.1.36:2380,etcd-1=https://192.168.1.37:2380,etcd-2=https://192.168.1.38:2380" \
      --initial-cluster-token etcd-cluster \
      --initial-advertise-peer-urls https://192.168.1.36:2380 \
      --data-dir=/var/lib/etcd/default.etcd

[…] this becomes very useful for migration. Many tools now provide this capability, for example Velero, PX-Backup, and Kasten.

velero:

Velero is an open source tool to safely backup and restore, perform disaster recovery, and migrate Kubernetes cluster resources and persistent volumes.

PX-Backup:

Built from the ground up for Kubernetes, PX-Backup delivers enterprise-grade application and data protection with fast recovery at the click of a button.

Kasten:

Purpose-built for Kubernetes, Kasten K10 provides enterprise operations teams an easy-to-use, scalable, and secure system for backup/restore, disaster recovery, and mobility of Kubernetes applications.

2

velero

Velero's official description names the three capabilities above, which mainly come down to backup, restore, and migration.

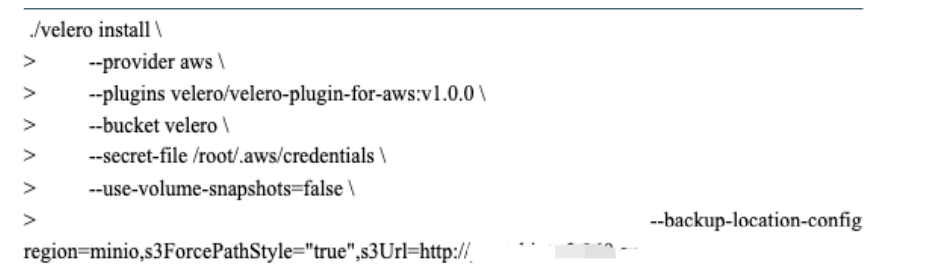

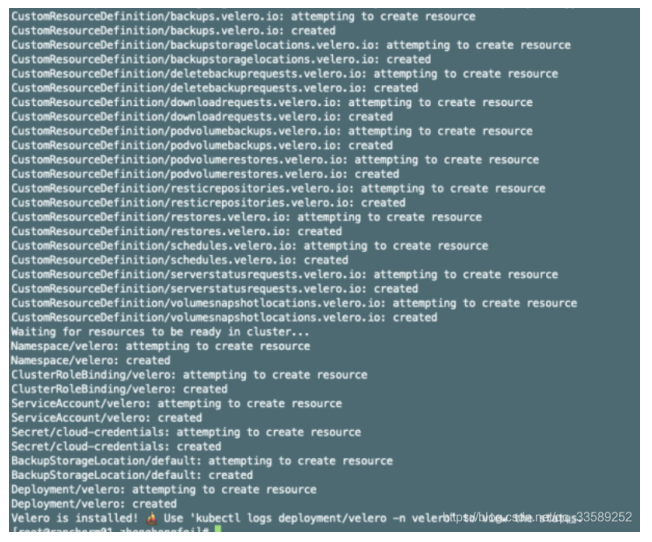

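You can see that many CRDs were created and that the application ends up running in the velero namespace. The CRD names alone give a rough idea of what each one is for.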

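2. Scheduled backups

For operations staff, guaranteeing the stability of a cluster to its users is essential, and that means turning on scheduled backups. A scheduled task can be started from the command line, specifying which namespaces to back up, how long to keep the backup data, and how often to run the backup.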

    Examples:
      # Create a backup every 6 hours
      velero create schedule NAME --schedule="0 */6 * * *"

      # Create a backup every 6 hours with the @every notation
      velero create schedule NAME --schedule="@every 6h"

      # Create a daily backup of the web namespace
      velero create schedule NAME --schedule="@every 24h" --include-namespaces web

      # Create a weekly backup, each living for 90 days (2160 hours)
      velero create schedule NAME --schedule="@every 168h" --ttl 2160h0m0s

    velero create schedule 360cloud --schedule="@every 24h" --ttl 2160h0m0s
    Schedule "360cloud" created successfully.
    ~]# kubectl get schedules --all-namespaces
    NAMESPACE   NAME       AGE
    velero      360cloud   40s
    ~]# kubectl get schedules -n velero 360cloud -o yaml
    apiVersion: velero.io/v1
    kind: Schedule
    metadata:
      generation: 3
      name: 360cloud
      namespace: velero
      resourceVersion: "18164238"
      selfLink: /apis/velero.io/v1/namespaces/velero/schedules/360cloud
      uid: 7c04af34-1529-4b48-a3d1-d2f5e98de328
    spec:
      schedule: '@every 24h'
      template:
        hooks: {}
        includedNamespaces:
        - '*'
        ttl: 2160h0m0s
    status:
      lastBackup: "2021-03-07T08:18:49Z"
      phase: Enabled
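The interval and the TTL together determine roughly how many backups sit in object storage once the schedule has been running for a while. Below is a small Go sketch of that arithmetic; it only understands the `@every <duration>` notation (not cron expressions), and `retainedBackups` is a hypothetical helper, not part of Velero.

```go
package main

import (
	"fmt"
	"strings"
	"time"
)

// retainedBackups estimates how many backups exist at steady state for an
// "@every <duration>" schedule whose backups each live for ttl.
func retainedBackups(schedule, ttl string) (int, error) {
	const prefix = "@every "
	if !strings.HasPrefix(schedule, prefix) {
		return 0, fmt.Errorf("unsupported schedule %q: only @every is handled", schedule)
	}
	interval, err := time.ParseDuration(strings.TrimPrefix(schedule, prefix))
	if err != nil {
		return 0, err
	}
	if interval <= 0 {
		return 0, fmt.Errorf("interval must be positive, got %s", interval)
	}
	life, err := time.ParseDuration(ttl)
	if err != nil {
		return 0, err
	}
	// Integer division: partial intervals do not add another backup.
	return int(life / interval), nil
}

func main() {
	n, err := retainedBackups("@every 24h", "2160h0m0s")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("backups kept at steady state:", n)
}
```

For the `360cloud` schedule above (one backup every 24h, each kept for 2160h) this works out to about 90 backups, which is worth knowing before sizing the bucket.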

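3. Backups for cluster migration

The resource objects we want to migrate may not be covered by a scheduled backup, or they may be covered but we want the latest data. In either case, taking a one-off backup for the migration is enough.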

    velero backup create test01 --include-namespaces default
    Backup request "test01" submitted successfully.
    Run `velero backup describe test01` or `velero backup logs test01` for more details.
    [root@xxxxx ~]# velero backup describe test01
    Name:         test01
    Namespace:    velero
    Labels:       velero.io/storage-location=default
    Annotations:  velero.io/source-cluster-k8s-gitversion=v1.19.7
                  velero.io/source-cluster-k8s-major-version=1
                  velero.io/source-cluster-k8s-minor-version=19
    Phase:  InProgress
    Errors:    0
    Warnings:  0
    Namespaces:
      Included:  default
      Excluded:  <none>
    Resources:
      Included:        *
      Excluded:        <none>
      Cluster-scoped:  auto
    Label selector:  <none>
    Storage Location:  default
    Velero-Native Snapshot PVs:  auto
    TTL:  720h0m0s
    Hooks:  <none>
    Backup Format Version:  1.1.0
    Started:    2021-03-07 16:44:52 +0800 CST
    Completed:  <n/a>
    Expiration:  2021-04-06 16:44:52 +0800 CST
    Velero-Native Snapshots: <none included>
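The `Started`, `TTL`, and `Expiration` fields in the output above are related by simple addition. As a quick sanity check, here is a Go sketch that recomputes the expiration from the other two; the `expiration` helper and the timestamp layout (the output format without the trailing zone abbreviation) are assumptions made for this example.

```go
package main

import (
	"fmt"
	"time"
)

// layout matches timestamps such as "2021-03-07 16:44:52 +0800".
const layout = "2006-01-02 15:04:05 -0700"

// expiration adds a TTL such as "720h0m0s" to a start timestamp.
func expiration(started, ttl string) (string, error) {
	t, err := time.Parse(layout, started)
	if err != nil {
		return "", err
	}
	d, err := time.ParseDuration(ttl)
	if err != nil {
		return "", err
	}
	return t.Add(d).Format(layout), nil
}

func main() {
	exp, err := expiration("2021-03-07 16:44:52 +0800", "720h0m0s")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("expires:", exp)
}
```

With the default TTL of 720h (30 days), a backup started on 2021-03-07 expires on 2021-04-06, matching the `describe` output.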

    [root@xxxxx ~]# velero restore create --from-backup test01
    Restore request "test01-20210307164809" submitted successfully.
    Run `velero restore describe test01-20210307164809` or `velero restore logs test01-20210307164809` for more details.
    [root@xxxxx ~]# kubectl get pod
    NAME                     READY   STATUS              RESTARTS   AGE
    nginx-6799fc88d8-4bnfg   0/1     ContainerCreating   0          6s
    nginx-6799fc88d8-cq82j   0/1     ContainerCreating   0          6s
    nginx-6799fc88d8-f6qsx   0/1     ContainerCreating   0          6s
    nginx-6799fc88d8-gq2xt   0/1     ContainerCreating   0          6s
    nginx-6799fc88d8-j5fc7   0/1     ContainerCreating   0          6s
    nginx-6799fc88d8-kvvx6   0/1     ContainerCreating   0          5s
    nginx-6799fc88d8-pccc4   0/1     ContainerCreating   0          5s
    nginx-6799fc88d8-q2fnt   0/1     ContainerCreating   0          4s
    nginx-6799fc88d8-r9dqn   0/1     ContainerCreating   0          4s
    nginx-6799fc88d8-zqv6v   0/1     ContainerCreating   0          4s
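The restore above was created without an explicit name and came back as `test01-20210307164809`, which reads as the backup name followed by a timestamp. Here is a minimal Go sketch of that naming pattern, assuming the suffix is the creation time formatted as YYYYMMDDhhmmss in the local zone; `defaultRestoreName` and `exampleName` are hypothetical helpers written for this article.

```go
package main

import (
	"fmt"
	"time"
)

// defaultRestoreName joins a backup name and a compact timestamp.
func defaultRestoreName(backup string, created time.Time) string {
	return backup + "-" + created.Format("20060102150405")
}

// exampleName rebuilds the name seen in the session above, assuming the
// restore was requested at 16:48:09 on 2021-03-07 in a +0800 zone.
func exampleName() string {
	cst := time.FixedZone("CST", 8*60*60)
	return defaultRestoreName("test01", time.Date(2021, 3, 7, 16, 48, 9, 0, cst))
}

func main() {
	fmt.Println(exampleName())
}
```

Knowing the pattern helps when scripting around restores, for example to find every restore that came from a given backup by its name prefix.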

Storage records in S3:

3

Backing up and migrating PVCs

velero install --use-restic

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        backup.velero.io/backup-volumes: mypvc
      name: rbd-test
    spec:
      containers:
      - name: web-server
        image: nginx
        volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
      volumes:
      - name: mypvc
        persistentVolumeClaim:
          claimName: rbd-pvc-zhf
          readOnly: false

You choose which volumes in a pod get backed up by annotating the pod, in either opt-in or opt-out fashion.
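To make the two modes concrete, here is a simplified Go sketch of the selection logic. It uses the annotation keys `backup.velero.io/backup-volumes` (opt-in) and `backup.velero.io/backup-volumes-excludes` (opt-out); the function names are hypothetical, and the sketch ignores the additional rules the real tool applies (for example, skipping certain volume types), so treat it as an illustration and not as Velero's implementation.

```go
package main

import (
	"fmt"
	"strings"
)

const (
	optInKey  = "backup.velero.io/backup-volumes"
	optOutKey = "backup.velero.io/backup-volumes-excludes"
)

// splitList turns "a, b" into a set {a, b}, skipping empty entries.
func splitList(s string) map[string]bool {
	set := map[string]bool{}
	for _, v := range strings.Split(s, ",") {
		if v = strings.TrimSpace(v); v != "" {
			set[v] = true
		}
	}
	return set
}

// volumesToBackUp picks volumes from a pod. In opt-in mode only the volumes
// listed under optInKey are selected; in opt-out mode every volume is
// selected except those listed under optOutKey.
func volumesToBackUp(annotations map[string]string, volumes []string, optOut bool) []string {
	listed := splitList(annotations[optInKey])
	if optOut {
		listed = splitList(annotations[optOutKey])
	}
	var out []string
	for _, v := range volumes {
		// Opt-in keeps listed volumes; opt-out keeps unlisted ones.
		if listed[v] != optOut {
			out = append(out, v)
		}
	}
	return out
}

func main() {
	volumes := []string{"mypvc", "cache"}

	optIn := map[string]string{optInKey: "mypvc"}
	fmt.Println("opt-in: ", volumesToBackUp(optIn, volumes, false))

	optOut := map[string]string{optOutKey: "cache"}
	fmt.Println("opt-out:", volumesToBackUp(optOut, volumes, true))
}
```

Both calls select only `mypvc`: the first because it is listed explicitly, the second because `cache` is excluded.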

    velero backup create testpvc05 --snapshot-volumes=true --include-namespaces default
    Backup request "testpvc05" submitted successfully.
    Run `velero backup describe testpvc05` or `velero backup logs testpvc05` for more details.
    [root@xxxx ceph]# velero backup describe testpvc05
    Name:         testpvc05
    Namespace:    velero
    Labels:       velero.io/storage-location=default
    Annotations:  velero.io/source-cluster-k8s-gitversion=v1.19.7
                  velero.io/source-cluster-k8s-major-version=1
                  velero.io/source-cluster-k8s-minor-version=19
    Phase:  Completed
    Errors:    0
    Warnings:  0
    Namespaces:
      Included:  default
      Excluded:  <none>
    Resources:
      Included:        *
      Excluded:        <none>
      Cluster-scoped:  auto
    Label selector:  <none>
    Storage Location:  default
    Velero-Native Snapshot PVs:  true
    TTL:  720h0m0s
    Hooks:  <none>
    Backup Format Version:  1.1.0
    Started:    2021-03-10 15:11:26 +0800 CST
    Completed:  2021-03-10 15:11:36 +0800 CST
    Expiration:  2021-04-09 15:11:26 +0800 CST
    Total items to be backed up:  92
    Items backed up:              92
    Velero-Native Snapshots: <none included>
    Restic Backups (specify --details for more information):
      Completed:  1

    [root ceph]# kubectl delete pod rbd-test
    pod "rbd-test" deleted
    [root ceph]# kubectl delete pvc rbd-pvc-zhf
    persistentvolumeclaim "rbd-pvc-zhf" deleted

    [root@xxxxx ceph]# velero restore create testpvc05 --restore-volumes=true --from-backup testpvc05
    Restore request "testpvc05" submitted successfully.
    Run `velero restore describe testpvc05` or `velero restore logs testpvc05` for more details.
    [root@xxxxxx ceph]# kubectl get pod
    NAME                     READY   STATUS     RESTARTS   AGE
    nginx-6799fc88d8-4bnfg   1/1     Running    0          2d22h
    rbd-test                 0/1     Init:0/1   0          6s

The restored data:

    []
    total 20
    drwx------ 2 root root 16384 Mar 10 06:31 lost+found
    -rw-r--r-- 1 root root    13 Mar 10 07:11 zheng.txt
    [] /var/lib/www/html/zheng.txt
    zhenghongfei
    []

4

HOOK

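    metadata:
      name: nginx-deployment
      namespace: nginx-example
    spec:
      replicas:
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
          annotations:
            pre.hook.backup.velero.io/container: fsfreeze
            pre.hook.backup.velero.io/command: '["/sbin/fsfreeze", "--freeze", "/var/log/nginx"]'
            post.hook.backup.velero.io/container: fsfreeze
            post.hook.backup.velero.io/command: '["/sbin/fsfreeze", "--unfreeze", "/var/log/nginx"]'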

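This example uses a pre hook and a post hook to freeze and unfreeze the file system. Freezing the file system helps ensure that all pending disk I/O has completed before the snapshot is taken.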
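The hook command in the annotation is written as a JSON array of strings, one element per argument. A minimal Go sketch of decoding such a value into an argument list follows; `parseHookCommand` is a hypothetical helper that handles only the JSON-array form shown above and is not Velero's own parser.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// parseHookCommand decodes a hook command annotation value, written as a
// JSON array of strings, into an argv slice.
func parseHookCommand(value string) ([]string, error) {
	var argv []string
	if err := json.Unmarshal([]byte(value), &argv); err != nil {
		return nil, fmt.Errorf("hook command must be a JSON array of strings: %w", err)
	}
	if len(argv) == 0 {
		return nil, fmt.Errorf("hook command is empty")
	}
	return argv, nil
}

func main() {
	argv, err := parseHookCommand(`["/sbin/fsfreeze", "--freeze", "/var/log/nginx"]`)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("binary:", argv[0])
	fmt.Println("args:  ", argv[1:])
}
```

Because each argument is its own array element, no shell quoting is involved; a path containing spaces stays a single argument.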

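Of course, the same mechanism can be used to back up MySQL or other files, but it is only recommended for small files, with backup and restore handled pod by pod.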

5

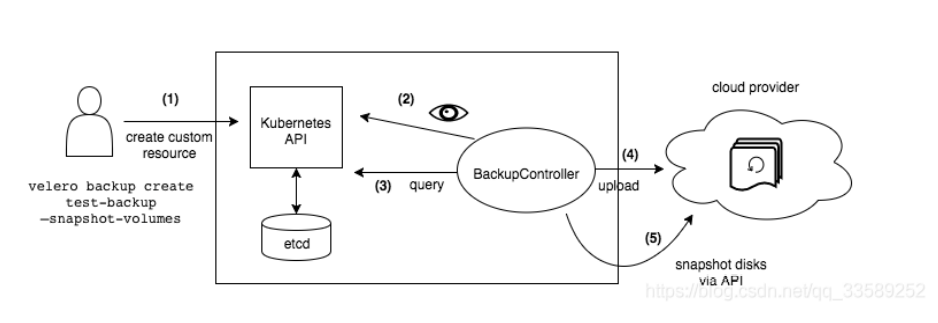

Exploring how backup is implemented

    collector := &itemCollector{
        log:                   log,
        backupRequest:         backupRequest,
        discoveryHelper:       kb.discoveryHelper,
        dynamicFactory:        kb.dynamicFactory,
        cohabitatingResources: cohabitatingResources(),
        dir:                   tempDir,
    }
    items := collector.getAllItems()

The function that gets called:

    func (kb *kubernetesBackupper) backupItem(log logrus.FieldLogger, gr schema.GroupResource, itemBackupper *itemBackupper, unstructured *unstructured.Unstructured, preferredGVR schema.GroupVersionResource) bool {
        backedUpItem, err := itemBackupper.backupItem(log, unstructured, gr, preferredGVR)
        if aggregate, ok := err.(kubeerrs.Aggregate); ok {
            log.WithField("name", unstructured.GetName()).Infof("%d errors encountered backup up item", len(aggregate.Errors()))
            // log each error separately so we get error location info in the log, and an
            // accurate count of errors
            for _, err = range aggregate.Errors() {
                log.WithError(err).WithField("name", unstructured.GetName()).Error("Error backing up item")
            }
            return false
        }
        if err != nil {
            log.WithError(err).WithField("name", unstructured.GetName()).Error("Error backing up item")
            return false
        }
        return backedUpItem
    }
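The function above uses a type assertion to tell an aggregate error (several failures bundled together) apart from a single error, so that each failure is logged and counted separately. The following self-contained Go sketch shows the same pattern with the standard library only; the `aggregate` interface and the `multiError` type are stand-ins written for this example and are not the `kubeerrs.Aggregate` type itself.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// aggregate is a stand-in for an error that bundles several errors.
type aggregate interface {
	error
	Errors() []error
}

// multiError is a minimal aggregate implementation.
type multiError []error

func (m multiError) Error() string {
	msgs := make([]string, len(m))
	for i, err := range m {
		msgs[i] = err.Error()
	}
	return strings.Join(msgs, "; ")
}

func (m multiError) Errors() []error { return m }

// countErrors reports how many individual errors err carries.
func countErrors(err error) int {
	if err == nil {
		return 0
	}
	if agg, ok := err.(aggregate); ok {
		return len(agg.Errors())
	}
	return 1
}

func main() {
	bundled := multiError{errors.New("pvc not found"), errors.New("hook timed out")}
	fmt.Println(countErrors(bundled))            // aggregate: counts each member
	fmt.Println(countErrors(errors.New("boom"))) // plain error: counts as one
	fmt.Println(countErrors(nil))                // no error
}
```

Unpacking the aggregate first is what keeps the error count in the backup summary accurate; treating it as one error would under-report failures.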

    client, err := ib.dynamicFactory.ClientForGroupVersionResource(gvr.GroupVersion(), resource, additionalItem.Namespace)
    if err != nil {
        return nil, err
    }
    item, err := client.Get(additionalItem.Name, metav1.GetOptions{})

    log.Debugf("Resource %s/%s, version= %s, preferredVersion=%s", groupResource.String(), name, version, preferredVersion)
    if version == preferredVersion {
        if namespace != "" {
            filePath = filepath.Join(velerov1api.ResourcesDir, groupResource.String(), velerov1api.NamespaceScopedDir, namespace, name+".json")
        } else {
            filePath = filepath.Join(velerov1api.ResourcesDir, groupResource.String(), velerov1api.ClusterScopedDir, name+".json")
        }
        hdr = &tar.Header{
            Name:     filePath,
            Size:     int64(len(itemBytes)),
            Typeflag: tar.TypeReg,
            Mode:     0755,
            ModTime:  time.Now(),
        }
        if err := ib.tarWriter.WriteHeader(hdr); err != nil {
            return false, errors.WithStack(err)
        }
        if _, err := ib.tarWriter.Write(itemBytes); err != nil {
            return false, errors.WithStack(err)
        }
    }
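In other words, each resource is serialized to JSON and written into a tar archive at a path derived from its group/resource, its namespace, and its name. Here is a runnable Go sketch of that idea using an in-memory archive. The directory names `resources`, `namespaces`, and `cluster` are assumptions standing in for the `velerov1api` constants, `itemPath` and `writeItem` are hypothetical helpers, and `path.Join` is used in place of `filepath.Join` so the entries always use forward slashes.

```go
package main

import (
	"archive/tar"
	"bytes"
	"fmt"
	"io"
	"path"
)

// Assumed stand-ins for velerov1api.ResourcesDir, NamespaceScopedDir and
// ClusterScopedDir.
const (
	resourcesDir       = "resources"
	namespaceScopedDir = "namespaces"
	clusterScopedDir   = "cluster"
)

// itemPath follows the branch above: namespaced items go under
// namespaces/<namespace>, cluster-scoped items under cluster.
func itemPath(groupResource, namespace, name string) string {
	if namespace != "" {
		return path.Join(resourcesDir, groupResource, namespaceScopedDir, namespace, name+".json")
	}
	return path.Join(resourcesDir, groupResource, clusterScopedDir, name+".json")
}

// writeItem appends one JSON document to the archive as a regular file.
func writeItem(tw *tar.Writer, name string, itemBytes []byte) error {
	hdr := &tar.Header{
		Name:     name,
		Size:     int64(len(itemBytes)),
		Typeflag: tar.TypeReg,
		Mode:     0755,
	}
	if err := tw.WriteHeader(hdr); err != nil {
		return err
	}
	_, err := tw.Write(itemBytes)
	return err
}

func main() {
	var buf bytes.Buffer
	tw := tar.NewWriter(&buf)

	name := itemPath("deployments.apps", "default", "nginx")
	if err := writeItem(tw, name, []byte(`{"kind":"Deployment"}`)); err != nil {
		fmt.Println("write error:", err)
		return
	}
	if err := tw.Close(); err != nil {
		fmt.Println("close error:", err)
		return
	}

	// Read the archive back and list its entries.
	tr := tar.NewReader(&buf)
	for {
		hdr, err := tr.Next()
		if err == io.EOF {
			break
		}
		if err != nil {
			fmt.Println("read error:", err)
			return
		}
		fmt.Println(hdr.Name, hdr.Size)
	}
}
```

This layout is why a downloaded backup can be unpacked and browsed by resource type and namespace like an ordinary directory tree.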

6

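Other backup tools

PX-Backup is a paid product; paying users get more powerful features. kanister leans more toward storing and restoring data, such as etcd snapshots and MongoDB.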

References:

https://github.com/vmware-tanzu/velero

https://portworx.com/

https://www.kasten.io/

https://github.com/kanisterio/kanister

https://duyanghao.github.io/kubernetes-ha-and-bur/

https://blog.kubernauts.io/backup-and-restore-of-kubernetes-applications-using-heptios-velerowith-restic-and-rook-ceph-as-2e8df15b1487
