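Camera: Practical Camera Tips Worth Learning Right Now!

Special Note

This article only covers using and wrapping the Android APIs; it does not go into professional audio/video territory.

Main Text

Most of us are familiar with the camera. Many apps use the Camera hardware, usually to take photos or record video, which are very common features.

So how is it normally used? If there are no UI or logic constraints and you simply need to take a photo or record a video, you can launch the system's capture or recording Activity through an Intent. That is the most convenient approach, and in that case you do not need this article.

But there are always apps and requirements that call for either a custom camera UI or custom business logic. In those cases we generally have to implement the Camera logic ourselves: showing a preview, running face detection or image recognition on the frames delivered by the callback, customizing the capture logic (tap to take a photo, long-press to record), or recording video with effects or filters.

Since the Camera API is unavoidable here, this article looks at it from the application side: how to use the Camera API to get the result you want.

Which Camera API should we use, then? As you have probably heard, there are currently three to choose from: Camera, Camera2, and CameraX. What??? Is Google out of its mind? Why add to our workload as developers? With things this complicated, how am I supposed to use any of it!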

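1. The History of the Camera APIs

The split mainly comes down to compatibility and security. Here is a brief summary:

The Camera API is the legacy android.hardware.Camera class, which lets an application interact with the camera hardware fairly directly; under the hood it reaches the hardware through the driver.

It provides basic control and image capture, but this approach has limitations and device-compatibility problems. The Android team therefore introduced a brand-new camera API in Android 5.0 (API level 21): the Camera2 API.

The Camera2 API introduced a new architecture. The application talks to the system "camera service" through CameraManager and controls the camera with objects such as CameraDevice and CameraCaptureSession. This gives finer-grained control and better performance, and removes some limitations of the old API.

That is a real improvement: direct operation becomes communication with a service, with finer control and better performance. So far so good, so why release CameraX as well?

Despite its advantages, the Camera2 API still requires a lot of code to handle all the different situations and device quirks. To simplify camera development and improve cross-device compatibility, Google released the CameraX library.

CameraX wraps and simplifies the Camera2 API to offer a more consistent, easier-to-use camera interface. It abstracts away the low-level camera logic and device differences, so developers can write camera code in a uniform way through a higher-level API without worrying about device-specific compatibility details.

In practice, what we usually build with Camera2 can be done more simply and conveniently with CameraX, so I personally recommend CameraX.

Below I go through how to use and wrap each of the three Camera APIs.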

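2. Using and Wrapping Camera1

With Camera1 we generally use a SurfaceView or a TextureView to host the preview. The difference in this scenario is that a TextureView behaves like a regular View, so it supports animations and transforms such as adaptive scaling and cropped layouts.

Here we use a plain SurfaceView to demonstrate:

First create the SurfaceView, register the callback, and return it so it can be added to the container we specify: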

public View initCamera(Context context) {

mSurfaceView = new SurfaceView(context);

mContext = context;

mSurfaceView.setLayoutParams(new ViewGroup.LayoutParams(ViewGroup.LayoutParams.MATCH_PARENT, ViewGroup.LayoutParams.MATCH_PARENT));

mSurfaceHolder = mSurfaceView.getHolder();

mSurfaceHolder.addCallback(new CustomCallBack());

mSurfaceHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

return mSurfaceView;

}

In the SurfaceView callback we initialize the Camera:

private class CustomCallBack implements SurfaceHolder.Callback {

@Override

public void surfaceCreated(SurfaceHolder holder) {

initCamera();

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

releaseAllCamera();

}

}

private void initCamera() {

if (mCamera != null) {

releaseAllCamera();

}

// Open the camera

try {

mCamera = Camera.open();

} catch (Exception e) {

e.printStackTrace();

releaseAllCamera();

}

if (mCamera == null)

return;

// Configure the camera parameters

setCameraParams();

try {

mCamera.setDisplayOrientation(90); // rotate the preview by 90 degrees (portrait); this does not rotate captured photos

mCamera.setPreviewDisplay(mSurfaceHolder);

mCamera.startPreview();

mCamera.unlock(); // only needed before MediaRecorder.setCamera(); omit it for preview-only use

} catch (IllegalStateException e) {

e.printStackTrace();

} catch (RuntimeException e) {

e.printStackTrace();

} catch (Exception e) {

e.printStackTrace();

}

}

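The preview orientation and preview size need extra handling here. That is too much code to show inline, so it is included in the helper class below.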
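To make the orientation handling concrete, here is a minimal sketch of the preview-rotation formula, following the sample in the Android documentation for Camera.setDisplayOrientation. The class name DisplayOrientationSketch and its compute method are hypothetical, and the sensor orientation and facing are passed in as plain values (an assumption, so the snippet runs without a device) instead of being read from Camera.CameraInfo.

```java
/** Sketch of the preview-rotation formula; names are illustrative, not part of the Android API. */
final class DisplayOrientationSketch {

    /**
     * @param frontFacing       true for a front camera (CameraInfo.facing)
     * @param sensorOrientation CameraInfo.orientation, in degrees
     * @param displayDegrees    current display rotation: 0, 90, 180 or 270
     * @return clockwise degrees to pass to Camera.setDisplayOrientation
     */
    static int compute(boolean frontFacing, int sensorOrientation, int displayDegrees) {
        if (frontFacing) {
            int result = (sensorOrientation + displayDegrees) % 360;
            // The front preview is mirrored, so the rotation is compensated in the other direction.
            return (360 - result) % 360;
        }
        return (sensorOrientation - displayDegrees + 360) % 360;
    }

    public static void main(String[] args) {
        // Typical phone held in portrait: back sensor mounted at 90, front sensor at 270.
        System.out.println(compute(false, 90, 0));
        System.out.println(compute(true, 270, 0));
    }
}
```

On a real device the three inputs would come from Camera.getCameraInfo and the display rotation, which is why hard-coding 90 only works for a typical back camera in portrait.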

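The code above is only the simplest usage. Switching between front and back cameras, mirroring, preview orientation, rotation angles, callback handling, and so on are all tedious to deal with, so here is a helper class I use myself to manage them in one place.

First define a callback interface: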

public interface CameraListener {

/**

* Called when the camera is opened

*

* @param camera the camera instance

* @param cameraId the camera ID

* @param displayOrientation preview rotation in degrees

* @param isMirror whether the preview is mirrored

*/

void onCameraOpened(Camera camera, int cameraId, int displayOrientation, boolean isMirror);

/**

* Preview frame callback

*

* @param data the preview frame data

* @param camera the camera instance

*/

void onPreview(byte[] data, Camera camera);

/**

* Called when the camera is closed

*/

void onCameraClosed();

/**

* Called when an error occurs

*

* @param e the camera-related exception

*/

void onCameraError(Exception e);

/**

* Called when the configuration changes

*

* @param cameraID the camera ID

* @param displayOrientation preview rotation in degrees

*/

void onCameraConfigurationChanged(int cameraID, int displayOrientation);

}

Then comes the helper class itself, posted here in full:

public class CameraHelper implements Camera.PreviewCallback {

private Camera mCamera;

private int mCameraId;

private Point previewViewSize;

private View previewDisplayView;

private Camera.Size previewSize;

private Point specificPreviewSize;

private int displayOrientation = 0;

private int rotation;

private int additionalRotation;

private boolean isMirror = false;

private Integer specificCameraId = null;

private CameraListener cameraListener; // custom listener callback

private CameraHelper(Builder builder) {

previewDisplayView = builder.previewDisplayView;

specificCameraId = builder.specificCameraId;

cameraListener = builder.cameraListener;

rotation = builder.rotation;

additionalRotation = builder.additionalRotation;

previewViewSize = builder.previewViewSize;

specificPreviewSize = builder.previewSize;

if (builder.previewDisplayView instanceof TextureView) {

isMirror = builder.isMirror;

} else if (builder.isMirror) { // check the requested value; the isMirror field is still false here

throw new RuntimeException("mirror is effective only when the preview is on a textureView");

}

}

public void init() {

if (previewDisplayView instanceof TextureView) {

((TextureView) this.previewDisplayView).setSurfaceTextureListener(textureListener);

} else if (previewDisplayView instanceof SurfaceView) {

((SurfaceView) previewDisplayView).getHolder().addCallback(surfaceCallback);

}

if (isMirror) {

previewDisplayView.setScaleX(-1);

}

}

public String start() {

String firstSize = null;

String finalSize = null;

synchronized (this) {

if (mCamera != null) {

return null;

}

// With 2 cameras open ID 1, with 1 camera open ID 0; ID 1 is usually the front camera and ID 0 the back

mCameraId = Camera.getNumberOfCameras() - 1;

// If a camera ID was specified and that camera exists, open it instead

if (specificCameraId != null && specificCameraId <= mCameraId) {

mCameraId = specificCameraId;

}

// No camera available

if (mCameraId == -1) {

if (cameraListener != null) {

cameraListener.onCameraError(new Exception("camera not found"));

}

return null;

}

// Open the camera

if (mCamera == null) {

mCamera = Camera.open(mCameraId);

}

// Compute the preview orientation

displayOrientation = getCameraOri(rotation);

mCamera.setDisplayOrientation(displayOrientation);

try {

Camera.Parameters parameters = mCamera.getParameters();

parameters.setPreviewFormat(ImageFormat.NV21);

// Choose the preview size

previewSize = parameters.getPreviewSize();

firstSize = previewSize.width + " - " + previewSize.height;

List<Camera.Size> supportedPreviewSizes = parameters.getSupportedPreviewSizes();

if (supportedPreviewSizes != null && supportedPreviewSizes.size() > 0) {

previewSize = getBestSupportedSize(supportedPreviewSizes, previewViewSize);

finalSize = previewSize.width + " - " + previewSize.height;

}

YYLogUtils.w("Base Preview Size ,Width:" + previewSize.width + " height:" + previewSize.height);

parameters.setPreviewSize(previewSize.width, previewSize.height);

// Choose the focus mode

List<String> supportedFocusModes = parameters.getSupportedFocusModes();

if (supportedFocusModes != null && supportedFocusModes.size() > 0) {

if (supportedFocusModes.contains(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE)) {

parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE);

} else if (supportedFocusModes.contains(Camera.Parameters.FOCUS_MODE_CONTINUOUS_VIDEO)) {

parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_VIDEO);

} else if (supportedFocusModes.contains(Camera.Parameters.FOCUS_MODE_AUTO)) {

parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_AUTO);

}

}

// Configuration done; apply the parameters

mCamera.setParameters(parameters);

// Bind to the TextureView or SurfaceView

if (previewDisplayView instanceof TextureView) {

mCamera.setPreviewTexture(((TextureView) previewDisplayView).getSurfaceTexture());

} else {

mCamera.setPreviewDisplay(((SurfaceView) previewDisplayView).getHolder());

}

// Set the preview callback and start the preview

mCamera.setPreviewCallback(this);

mCamera.startPreview();

if (cameraListener != null) {

cameraListener.onCameraOpened(mCamera, mCameraId, displayOrientation, isMirror);

}

} catch (Exception e) {

if (cameraListener != null) {

cameraListener.onCameraError(e);

}

}

}

return "firstSize :" + firstSize + " finalSize:" + finalSize;

}

private int getCameraOri(int rotation) {

int degrees = rotation * 90;

switch (rotation) {

case Surface.ROTATION_0:

degrees = 0;

break;

case Surface.ROTATION_90:

degrees = 90;

break;

case Surface.ROTATION_180:

degrees = 180;

break;

case Surface.ROTATION_270:

degrees = 270;

break;

default:

break;

}

additionalRotation /= 90;

additionalRotation *= 90;

degrees += additionalRotation;

int result;

Camera.CameraInfo info = new Camera.CameraInfo();

Camera.getCameraInfo(mCameraId, info);

if (info.facing == Camera.CameraInfo.CAMERA_FACING_FRONT) {

result = (info.orientation + degrees) % 360;

result = (360 - result) % 360;

} else {

result = (info.orientation - degrees + 360) % 360;

}

return result;

}

public void stop() {

synchronized (this) {

if (mCamera == null) {

return;

}

mCamera.setPreviewCallback(null);

mCamera.stopPreview();

mCamera.release();

mCamera = null;

if (cameraListener != null) {

cameraListener.onCameraClosed();

}

}

}

public boolean isStopped() {

synchronized (this) {

return mCamera == null;

}

}

public void release() {

synchronized (this) {

stop();

previewDisplayView = null;

specificCameraId = null;

cameraListener = null;

previewViewSize = null;

specificPreviewSize = null;

previewSize = null;

}

}

/**

* Picks the most suitable preview size from the sizes the camera supports

*/

private Camera.Size getBestSupportedSize(List<Camera.Size> sizes, Point previewViewSize) {

if (sizes == null || sizes.size() == 0) {

return mCamera.getParameters().getPreviewSize();

}

Camera.Size[] tempSizes = sizes.toArray(new Camera.Size[0]);

Arrays.sort(tempSizes, new Comparator<Camera.Size>() {

@Override

public int compare(Camera.Size o1, Camera.Size o2) {

if (o1.width > o2.width) {

return -1;

} else if (o1.width == o2.width) {

return Integer.compare(o2.height, o1.height); // descending; returns 0 for equal sizes, as the Comparator contract requires

} else {

return 1;

}

}

});

sizes = Arrays.asList(tempSizes);

Camera.Size bestSize = sizes.get(0);

float previewViewRatio;

if (previewViewSize != null) {

previewViewRatio = (float) previewViewSize.x / (float) previewViewSize.y;

} else {

previewViewRatio = (float) bestSize.width / (float) bestSize.height;

}

if (previewViewRatio > 1) {

previewViewRatio = 1 / previewViewRatio;

}

boolean isNormalRotate = (additionalRotation % 180 == 0);

for (Camera.Size s : sizes) {

if (specificPreviewSize != null && specificPreviewSize.x == s.width && specificPreviewSize.y == s.height) {

return s;

}

if (isNormalRotate) {

if (Math.abs((s.height / (float) s.width) - previewViewRatio) < Math.abs(bestSize.height / (float) bestSize.width - previewViewRatio)) {

bestSize = s;

}

} else {

if (Math.abs((s.width / (float) s.height) - previewViewRatio) < Math.abs(bestSize.width / (float) bestSize.height - previewViewRatio)) {

bestSize = s;

}

}

}

return bestSize;

}

public List<Camera.Size> getSupportedPreviewSizes() {

if (mCamera == null) {

return null;

}

return mCamera.getParameters().getSupportedPreviewSizes();

}

public List<Camera.Size> getSupportedPictureSizes() {

if (mCamera == null) {

return null;

}

return mCamera.getParameters().getSupportedPictureSizes();

}

@Override

public void onPreviewFrame(byte[] nv21, Camera camera) {

if (cameraListener != null && nv21 != null) {

cameraListener.onPreview(nv21, camera);

}

}

/**

* Listener callbacks for the TextureView

*/

private TextureView.SurfaceTextureListener textureListener = new TextureView.SurfaceTextureListener() {

@Override

public void onSurfaceTextureAvailable(SurfaceTexture surfaceTexture, int width, int height) {

// start();

if (mCamera != null) {

try {

mCamera.setPreviewTexture(surfaceTexture);

} catch (IOException e) {

e.printStackTrace();

}

}

}

@Override

public void onSurfaceTextureSizeChanged(SurfaceTexture surfaceTexture, int width, int height) {

}

@Override

public boolean onSurfaceTextureDestroyed(SurfaceTexture surfaceTexture) {

stop();

return false;

}

@Override

public void onSurfaceTextureUpdated(SurfaceTexture surfaceTexture) {

}

};

/**

* Callbacks for the SurfaceView

*/

private SurfaceHolder.Callback surfaceCallback = new SurfaceHolder.Callback() {

@Override

public void surfaceCreated(SurfaceHolder holder) {

if (mCamera != null) {

try {

mCamera.setPreviewDisplay(holder);

} catch (IOException e) {

e.printStackTrace();

}

}

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

stop();

}

};

/**

* Updates the preview orientation for a new display rotation

*/

public void changeDisplayOrientation(int rotation) {

if (mCamera != null) {

this.rotation = rotation;

displayOrientation = getCameraOri(rotation);

mCamera.setDisplayOrientation(displayOrientation);

if (cameraListener != null) {

cameraListener.onCameraConfigurationChanged(mCameraId, displayOrientation);

}

}

}

/**

* Switches between the front and back cameras

*/

public boolean switchCamera() {

if (Camera.getNumberOfCameras() < 2) {

return false;

}

// cameraId: 0 is usually the back camera, 1 the front

specificCameraId = 1 - mCameraId;

stop();

start();

return true;

}

/**

* Builder used to create and configure the CameraHelper

*/

public static final class Builder {

// View that displays the preview; only SurfaceView and TextureView are supported

private View previewDisplayView;

// Whether to mirror the preview; TextureView only

private boolean isMirror;

// Requested camera ID

private Integer specificCameraId;

// Event callback

private CameraListener cameraListener;

// Width and height of the preview area, used when choosing the best camera aspect ratio

private Point previewViewSize;

// Display rotation; usually the value of getWindowManager().getDefaultDisplay().getRotation()

private int rotation;

// Requested preview size; used for the preview if the camera supports it

private Point previewSize;

// Extra rotation in degrees (for adapting to some customized devices)

private int additionalRotation;

public Builder() {

}

// The preview must be bound to a SurfaceView or a TextureView

public Builder previewOn(View view) {

if (view instanceof SurfaceView || view instanceof TextureView) {

previewDisplayView = view;

return this;

} else {

throw new RuntimeException("you must preview on a textureView or a surfaceView");

}

}

public Builder isMirror(boolean mirror) {

isMirror = mirror;

return this;

}

public Builder previewSize(Point point) {

previewSize = point;

return this;

}

public Builder previewViewSize(Point point) {

previewViewSize = point;

return this;

}

public Builder rotation(int rotation) {

this.rotation = rotation; // assign the field; "rotation = rotation" only reassigned the parameter

return this;

}

public Builder additionalRotation(int rotation) {

additionalRotation = rotation;

return this;

}

public Builder specificCameraId(Integer id) {

specificCameraId = id;

return this;

}

public Builder cameraListener(CameraListener listener) {

cameraListener = listener;

return this;

}

public CameraHelper build() {

if (previewViewSize == null) {

throw new RuntimeException("previewViewSize is null; call previewViewSize(...) before build()");

}

if (cameraListener == null) {

throw new RuntimeException("cameraListener is null, callback will not be called");

}

if (previewDisplayView == null) {

throw new RuntimeException("you must preview on a textureView or a surfaceView");

}

// Run init() as part of build()

CameraHelper cameraHelper = new CameraHelper(this);

cameraHelper.init();

return cameraHelper;

}

}

}
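The aspect-ratio matching inside getBestSupportedSize can be looked at in isolation. The following is a simplified sketch rather than the helper's exact logic: it ignores additionalRotation and the explicitly requested size, represents sizes as {width, height} int pairs instead of Camera.Size, and assumes the list is ordered largest first so that ties go to the larger size. PreviewSizeSketch and closestByRatio are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;

/** Simplified sketch: choose the supported size whose aspect ratio best matches the view. */
final class PreviewSizeSketch {

    /** Returns the {width, height} entry whose short/long side ratio is closest to the view's. */
    static int[] closestByRatio(List<int[]> sizes, int viewWidth, int viewHeight) {
        if (sizes.isEmpty()) {
            throw new IllegalArgumentException("no supported sizes");
        }
        // Compare short side / long side so portrait views and landscape sensor sizes line up.
        float target = Math.min(viewWidth, viewHeight) / (float) Math.max(viewWidth, viewHeight);
        int[] best = null;
        float bestDiff = Float.MAX_VALUE;
        for (int[] s : sizes) {
            float ratio = Math.min(s[0], s[1]) / (float) Math.max(s[0], s[1]);
            float diff = Math.abs(ratio - target);
            if (diff < bestDiff) { // strict "<" keeps the earlier (larger) size on ties
                best = s;
                bestDiff = diff;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<int[]> sizes = Arrays.asList(
                new int[]{1920, 1080}, new int[]{1280, 720}, new int[]{640, 480});
        System.out.println(Arrays.toString(closestByRatio(sizes, 1080, 1920)));
    }
}
```

Matching on ratio rather than on absolute size is what keeps the preview from looking stretched when the view and the sensor have different resolutions.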

With that, using it is straightforward.

Again, create the SurfaceView and add it to the chosen layout container:

public View initCamera(Context context) {

mSurfaceView = new SurfaceView(context);

mContext = context;

mSurfaceView.setLayoutParams(new ViewGroup.LayoutParams(ViewGroup.LayoutParams.MATCH_PARENT, ViewGroup.LayoutParams.MATCH_PARENT));

mSurfaceView.getViewTreeObserver().addOnGlobalLayoutListener(new ViewTreeObserver.OnGlobalLayoutListener() {

@Override

public void onGlobalLayout() {

mSurfaceView.post(() -> {

setupCameraHelper();

});

mSurfaceView.getViewTreeObserver().removeOnGlobalLayoutListener(this);

}

});

return mSurfaceView;

}

private void setupCameraHelper() {

cameraHelper = new CameraHelper.Builder()

.previewViewSize(new Point(mSurfaceView.getMeasuredWidth(), mSurfaceView.getMeasuredHeight()))

.rotation(((Activity) mContext).getWindowManager().getDefaultDisplay().getRotation())

.specificCameraId(Camera.CameraInfo.CAMERA_FACING_BACK)

.isMirror(false)

.previewOn(mSurfaceView) // preview view; TextureView is recommended

.cameraListener(mCameraListener) // custom listener

.build();

cameraHelper.start();

}

All the logic is then handled in the callbacks:

// Custom listener

private CameraListener mCameraListener = new CameraListener() {

@Override

public void onCameraOpened(Camera camera, int cameraId, int displayOrientation, boolean isMirror) {

YYLogUtils.w("CameraListener - onCameraOpened");

// You can record video with MediaRecorder here

mMediaRecorder = new MediaRecorder();

...

}

@Override

public void onPreview(byte[] data, Camera camera) {

// NV21 frame data

// You can also encode it yourself with MediaCodec to record video

}

@Override

public void onCameraClosed() {

YYLogUtils.w("CameraListener - onCameraClosed");

}

@Override

public void onCameraError(Exception e) {

YYLogUtils.w("CameraListener - onCameraError");

}

@Override

public void onCameraConfigurationChanged(int cameraID, int displayOrientation) {

YYLogUtils.w("CameraListener - onCameraConfigurationChanged");

}

};
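The onPreview callback above delivers NV21 frames: a full-resolution Y plane followed by interleaved V and U samples at quarter resolution, for width * height * 3 / 2 bytes in total. Encoders frequently expect a planar layout such as I420 instead (commonly selected through COLOR_FormatYUV420Planar), so a conversion step is often needed before feeding MediaCodec. Here is a minimal sketch of that conversion; Nv21Sketch and nv21ToI420 are hypothetical names, and even frame dimensions are assumed.

```java
/** Sketch: repack an NV21 frame (Y, then interleaved VU) into I420 (Y, then U plane, then V plane). */
final class Nv21Sketch {

    static byte[] nv21ToI420(byte[] nv21, int width, int height) {
        int ySize = width * height;
        int chromaSize = ySize / 4; // one U and one V sample per 2x2 pixel block
        if (nv21.length < ySize + 2 * chromaSize) {
            throw new IllegalArgumentException("frame too small for " + width + "x" + height);
        }
        byte[] i420 = new byte[ySize + 2 * chromaSize];
        // The luma plane is identical in both layouts.
        System.arraycopy(nv21, 0, i420, 0, ySize);
        for (int i = 0; i < chromaSize; i++) {
            i420[ySize + i] = nv21[ySize + 2 * i + 1];          // U comes second in each NV21 pair
            i420[ySize + chromaSize + i] = nv21[ySize + 2 * i]; // V comes first in each NV21 pair
        }
        return i420;
    }

    public static void main(String[] args) {
        byte[] frame = new byte[640 * 480 * 3 / 2];
        System.out.println(nv21ToI420(frame, 640, 480).length);
    }
}
```

Doing this per frame in Java allocates a new array each time; a real recorder would probably reuse buffers or convert natively, which is outside the scope of this sketch.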

Stopping and releasing:

@Override

public void releaseAllCamera() {

cameraHelper.stop();

cameraHelper.release();

}

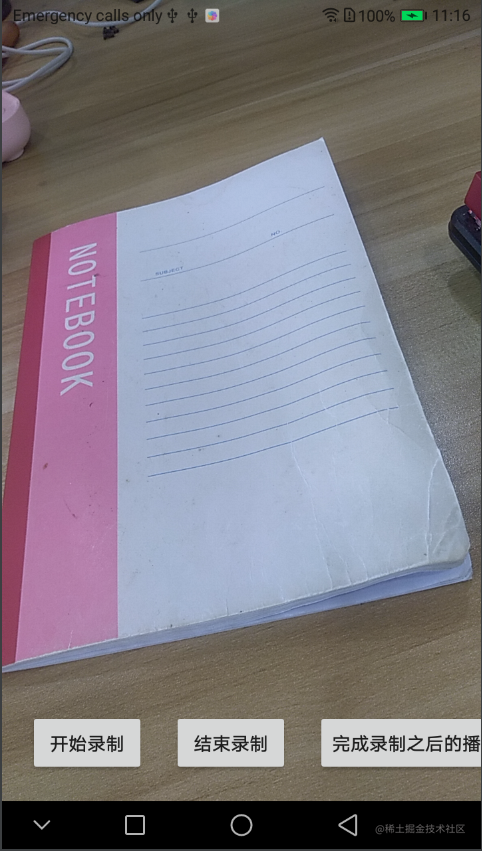

效果:

细啊,真的是太细了,代码太详细了。

3. Using and Wrapping Camera2

Camera2 is obtained through a system service, so using it involves more steps.

Here we use a TextureView to host the preview:

public View initCamera(Context context) {

mTextureView = new TextureView(context);

mContext = context;

mTextureView.setLayoutParams(new ViewGroup.LayoutParams(720, 1280));

mTextureView.setSurfaceTextureListener(mSurfaceTextureListener);

return mTextureView;

}

Following the same logic, we start Camera2 from the TextureView callback:

private TextureView.SurfaceTextureListener mSurfaceTextureListener = new TextureView.SurfaceTextureListener() {

@Override

public void onSurfaceTextureAvailable(SurfaceTexture texture, int width, int height) {

// Open the camera once the TextureView is available

YYLogUtils.w("TextureView available, width:" + width + " height:" + height);

openCamera(width, height);

}

@Override

public void onSurfaceTextureSizeChanged(SurfaceTexture texture, int width, int height) {

// Update the preview transform when the TextureView size changes

configureTransform(width, height);

}

@Override

public boolean onSurfaceTextureDestroyed(SurfaceTexture texture) {

// Release resources when the TextureView is destroyed

return true;

}

@Override

public void onSurfaceTextureUpdated(SurfaceTexture texture) {

// Called whenever the texture is updated

}

};

private void openCamera(int width, int height) {

// Get the CameraManager

mCameraManager = (CameraManager) mContext.getSystemService(Context.CAMERA_SERVICE);

// Create a dedicated background thread and Handler

HandlerThread handlerThread = new HandlerThread("Camera2Manager");

handlerThread.start();

mBgHandler = new Handler(handlerThread.getLooper());

try {

// Query the camera characteristics and store them

getCameraListCameraCharacteristics(width,height);

YYLogUtils.w("Opening camera id:" + mCurrentCameraId);

// Open the camera

mCameraManager.openCamera(mCurrentCameraId, new CameraDevice.StateCallback() {

@Override

public void onOpened(CameraDevice cameraDevice) {

// Camera opened: create the preview session

mCameraDevice = cameraDevice;

createCameraPreviewSession(mBgHandler, mTextureView.getWidth(), mTextureView.getHeight());

}

@Override

public void onDisconnected(CameraDevice cameraDevice) {

if (mCameraDevice != null) {

mCameraDevice.close();

mCameraDevice = null;

}

}

@Override

public void onError(CameraDevice cameraDevice, int error) {

if (mCameraDevice != null) {

mCameraDevice.close();

mCameraDevice = null;

}

}

}, mBgHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

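Note that mBgHandler here runs on the HandlerThread created at the top of openCamera rather than on the main thread, which is the better choice for Camera2 callbacks. This snippet is for demonstration only: it starts a new thread on every call and never quits it (the helper classes later wrap the thread in a reusable message loop).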

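Moving on: once the camera is open we need to create a preview session, create the preview Surface, and issue the preview request from the session callback: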

/**

* Creates the camera preview session

*/

private void createCameraPreviewSession(Handler handler, int width, int height) {

try {

// Get the SurfaceTexture and set its default buffer size

SurfaceTexture texture = mTextureView.getSurfaceTexture();

texture.setDefaultBufferSize(mTextureView.getWidth(), mTextureView.getHeight());

// Create the preview Surface

Surface surface = new Surface(texture);

// Create the CaptureRequest.Builder and add the preview Surface as a target

mPreviewRequestBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

mPreviewRequestBuilder.addTarget(surface);

// Create the ImageReader and set its callback

YYLogUtils.w("Creating ImageReader and setting callback, width:" + width + " height:" + height);

// mImageReader = ImageReader.newInstance(mTextureView.getWidth(), mTextureView.getHeight(), ImageFormat.JPEG, 1);

mImageReader = ImageReader.newInstance(width, height, ImageFormat.YUV_420_888, 2);

mImageReader.setOnImageAvailableListener(mOnImageAvailableListener, handler);

// Add the ImageReader's Surface to the CaptureRequest.Builder

Surface readerSurface = mImageReader.getSurface();

mPreviewRequestBuilder.addTarget(readerSurface);

// Create the preview session

mCameraDevice.createCaptureSession(Arrays.asList(surface, readerSurface), mSessionCallback, handler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

private CameraCaptureSession.StateCallback mSessionCallback = new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(@NonNull CameraCaptureSession cameraCaptureSession) {

// The preview session is configured; start the preview

mPreviewSession = cameraCaptureSession;

updatePreview();

}

@Override

public void onConfigureFailed(@NonNull CameraCaptureSession cameraCaptureSession) {

ToastUtils.INSTANCE.makeText(mContext, "Failed to create camera preview session");

}

};

private void updatePreview() {

try {

// Set the autofocus mode

mPreviewRequestBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

// Build the preview request

mPreviewRequest = mPreviewRequestBuilder.build();

// Submit the repeating preview request

mPreviewSession.setRepeatingRequest(mPreviewRequest, null, null);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

private ImageReader.OnImageAvailableListener mOnImageAvailableListener = new ImageReader.OnImageAvailableListener() {

@Override

public void onImageAvailable(ImageReader reader) {

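// YUV_420_888 frame (may be null); copying only the Y plane is an assumption, since saveImage is not shown

Image image = reader.acquireLatestImage();

if (image == null) return;

java.nio.ByteBuffer buffer = image.getPlanes()[0].getBuffer();

byte[] bytes = new byte[buffer.remaining()];

buffer.get(bytes);

saveImage(bytes);

image.close();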

}

};
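The frames that reach the ImageReader callback above are YUV_420_888, which exposes three planes (Y, U, V), each with its own row stride and, for the chroma planes, a pixel stride. Code that wants a packed NV21 buffer, like the one Camera1 delivers, has to honor those strides. The sketch below shows the idea using plain byte arrays in place of the Image.Plane buffers (an assumption so that it runs off-device); Yuv420Sketch and toNv21 are hypothetical names, and even dimensions are assumed.

```java
/** Sketch: pack strided Y/U/V planes (as exposed by YUV_420_888) into a tight NV21 buffer. */
final class Yuv420Sketch {

    static byte[] toNv21(byte[] y, int yRowStride,
                         byte[] u, byte[] v, int uvRowStride, int uvPixelStride,
                         int width, int height) {
        byte[] out = new byte[width * height * 3 / 2];
        int pos = 0;
        // Copy the luma rows one by one, skipping any padding after each row.
        for (int row = 0; row < height; row++) {
            System.arraycopy(y, row * yRowStride, out, pos, width);
            pos += width;
        }
        // Chroma is subsampled 2x2; NV21 stores V first, then U, for each sample.
        for (int row = 0; row < height / 2; row++) {
            for (int col = 0; col < width / 2; col++) {
                int idx = row * uvRowStride + col * uvPixelStride;
                out[pos++] = v[idx];
                out[pos++] = u[idx];
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // 2x2 frame whose Y rows are padded to 4 bytes and whose chroma has pixel stride 2.
        byte[] y = {1, 2, 0, 0, 3, 4, 0, 0};
        byte[] nv21 = toNv21(y, 4, new byte[]{5, 0}, new byte[]{6, 0}, 2, 2, 2, 2);
        System.out.println(java.util.Arrays.toString(nv21));
    }
}
```

On a device, the strides would be read from Image.Plane.getRowStride() and getPixelStride(), and the arrays would be filled from each plane's ByteBuffer.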

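As you can see, basic Camera2 usage is considerably more complex than Camera1, and this still omits the code for rotation, preview orientation, best-size selection, and so on (included in the helper classes later). No wonder Google came out with CameraX; apparently even they could not stand it.

Of course, once it is wrapped, it is easy to use. Here are my helper classes.

They are provided as separate variants: a basic preview, an AllSize cropping mode, and an ultimate mode that adds ImageReader frame callbacks.

I really cannot post everything here or there would be too much code; see the source linked at the bottom of the article. What follows is the key code:

Because many Camera2 operations are best run on a background thread, we first define the background message loop and its Handler: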

public abstract class BaseMessageLoop {

private volatile MsgHandlerThread mHandlerThread;

private volatile Handler mHandler;

private String mName;

public BaseMessageLoop(Context context, String name) {

mName = name;

}

public MsgHandlerThread getHandlerThread() {

return mHandlerThread;

}

public Handler getHandler() {

return mHandler;

}

public void Run() {

Quit();

//LogUtil.v(TAG, mName + " HandlerThread Run");

synchronized (this) {

mHandlerThread = new MsgHandlerThread(mName);

mHandlerThread.start();

mHandler = new Handler(mHandlerThread.getLooper(), mHandlerThread);

}

}

public void Quit() {

//LogUtil.v(TAG, mName + " HandlerThread Quit");

synchronized (this) {

if (mHandlerThread != null) {

mHandlerThread.quit();

}

if (mHandler != null) {

mHandler.removeCallbacks(mHandlerThread);

}

mHandlerThread = null;

mHandler = null;

}

}

...

}

The basic preview provider:

public class BaseCommonCameraProvider extends BaseCameraProvider {

protected Activity mContext;

protected String mCameraId;

protected Handler mCameraHandler;

private final BaseMessageLoop mThread;

protected CameraDevice mCameraDevice;

protected CameraCaptureSession session;

protected AspectTextureView[] mTextureViews;

protected CameraManager cameraManager;

protected OnCameraInfoListener mCameraInfoListener;

public interface OnCameraInfoListener {

void getBestSize(Size outputSizes);

void onFrameCannback(Image image);

void initEncode();

void onSurfaceTextureAvailable(SurfaceTexture surfaceTexture, int width, int height);

}

public void setCameraInfoListener(OnCameraInfoListener cameraInfoListener) {

this.mCameraInfoListener = cameraInfoListener;

}

protected BaseCommonCameraProvider(Activity mContext) {

this.mContext = mContext;

mThread = new BaseMessageLoop(mContext, "camera") {

@Override

protected boolean recvHandleMessage(Message msg) {

return false;

}

};

mThread.Run();

mCameraHandler = mThread.getHandler();

cameraManager = (CameraManager) mContext.getSystemService(Context.CAMERA_SERVICE);

}

protected String getCameraId(boolean useFront) {

try {

for (String cameraId : cameraManager.getCameraIdList()) {

CameraCharacteristics characteristics = cameraManager.getCameraCharacteristics(cameraId);

int cameraFacing = characteristics.get(CameraCharacteristics.LENS_FACING);

if (useFront) {

if (cameraFacing == CameraCharacteristics.LENS_FACING_FRONT) {

return cameraId;

}

} else {

if (cameraFacing == CameraCharacteristics.LENS_FACING_BACK) {

return cameraId;

}

}

}

} catch (CameraAccessException e) {

e.printStackTrace();

}

return null;

}

protected Size getCameraBestOutputSizes(String cameraId, Class clz) {

try {

// Get all supported sizes, sorted from largest to smallest

CameraCharacteristics characteristics = cameraManager.getCameraCharacteristics(cameraId);

StreamConfigurationMap configs = characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

List<Size> sizes = Arrays.asList(configs.getOutputSizes(clz));

Collections.sort(sizes, new Comparator<Size>() {

@Override

public int compare(Size o1, Size o2) {

return o1.getWidth() * o1.getHeight() - o2.getWidth() * o2.getHeight();

}

});

Collections.reverse(sizes);

YYLogUtils.w("all_sizes:" + sizes);

// Drop preview sizes that are smaller than the view

List<Size> suitableSizes = new ArrayList<>();

for (int i = 0; i < sizes.size(); i++) {

Size option = sizes.get(i);

if (textureViewSize.getWidth() > textureViewSize.getHeight()) {

if (option.getWidth() >= textureViewSize.getWidth() && option.getHeight() >= textureViewSize.getHeight()) {

suitableSizes.add(option);

}

} else {

if (option.getWidth() >= textureViewSize.getHeight() && option.getHeight() >= textureViewSize.getWidth()) {

suitableSizes.add(option);

}

}

}

YYLogUtils.w("suitableSizes:" + suitableSizes);

// Take the smallest size that still covers the view

if (!suitableSizes.isEmpty()) {

return suitableSizes.get(suitableSizes.size() - 1);

} else {

// Nothing suitable: fall back to the largest supported size

return sizes.get(0);

}

} catch (CameraAccessException e) {

e.printStackTrace();

}

return null;

}

protected List<Size> getCameraAllSizes(String cameraId, int format) {

try {

CameraCharacteristics characteristics = cameraManager.getCameraCharacteristics(cameraId);

StreamConfigurationMap configs = characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

return Arrays.asList(configs.getOutputSizes(format));

} catch (CameraAccessException e) {

e.printStackTrace();

}

return null;

}

protected void releaseCameraDevice(CameraDevice cameraDevice) {

if (cameraDevice != null) {

cameraDevice.close();

cameraDevice = null;

}

}

protected void releaseCameraSession(CameraCaptureSession session) {

if (session != null) {

session.close();

session = null;

}

}

protected void releaseCamera() {

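// Close the session before the device, and clear the fields here:

// assigning null to the parameter inside the helpers does not affect these fields

releaseCameraSession(session);

session = null;

releaseCameraDevice(mCameraDevice);

mCameraDevice = null;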

}

}

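Some of the internal listeners and callbacks are factored out here, and choosing the front or back camera, selecting among the supported sizes, and so on are implemented internally, so a preview can be set up with little effort.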
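The size selection in getCameraBestOutputSizes boils down to: keep the supported sizes that cover the view, then take the smallest of them, falling back to the largest supported size when none qualifies. Here is a minimal sketch of that rule with {width, height} int pairs standing in for android.util.Size (an assumption so that it runs off-device); OutputSizeSketch and pick are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Sketch: smallest supported size that still covers the view, else the largest supported size. */
final class OutputSizeSketch {

    static int[] pick(List<int[]> supported, int viewWidth, int viewHeight) {
        if (supported.isEmpty()) {
            throw new IllegalArgumentException("no supported sizes");
        }
        // Sensor sizes are reported in landscape, so compare against the view's long/short sides.
        int longSide = Math.max(viewWidth, viewHeight);
        int shortSide = Math.min(viewWidth, viewHeight);
        List<int[]> sorted = new ArrayList<>(supported);
        // Ascending by area; long arithmetic avoids overflow for very large sizes.
        sorted.sort(Comparator.comparingLong((int[] s) -> (long) s[0] * s[1]));
        for (int[] s : sorted) {
            if (s[0] >= longSide && s[1] >= shortSide) {
                return s; // first match in ascending order is the smallest suitable size
            }
        }
        return sorted.get(sorted.size() - 1); // nothing covers the view: use the largest
    }

    public static void main(String[] args) {
        List<int[]> sizes = Arrays.asList(
                new int[]{640, 480}, new int[]{1920, 1080}, new int[]{1280, 720});
        System.out.println(Arrays.toString(pick(sizes, 720, 1280)));
    }
}
```

Choosing the smallest covering size keeps buffer memory and bandwidth down while still avoiding upscaling in the preview.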

What we commonly use is a TextureView that can crop and stretch, so here is a provider dedicated to previewing with it:

/**

* Preview only, without frame callbacks

*/

public class Camera2AllSizeProvider extends BaseCommonCameraProvider {

private CaptureRequest.Builder mPreviewBuilder;

private Size outputSize;

public Camera2AllSizeProvider(Activity mContext) {

super(mContext);

Point displaySize = new Point();

mContext.getWindowManager().getDefaultDisplay().getSize(displaySize);

screenSize = new Size(displaySize.x, displaySize.y);

}

private void initCamera() {

mCameraId = getCameraId(false); // use the back camera by default

// Pick the best output size for the selected camera

outputSize = getCameraBestOutputSizes(mCameraId, SurfaceTexture.class);

// Initialize the preview size

previewSize = outputSize;

YYLogUtils.w("previewSize,width:" + previewSize.getWidth() + "height:" + previewSize.getHeight());

if (mCameraInfoListener != null) {

mCameraInfoListener.getBestSize(outputSize);

}

}

int index = 0;

/**

* Attaches and initializes the TextureViews

*/

public void initTexture(AspectTextureView... textureViews) {

mTextureViews = textureViews;

int size = textureViews.length;

for (AspectTextureView aspectTextureView : textureViews) {

aspectTextureView.setSurfaceTextureListener(new Camera2SimpleInterface.SimpleSurfaceTextureListener() {

@Override

public void onSurfaceTextureAvailable(SurfaceTexture surface, int width, int height) {

textureViewSize = new Size(width, height);

YYLogUtils.w("textureViewSize,width:" + textureViewSize.getWidth() + "height:" + textureViewSize.getHeight());

YYLogUtils.w("screenSize,width:" + screenSize.getWidth() + "height:" + screenSize.getHeight());

if (++index == size) {

initCamera();

openCamera();

}

}

});

}

}

@SuppressLint("MissingPermission")

private void openCamera() {

try {

cameraManager.openCamera(mCameraId, mStateCallback, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

private final CameraDevice.StateCallback mStateCallback = new Camera2SimpleInterface.SimpleCameraDeviceStateCallback() {

@Override

public void onOpened(CameraDevice camera) {

mCameraDevice = camera;

MediaCodecList allMediaCodecLists = new MediaCodecList(MediaCodecList.ALL_CODECS);

MediaCodecInfo avcCodecInfo = null;

for (MediaCodecInfo mediaCodecInfo : allMediaCodecLists.getCodecInfos()) {

if (mediaCodecInfo.isEncoder()) {

String[] supportTypes = mediaCodecInfo.getSupportedTypes();

for (String supportType : supportTypes) {

if (supportType.equals(MediaFormat.MIMETYPE_VIDEO_AVC)) {

avcCodecInfo = mediaCodecInfo;

Log.d("TAG", "Encoder name: " + mediaCodecInfo.getName() + " " + supportType);

MediaCodecInfo.CodecCapabilities codecCapabilities = avcCodecInfo.getCapabilitiesForType(MediaFormat.MIMETYPE_VIDEO_AVC);

int[] colorFormats = codecCapabilities.colorFormats;

for (int colorFormat : colorFormats) {

switch (colorFormat) {

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV411Planar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV411PackedPlanar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Planar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420PackedPlanar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420SemiPlanar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420PackedSemiPlanar:

Log.d("TAG", "Supported color format: " + colorFormat);

break;

}

}

}

}

}

}

// Start the preview with the chosen size

startPreviewSession(previewSize);

}

};

public void startPreviewSession(Size size) {

YYLogUtils.w("startPreviewSession actual size, width:" + size.getWidth() + " height:" + size.getHeight());

try {

releaseCameraSession(session);

mPreviewBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

List<Surface> outputs = new ArrayList<>();

for (AspectTextureView aspectTextureView : mTextureViews) {

// Set the preview size and the scale (crop) mode used for display

aspectTextureView.setScaleType(AspectInterface.ScaleType.FIT_CENTER);

aspectTextureView.setSize(size.getHeight(), size.getWidth());

SurfaceTexture surfaceTexture = aspectTextureView.getSurfaceTexture();

surfaceTexture.setDefaultBufferSize(size.getWidth(), size.getHeight());

Surface previewSurface = new Surface(surfaceTexture);

mPreviewBuilder.addTarget(previewSurface);

outputs.add(previewSurface);

}

mCameraDevice.createCaptureSession(outputs, mStateCallBack, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

private final CameraCaptureSession.StateCallback mStateCallBack = new Camera2SimpleInterface.SimpleStateCallback() {

@Override

public void onConfigured(CameraCaptureSession session) {

try {

Camera2AllSizeProvider.this.session = session;

// Enable continuous autofocus ahead of capture

mPreviewBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

CaptureRequest request = mPreviewBuilder.build();

session.setRepeatingRequest(request, null, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

};

public void closeCamera() {

releaseCamera();

if (mCameraDevice != null) {

mCameraDevice.close();

}

}

}

For the preview frame callback, we can provide yet another dedicated variant:

/**

* Adds ImageReader frame callbacks on top of the preview, e.g. for encoding an H.264 video stream

*/

public class Camera2ImageReaderProvider extends BaseCommonCameraProvider {

private CaptureRequest.Builder mPreviewBuilder;

protected ImageReader mImageReader;

private Size outputSize;

public Camera2ImageReaderProvider(Activity mContext) {

super(mContext);

Point displaySize = new Point();

mContext.getWindowManager().getDefaultDisplay().getSize(displaySize);

screenSize = new Size(displaySize.x, displaySize.y);

YYLogUtils.w("screenSize,width:" + screenSize.getWidth() + "height:" + screenSize.getHeight(), "Camera2ImageReaderProvider");

}

private void initCamera() {

mCameraId = getCameraId(false); // use the back camera by default

// Get the best output size for the given camera from its supported sizes (sorted in descending order)

outputSize = getCameraBestOutputSizes(mCameraId, SurfaceTexture.class);

// Initialize the preview size

previewSize = outputSize;

YYLogUtils.w("previewSize,width:" + previewSize.getWidth() + "height:" + previewSize.getHeight(), "Camera2ImageReaderProvider");

if (mCameraInfoListener != null) {

mCameraInfoListener.getBestSize(outputSize);

}

}

int index = 0;

/**

* Attach and initialize the TextureViews

*/

public void initTexture(AspectTextureView... textureViews) {

mTextureViews = textureViews;

int size = textureViews.length;

for (AspectTextureView aspectTextureView : textureViews) {

aspectTextureView.setSurfaceTextureListener(new Camera2SimpleInterface.SimpleSurfaceTextureListener() {

@Override

public void onSurfaceTextureAvailable(SurfaceTexture surfaceTexture, int width, int height) {

textureViewSize = new Size(width, height);

YYLogUtils.w("textureViewSize,width:" + textureViewSize.getWidth() + "height:" + textureViewSize.getHeight(), "Camera2ImageReaderProvider");

if (mCameraInfoListener != null) {

mCameraInfoListener.onSurfaceTextureAvailable(surfaceTexture, width, height);

}

if (++index == size) {

initCamera();

openCamera();

}

}

});

}

}

// Initialize the encoding format

public void initEncord() {

if (mCameraInfoListener != null) {

mCameraInfoListener.initEncode();

}

}

@SuppressLint("MissingPermission")

private void openCamera() {

try {

cameraManager.openCamera(mCameraId, mStateCallback, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

private final CameraDevice.StateCallback mStateCallback = new Camera2SimpleInterface.SimpleCameraDeviceStateCallback() {

@Override

public void onOpened(CameraDevice camera) {

mCameraDevice = camera;

initEncord();

MediaCodecList allMediaCodecLists = new MediaCodecList(MediaCodecList.ALL_CODECS);

MediaCodecInfo avcCodecInfo = null;

for (MediaCodecInfo mediaCodecInfo : allMediaCodecLists.getCodecInfos()) {

if (mediaCodecInfo.isEncoder()) {

String[] supportTypes = mediaCodecInfo.getSupportedTypes();

for (String supportType : supportTypes) {

if (supportType.equals(MediaFormat.MIMETYPE_VIDEO_AVC)) {

avcCodecInfo = mediaCodecInfo;

Log.d("TAG", "Encoder name: " + mediaCodecInfo.getName() + " " + supportType);

MediaCodecInfo.CodecCapabilities codecCapabilities = avcCodecInfo.getCapabilitiesForType(MediaFormat.MIMETYPE_VIDEO_AVC);

int[] colorFormats = codecCapabilities.colorFormats;

for (int colorFormat : colorFormats) {

switch (colorFormat) {

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV411Planar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV411PackedPlanar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Planar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420PackedPlanar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420SemiPlanar:

case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420PackedSemiPlanar:

Log.d("TAG", "Supported color format: " + colorFormat);

break;

}

}

}

}

}

}

// Start the preview with the chosen preview size

startPreviewSession(previewSize);

}

};

public void startPreviewSession(Size size) {

YYLogUtils.w("Actual preview size, width:" + size.getWidth() + " height:" + size.getHeight(), "Camera2ImageReaderProvider");

try {

releaseCameraSession(session);

mPreviewBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

List<Surface> outputs = new ArrayList<>();

for (AspectTextureView aspectTextureView : mTextureViews) {

// Set the preview size and the scale (crop) mode used for display

aspectTextureView.setScaleType(AspectInterface.ScaleType.FIT_CENTER);

aspectTextureView.setSize(size.getHeight(), size.getWidth());

SurfaceTexture surfaceTexture = aspectTextureView.getSurfaceTexture();

surfaceTexture.setDefaultBufferSize(size.getWidth(), size.getHeight());

Surface previewSurface = new Surface(surfaceTexture);

mPreviewBuilder.addTarget(previewSurface);

outputs.add(previewSurface);

}

// ImageReader that delivers each preview frame to the callback

mImageReader = ImageReader.newInstance(size.getWidth(), size.getHeight(), ImageFormat.YUV_420_888, 10);

mImageReader.setOnImageAvailableListener(mOnImageAvailableListener, mCameraHandler);

Surface readerSurface = mImageReader.getSurface();

mPreviewBuilder.addTarget(readerSurface);

outputs.add(readerSurface);

mCameraDevice.createCaptureSession(outputs, mStateCallBack, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

private ImageReader.OnImageAvailableListener mOnImageAvailableListener = new ImageReader.OnImageAvailableListener() {

@Override

public void onImageAvailable(ImageReader reader) {

Image image = reader.acquireLatestImage();

if (image == null) {

return;

}

try {

if (mCameraInfoListener != null) {

mCameraInfoListener.onFrameCannback(image);

}

} finally {

// Always close the Image so the ImageReader can reuse its buffer

image.close();

}

}

};

private final CameraCaptureSession.StateCallback mStateCallBack = new Camera2SimpleInterface.SimpleStateCallback() {

@Override

public void onConfigured(CameraCaptureSession session) {

try {

Camera2ImageReaderProvider.this.session = session;

// Enable continuous auto-focus before capture

mPreviewBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

// mPreviewBuilder.set(CaptureRequest.JPEG_ORIENTATION, 90);

CaptureRequest request = mPreviewBuilder.build();

session.setRepeatingRequest(request, null, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

};

public void closeCamera() {

releaseCamera();

if (mImageReader != null) {

mImageReader.close();

}

if (mCameraDevice != null) {

mCameraDevice.close();

}

}

}

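Regarding Camera2 sizes, there are really three sizes to understand: the current screen size, the current TextureView size, and the current preview size.

The two that are easiest to confuse are the TextureView size and the preview size. The former is the size of the display widget; the latter is a preview size supported by the camera. They may be equal, but more often they differ, and their aspect ratios may differ as well, which is why we need center-crop or fit-center to display the preview. The code above has detailed comments on how these three sizes are defined and used.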
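To make the difference between fit-center and center-crop concrete, here is a minimal sketch in plain Java. It is not the AspectTextureView implementation from the source; the class and method names are hypothetical. It only shows the arithmetic: fit-center uses the smaller of the two scale factors, and center-crop uses the larger one. The frame size is assumed to be already swapped to portrait, in the same way the code above passes `size.getHeight(), size.getWidth()` to `setSize`.

```java
public class PreviewScaleSketch {

    /**
     * Returns {scaledWidth, scaledHeight}: the size the camera frame occupies
     * after scaling it into the view with fit-center or center-crop.
     */
    public static float[] scaledSize(int viewW, int viewH, int frameW, int frameH, boolean centerCrop) {
        float scaleX = (float) viewW / frameW;
        float scaleY = (float) viewH / frameH;
        // fit-center: the whole frame stays visible, so take the smaller factor.
        // center-crop: the view is completely filled, so take the larger factor.
        float scale = centerCrop ? Math.max(scaleX, scaleY) : Math.min(scaleX, scaleY);
        return new float[] { frameW * scale, frameH * scale };
    }

    public static void main(String[] args) {
        // A 1080x2400 view showing a 1920x1080 camera size in portrait (1080x1920).
        float[] fit = scaledSize(1080, 2400, 1080, 1920, false);
        float[] crop = scaledSize(1080, 2400, 1080, 1920, true);
        System.out.println("fit-center:  " + fit[0] + " x " + fit[1]);   // 1080.0 x 1920.0
        System.out.println("center-crop: " + crop[0] + " x " + crop[1]); // 1350.0 x 2400.0
    }
}
```

With fit-center the view keeps empty bars above and below the frame. With center-crop the frame is wider than the view and the overflow on the left and right is cut off.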

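The code above also omits some non-essential classes and callbacks; if you are interested, see the source code (link at the end of this article).

How to use it: with the definitions in place, here is a simple usage example.

We take H.264 encoding as the example: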

fun setupCamera(activity: Activity, container: ViewGroup) {

file = File(CommUtils.getContext().externalCacheDir, "${System.currentTimeMillis()}-record.h264")

if (!file.exists()) {

file.createNewFile()

}

if (!file.isDirectory) {

outputStream = FileOutputStream(file, true)

}

val textureView = AspectTextureView(activity)

textureView.layoutParams = ViewGroup.LayoutParams(ViewGroup.LayoutParams.MATCH_PARENT, ViewGroup.LayoutParams.MATCH_PARENT)

mCamera2Provider = Camera2ImageReaderProvider(activity)

mCamera2Provider?.initTexture(textureView)

mCamera2Provider?.setCameraInfoListener(object :

BaseCommonCameraProvider.OnCameraInfoListener {

override fun getBestSize(outputSizes: Size?) {

mPreviewSize = outputSizes

}

override fun onFrameCannback(image: Image) {

if (isRecording) {

// Use the C library to get I420 data, corresponding to COLOR_FormatYUV420Planar

val yuvFrame = yuvUtils.convertToI420(image)

// Rotate so the width and height match those configured in the encoder's MediaFormat

val yuvFrameRotate = yuvUtils.rotate(yuvFrame, 90)

// RGB bitmap callback, used to test the preview

bitmap = Bitmap.createBitmap(yuvFrameRotate.width, yuvFrameRotate.height, Bitmap.Config.ARGB_8888)

yuvUtils.yuv420ToArgb(yuvFrameRotate, bitmap!!)

mBitmapCallback?.invoke(bitmap)

// Add the I420 data, rotated by 90 degrees, to the synchronized queue

val bytesFromImageAsType = yuvFrameRotate.asArray()

// Alternatively, use the Java utility class to convert the Image to NV21, corresponding to COLOR_FormatYUV420Flexible

// val bytesFromImageAsType = getBytesFromImageAsType(image, Camera2ImageUtils.YUV420SP)

originVideoDataList.offer(bytesFromImageAsType)

}

}

override fun initEncode() {

mediaCodecEncodeToH264()

}

override fun onSurfaceTextureAvailable(surfaceTexture: SurfaceTexture?, width: Int, height: Int) {

this@VideoH264RecoderUtils.surfaceTexture = surfaceTexture

}

})

container.addView(textureView)

}

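Because the ImageReader here returns frames in the YUV_420_888 format, we need to convert them to I420 or NV21. The code shows both routes: the C library converts to I420 (YUV420P), and the Java utility converts to NV21 (YUV420SP).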
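One reason a conversion step is needed is that each YUV_420_888 plane may carry row padding (row stride larger than the width) and interleaved bytes (pixel stride larger than 1). The following is a minimal sketch in plain Java of packing one plane into a tight array. It assumes the plane bytes have already been copied into a `byte[]`; on Android they would come from `Image.Plane.getBuffer()`, with the strides from `getRowStride()` and `getPixelStride()`. The class and method names are hypothetical and this is not the yuvUtils code used in the article.

```java
public class PlanePackSketch {

    /**
     * Copies one image plane into a tightly packed array of width * height bytes,
     * skipping row padding (rowStride > width) and interleaved bytes (pixelStride > 1).
     */
    public static byte[] packPlane(byte[] src, int width, int height, int rowStride, int pixelStride) {
        byte[] out = new byte[width * height];
        int outIndex = 0;
        for (int row = 0; row < height; row++) {
            int rowStart = row * rowStride;
            for (int col = 0; col < width; col++) {
                out[outIndex++] = src[rowStart + col * pixelStride];
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // A 2x2 chroma plane with pixelStride 2 (interleaved) and rowStride 6 (padded).
        byte[] src = {1, 9, 2, 9, 0, 0, 3, 9, 4, 9, 0, 0};
        byte[] packed = packPlane(src, 2, 2, 6, 2);
        System.out.println(java.util.Arrays.toString(packed)); // [1, 2, 3, 4]
    }
}
```

Packing the Y plane at full size and the U and V planes at half width and half height, then concatenating them in that order, gives an I420 buffer.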

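We can then encode either of these two common formats, I420 or NV21, into an H.264 file. How to encode is not today's topic; there are simply too many ways to do it, so we skip it here.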
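The two formats hold the same samples in a different byte order: I420 stores the full Y plane, then all U samples, then all V samples, while NV21 stores the Y plane followed by interleaved V and U bytes. A minimal sketch in plain Java of converting one to the other is shown below. The class and method names are hypothetical, and it assumes an even width and height and a tightly packed input buffer of width * height * 3 / 2 bytes.

```java
public class Yuv420LayoutSketch {

    /** Rearranges a packed I420 frame (Y, U, V planes) into NV21 (Y plane, then V/U interleaved). */
    public static byte[] i420ToNv21(byte[] i420, int width, int height) {
        int ySize = width * height;
        int chromaSize = ySize / 4;          // each chroma plane is (width / 2) * (height / 2)
        byte[] nv21 = new byte[ySize + 2 * chromaSize];

        // The Y plane is identical in both layouts.
        System.arraycopy(i420, 0, nv21, 0, ySize);

        int uStart = ySize;                  // U plane follows Y in I420
        int vStart = ySize + chromaSize;     // V plane follows U in I420
        for (int i = 0; i < chromaSize; i++) {
            nv21[ySize + 2 * i] = i420[vStart + i];      // V first
            nv21[ySize + 2 * i + 1] = i420[uStart + i];  // then U
        }
        return nv21;
    }

    public static void main(String[] args) {
        // A 4x2 frame: 8 Y bytes, 2 U bytes (10, 11), 2 V bytes (20, 21).
        byte[] i420 = {0, 1, 2, 3, 4, 5, 6, 7, 10, 11, 20, 21};
        System.out.println(java.util.Arrays.toString(i420ToNv21(i420, 4, 2)));
        // [0, 1, 2, 3, 4, 5, 6, 7, 20, 10, 21, 11]
    }
}
```

For real frame sizes a native library such as libyuv is usually much faster than a Java loop like this; the sketch is only meant to show the layout.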

This single setupCamera method completes the binding; in the Activity, one line of code is enough to set it up.

class RecoderVideo1Activity : BaseActivity() {

override fun init() {

val flContainer = findViewById<FrameLayout>(R.id.fl_container)

val videoRecodeUtils = VideoH264RecoderUtils()

videoRecodeUtils.setupCamera(this, flContainer)

}

}

Result: (screenshot omitted)

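4. Using and Wrapping CameraX

Compared with the previous two APIs, CameraX is much simpler to use, and there are plenty of CameraX tutorials online, so we go through it quickly here.

We create a PreviewView and add it to the container we specify.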

public View initCamera(Context context) {

mPreviewView = new PreviewView(context);

mContext = context;

mPreviewView.setScaleType(PreviewView.ScaleType.FIT_CENTER);

mPreviewView.setLayoutParams(new ViewGroup.LayoutParams(ViewGroup.LayoutParams.MATCH_PARENT, ViewGroup.LayoutParams.MATCH_PARENT));

mPreviewView.getViewTreeObserver().addOnGlobalLayoutListener(new ViewTreeObserver.OnGlobalLayoutListener() {

@Override

public void onGlobalLayout() {

if (mPreviewView.isShown()) {

startCamera();

}

mPreviewView.getViewTreeObserver().removeOnGlobalLayoutListener(this);

}

});

return mPreviewView;

}

Next, we start the camera and bind it to the lifecycle.

private void startCamera() {

// Get the screen resolution

DisplayMetrics displayMetrics = new DisplayMetrics();

mPreviewView.getDisplay().getRealMetrics(displayMetrics);

// Compute the aspect ratio

int screenAspectRatio = aspectRatio(displayMetrics.widthPixels, displayMetrics.heightPixels);

int rotation = mPreviewView.getDisplay().getRotation();

ListenableFuture<ProcessCameraProvider> cameraProviderFuture = ProcessCameraProvider.getInstance(mContext);

cameraProviderFuture.addListener(() -> {

try {

// Get the camera provider

mCameraProvider = cameraProviderFuture.get();

// Choose the lens

mLensFacing = getLensFacing();

mCameraSelector = new CameraSelector.Builder().requireLensFacing(mLensFacing).build();

// Preview use case

Preview.Builder previewBuilder = new Preview.Builder()

.setTargetAspectRatio(screenAspectRatio)

.setTargetRotation(rotation);

Preview preview = previewBuilder.build();

preview.setSurfaceProvider(mPreviewView.getSurfaceProvider());

// Video capture use case

mVideoCapture = new VideoCapture.Builder()

.setTargetAspectRatio(screenAspectRatio) // target aspect ratio

.setAudioRecordSource(MediaRecorder.AudioSource.MIC) // use the microphone as the audio source

.setTargetRotation(rotation)

// video frame rate

.setVideoFrameRate(30)

// bit rate

.setBitRate(3 * 1024 * 1024)

.build();

// ImageAnalysis imageAnalysis = new ImageAnalysis.Builder()

// .setTargetAspectRatio(screenAspectRatio)

// .setTargetRotation(rotation)

// .setBackpressureStrategy(ImageAnalysis.STRATEGY_KEEP_ONLY_LATEST)

// .build();

//

// // Apply a color matrix to each frame

// imageAnalysis.setAnalyzer(Executors.newSingleThreadExecutor(), new MyAnalyzer(mContext));

// Start CameraX

mCameraProvider.unbindAll();

if (mContext instanceof FragmentActivity) {

FragmentActivity fragmentActivity = (FragmentActivity) mContext;

mCameraProvider.bindToLifecycle(fragmentActivity, mCameraSelector, preview, mVideoCapture/*,imageAnalysis*/);

}

} catch (ExecutionException | InterruptedException e) {

e.printStackTrace();

}

}, ContextCompat.getMainExecutor(mContext));

}

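CameraX offers four use cases: preview, image capture, video capture, and image analysis. Here we bind preview and video capture and leave the analysis use case commented out, because binding video capture and analysis together is not supported on every device.

To record video, we can call the video capture use case directly, which is the simplest way.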

public void startCameraRecord() {

if (mVideoCapture == null) return;

VideoCapture.OutputFileOptions outputFileOptions = new VideoCapture.OutputFileOptions.Builder(getOutFile()).build();

mVideoCapture.startRecording(outputFileOptions, mExecutorService, new VideoCapture.OnVideoSavedCallback() {

@Override

public void onVideoSaved(@NonNull VideoCapture.OutputFileResults outputFileResults) {

YYLogUtils.w("Video saved, outputFileResults:" + outputFileResults.getSavedUri());

if (mCameraCallback != null) mCameraCallback.takeSuccess();

}

@Override

public void onError(int videoCaptureError, @NonNull String message, @Nullable Throwable cause) {

YYLogUtils.e(message);

}

});

}

Some internal code, such as lens selection and aspect-ratio selection, is omitted here; it is given in the utility class below.
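For the Java snippet above, the omitted aspect-ratio helper could look like the following minimal sketch. To keep it runnable without the CameraX dependency, it uses two local int constants as stand-ins for `AspectRatio.RATIO_4_3` and `AspectRatio.RATIO_16_9`; the class name is hypothetical. The idea is to compute the long-side to short-side ratio of the screen and pick whichever of 4:3 or 16:9 is closer.

```java
public class AspectRatioSketch {

    // Stand-ins for AspectRatio.RATIO_4_3 and AspectRatio.RATIO_16_9 (assumption: plain ints for this sketch).
    public static final int RATIO_4_3 = 0;
    public static final int RATIO_16_9 = 1;

    /** Picks 4:3 or 16:9, whichever is closer to the screen's long-side / short-side ratio. */
    public static int aspectRatio(int widthPixels, int heightPixels) {
        double ratio = (double) Math.max(widthPixels, heightPixels)
                / Math.min(widthPixels, heightPixels);
        if (Math.abs(ratio - 4.0 / 3.0) <= Math.abs(ratio - 16.0 / 9.0)) {
            return RATIO_4_3;
        }
        return RATIO_16_9;
    }

    public static void main(String[] args) {
        System.out.println(aspectRatio(1080, 1920)); // 1, i.e. 16:9
        System.out.println(aspectRatio(1536, 2048)); // 0, i.e. 4:3
    }
}
```

Taking max and min first makes the result independent of whether the device is currently in portrait or landscape.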

As you can see, CameraX is already simple enough to use, but since much of this code is repetitive, we can still wrap it. The code is as follows:

class CameraXController {

private var mCameraProvider: ProcessCameraProvider? = null

private var mLensFacing = 0

private var mCameraSelector: CameraSelector? = null

private var mVideoCapture: VideoCapture? = null

private var mCameraCallback: ICameraCallback? = null

private val mExecutorService = Executors.newSingleThreadExecutor()

// Initialize the CameraX configuration

fun setUpCamera(context: Context, surfaceProvider: Preview.SurfaceProvider) {

// Get the screen resolution and aspect ratio

val displayMetrics = context.resources.displayMetrics

val screenAspectRatio = aspectRatio(displayMetrics.widthPixels, displayMetrics.heightPixels)

val cameraProviderFuture = ProcessCameraProvider.getInstance(context)

cameraProviderFuture.addListener({

mCameraProvider = cameraProviderFuture.get()

// Choose the lens

mLensFacing = lensFacing

mCameraSelector = CameraSelector.Builder().requireLensFacing(mLensFacing).build()

// Preview use case

val preview: Preview = Preview.Builder()

.setTargetAspectRatio(screenAspectRatio)

.build()

preview.setSurfaceProvider(surfaceProvider)

// Video capture use case

mVideoCapture = VideoCapture.Builder()

.setTargetAspectRatio(screenAspectRatio)

.setAudioRecordSource(MediaRecorder.AudioSource.MIC) // use the microphone as the audio source

// video frame rate

.setVideoFrameRate(30)

// bit rate

.setBitRate(3 * 1024 * 1024)

.build()

// Bind to the lifecycle owner

mCameraProvider?.unbindAll()

val camera = mCameraProvider?.bindToLifecycle(

context as LifecycleOwner,

mCameraSelector!!,

mVideoCapture,

preview

)

val cameraInfo = camera?.cameraInfo

val cameraControl = camera?.cameraControl

}, ContextCompat.getMainExecutor(context))

}

// Choose a 4:3 or 16:9 preview ratio based on the screen aspect ratio

private fun aspectRatio(widthPixels: Int, heightPixels: Int): Int {

val previewRatio = Math.max(widthPixels, heightPixels).toDouble() / Math.min(widthPixels, heightPixels).toDouble()

return if (Math.abs(previewRatio - 4.0 / 3.0) <= Math.abs(previewRatio - 16.0 / 9.0)) {

AspectRatio.RATIO_4_3

} else {

AspectRatio.RATIO_16_9

}

}

// Which camera lens to prefer

private val lensFacing: Int

private get() {

if (hasBackCamera()) {

return CameraSelector.LENS_FACING_BACK

}

return if (hasFrontCamera()) {

CameraSelector.LENS_FACING_FRONT

} else -1

}

// Whether the device has a back camera

private fun hasBackCamera(): Boolean {

if (mCameraProvider == null) {

return false

}

try {

return mCameraProvider!!.hasCamera(CameraSelector.DEFAULT_BACK_CAMERA)

} catch (e: CameraInfoUnavailableException) {

e.printStackTrace()

}

return false

}

// Whether the device has a front camera

private fun hasFrontCamera(): Boolean {

if (mCameraProvider == null) {

return false

}

try {

return mCameraProvider!!.hasCamera(CameraSelector.DEFAULT_FRONT_CAMERA)

} catch (e: CameraInfoUnavailableException) {

e.printStackTrace()

}

return false

}

// Start recording

fun startCameraRecord(outFile: File) {

mVideoCapture ?: return

val outputFileOptions: VideoCapture.OutputFileOptions = VideoCapture.OutputFileOptions.Builder(outFile).build()

mVideoCapture!!.startRecording(outputFileOptions, mExecutorService, object : VideoCapture.OnVideoSavedCallback {

override fun onVideoSaved(outputFileResults: VideoCapture.OutputFileResults) {

YYLogUtils.w("Video saved, outputFileResults:" + outputFileResults.savedUri)

mCameraCallback?.takeSuccess()

}

override fun onError(videoCaptureError: Int, message: String, cause: Throwable?) {

YYLogUtils.e(message)

}

})

}

// Stop recording

fun stopCameraRecord(cameraCallback: ICameraCallback?) {

mCameraCallback = cameraCallback

mVideoCapture?.stopRecording()

}

// Release resources

fun releseAll() {

mVideoCapture?.stopRecording()

mExecutorService.shutdown()

mCameraProvider?.unbindAll()

mCameraProvider?.shutdown()

mCameraProvider = null

}

}

With the wrapped utility class, usage becomes even simpler:

override fun initCamera(context: Context): View {

mPreviewView = PreviewView(context)

mContext = context

mPreviewView.layoutParams = ViewGroup.LayoutParams(ViewGroup.LayoutParams.MATCH_PARENT, ViewGroup.LayoutParams.MATCH_PARENT)

mPreviewView.viewTreeObserver.addOnGlobalLayoutListener(object : OnGlobalLayoutListener {

override fun onGlobalLayout() {

if (mPreviewView.isShown) {

startCamera()

}

mPreviewView.viewTreeObserver.removeOnGlobalLayoutListener(this)

}

})

return mPreviewView

}

private fun startCamera() {

cameraXController.setUpCamera(mContext, mPreviewView.surfaceProvider)

}

To start recording video:

override fun startCameraRecord() {

cameraXController.startCameraRecord(outFile)

}

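CameraX also has cropping built in, so we no longer need a custom TextureView.

The fit-center and center-crop results are shown below: (screenshots omitted)

Great, it really is super simple to use. Love it.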

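Summary

This article gave sample code for the three common Camera APIs and wrapped each of them. Which one you use to implement your feature is up to you.

If I want NV21 data in the callback, I would choose Camera1, because it returns that format natively and no conversion is needed. If I want I420 in the callback, I would choose Camera2 or CameraX: a conversion is needed anyway, and they are more convenient, especially combined with the libyuv library, which makes the conversion more efficient.

What about recording video? For ordinary recording I would choose CameraX's built-in video capture, which is simpler and more convenient. For recording with effects, I would choose Camera2 or CameraX together with GLSurfaceView.

Personally I prefer CameraX. We are not camera specialists, and for simple preview and recording needs CameraX is well wrapped, simple to use, highly compatible, and cheap to adopt. (This is a personal and fairly subjective view.)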

Contributing author: Newki. Original link: https://juejin.cn/post/7252597901762625596
