Getting Started with Big Data: Hands-on Notes from a Spark + Kudu Advertising Business Project (Part 2)


1. Developing Feature 2

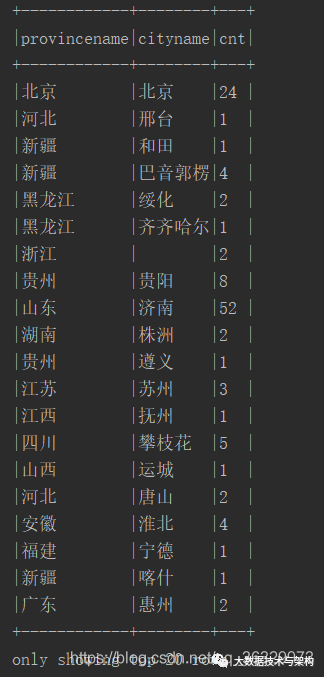

Count how records are distributed across provinces and cities by grouping on provincename and cityname.

```scala
package com.imooc.bigdata.cp08.business

import com.imooc.bigdata.cp08.utils.SQLUtils
import org.apache.spark.sql.SparkSession

object ProvinceCityStatApp {

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[2]")
      .appName("ProvinceCityStatApp")
      .getOrCreate()

    // Read the data from the Kudu ods table, then group by province and city
    val sourceTableName = "ods"
    val masterAddress = "hadoop000"

    val odsDF = spark.read.format("org.apache.kudu.spark.kudu")
      .option("kudu.table", sourceTableName)
      .option("kudu.master", masterAddress)
      .load()
    //odsDF.show(false)

    // Register the DataFrame so the SQL in SQLUtils can refer to it as "ods"
    odsDF.createOrReplaceTempView("ods")
    val result = spark.sql(SQLUtils.PROVINCE_CITY_SQL)
    result.show(false)

    spark.stop()
  }
}
```

The query itself lives in `SQLUtils`:

```scala
lazy val PROVINCE_CITY_SQL = "select provincename,cityname,count(1) as cnt from ods group by provincename,cityname"
```

The output is shown in the figure.
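To make the aggregation concrete, here is a minimal plain-Scala sketch of what the query computes, run on a few hypothetical rows rather than the real ods table (the object name, the `OdsRow` type and the sample values are made up for illustration). Grouping on the (provincename, cityname) pair and counting each group yields one row per province/city combination; Spark's `count` returns a bigint, which is why `cnt` is a 64-bit integer here and later in the Kudu schema.

```scala
// Illustrative sketch only: OdsRow and the sample data are hypothetical,
// standing in for rows of the Kudu ods table.
object ProvinceCityCountSketch {

  final case class OdsRow(provincename: String, cityname: String)

  // Plain-collections equivalent of:
  //   select provincename, cityname, count(1) as cnt
  //   from ods group by provincename, cityname
  def provinceCityCounts(rows: Seq[OdsRow]): Map[(String, String), Long] =
    rows
      .groupBy(r => (r.provincename, r.cityname))
      .map { case (key, group) => key -> group.size.toLong }

  def main(args: Array[String]): Unit = {
    val rows = Seq(
      OdsRow("Guangdong", "Guangzhou"),
      OdsRow("Guangdong", "Shenzhen"),
      OdsRow("Guangdong", "Guangzhou"),
      OdsRow("Zhejiang", "Hangzhou")
    )
    // Sort by (province, city) so the printed order is deterministic
    provinceCityCounts(rows).toSeq.sortBy(_._1).foreach {
      case ((province, city), cnt) => println(s"$province,$city,$cnt")
    }
  }
}
```

With the DataFrame API, the same grouping can be written as `odsDF.groupBy("provincename", "cityname").count()`; that names the count column `count`, so `.withColumnRenamed("count", "cnt")` would be needed to match the SQL version.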

2. Writing the Data to Kudu

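Next, write `result` to Kudu. The code below uses `result` and `masterAddress`, so it goes inside `main`, before `spark.stop()`. For the implementation of `KuduUtils.sink`, see the previous article: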

```scala
val sinkTableName = "province_city_stat"
val partitionId = "provincename"
val schema = SchemaUtils.ProvinceCitySchema
KuduUtils.sink(result, sinkTableName, masterAddress, schema, partitionId)
```

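`SchemaUtils.ProvinceCitySchema` is defined as follows:

```scala
import org.apache.kudu.ColumnSchema.ColumnSchemaBuilder
import org.apache.kudu.{Schema, Type}

import scala.collection.JavaConverters._

object SchemaUtils {
  // Schema of the province_city_stat table; all three columns form the primary key
  lazy val ProvinceCitySchema: Schema = {
    val columns = List(
      new ColumnSchemaBuilder("provincename", Type.STRING).nullable(false).key(true).build(),
      new ColumnSchemaBuilder("cityname", Type.STRING).nullable(false).key(true).build(),
      new ColumnSchemaBuilder("cnt", Type.INT64).nullable(false).key(true).build()
    ).asJava // the Kudu Schema constructor expects a java.util.List
    new Schema(columns)
  }
}
```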

Finally, read the sink table back:

```scala
spark.read.format("org.apache.kudu.spark.kudu")
  .option("kudu.master", masterAddress)
  .option("kudu.table", sinkTableName)
  .load()
  .show()
```

If the read-back query returns rows, the data has landed in Kudu.
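Seeing rows come back is a quick smoke test. A slightly stronger check is sketched below, assuming the read-back rows and the ods row count have already been pulled into the driver (the `StatRow` type and `isConsistent` helper are hypothetical, not part of the project): every (provincename, cityname) pair should appear exactly once, and the `cnt` values should add up to the number of ods rows, because `count(1)` places each ods row in exactly one group.

```scala
// Illustrative sketch: StatRow and isConsistent are hypothetical helpers
// that operate on rows already collected from province_city_stat.
object ProvinceCityStatCheck {

  final case class StatRow(provincename: String, cityname: String, cnt: Long)

  // True when each (provincename, cityname) pair occurs once and the
  // per-group counts add up to the number of rows in the source table.
  def isConsistent(stats: Seq[StatRow], sourceRowCount: Long): Boolean = {
    val pairs = stats.map(r => (r.provincename, r.cityname))
    val pairsUnique = pairs.distinct.size == pairs.size
    pairsUnique && stats.map(_.cnt).sum == sourceRowCount
  }

  def main(args: Array[String]): Unit = {
    val stats = Seq(
      StatRow("Guangdong", "Guangzhou", 2L),
      StatRow("Guangdong", "Shenzhen", 1L),
      StatRow("Zhejiang", "Hangzhou", 1L)
    )
    println(isConsistent(stats, sourceRowCount = 4L))
  }
}
```

For a large table, it would be cheaper to compute the total on the cluster, for example with `agg(sum("cnt"))` from `org.apache.spark.sql.functions`, and compare it against `odsDF.count()` instead of collecting every row.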

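Copyright notice:

This article was compiled by 大数据技术与架构 (Big Data Technology and Architecture) with exclusive authorization from the original author. Reprinting without the original author's permission will be pursued as infringement.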

Editor | 冷眼丶

WeChat official account | import_bigdata
