Container Runtime Containerd Basics

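Contents:

1. Install Containerd

2. Run a busybox image

3. Create a CNI network

4. Give containerd containers network access

5. Share a directory with the host

6. Share namespaces with other containers

7. Use docker and containerd together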

1. Install Containerd

Install Containerd locally:

```shell
yum install -y yum-utils
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum install -y containerd epel-release
# jq comes from the EPEL repository installed above
yum install -y jq
```

Containerd version:

```
[root@containerd ~]# ctr version
Client:
  Version:  1.4.3
  Revision: 269548fa27e0089a8b8278fc4fc781d7f65a939b
  Go version: go1.13.15

Server:
  Version:  1.4.3
  Revision: 269548fa27e0089a8b8278fc4fc781d7f65a939b
  UUID: b7e3b0e7-8a36-4105-a198-470da2be02f2
```

Initialize the Containerd configuration:

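```shell
# The configuration directory may not exist yet on a fresh install
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml
systemctl enable containerd
systemctl start containerd
```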

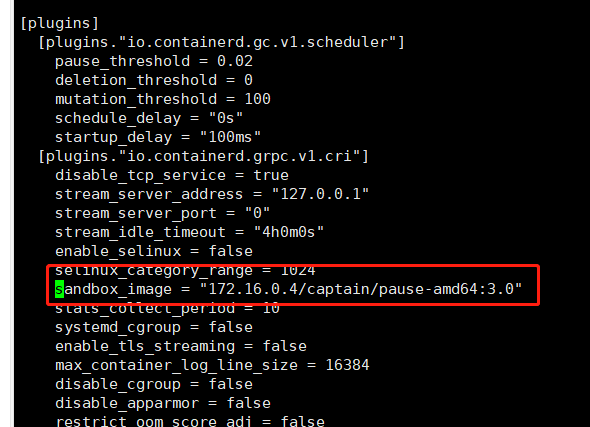

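Replace containerd's default sandbox (pause) image by editing the `sandbox_image` setting in `/etc/containerd/config.toml` (it sits in the `[plugins."io.containerd.grpc.v1.cri"]` section):

```toml
# The Alibaba Cloud mirror registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 can be used instead
sandbox_image = "172.16.0.4/captain/pause-amd64:3.0"
```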
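If you would rather script this change than edit the file by hand, a `sed` substitution is enough. The sketch below is a hypothetical helper (`set_sandbox_image` is our own name, not part of containerd); it assumes GNU sed, as shipped with CentOS, and a single `sandbox_image` line in the file:

```shell
# Hypothetical helper (not part of containerd): point sandbox_image at another pause image.
# Assumes GNU sed (-i without a backup suffix) and exactly one sandbox_image line.
# The image name must not contain "|" or "&", which are special in this sed expression.
set_sandbox_image() {
  local conf="$1" image="$2"
  # Group 1 keeps the indentation and "sandbox_image = "; only the quoted value is replaced.
  sed -i -E "s|^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*|\1\"${image}\"|" "$conf"
}

# Usage:
# set_sandbox_image /etc/containerd/config.toml "172.16.0.4/captain/pause-amd64:3.0"
```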

Apply the configuration and restart the containerd service:

```shell
systemctl daemon-reload
systemctl restart containerd
```

2. Run a busybox image

Steps:

```shell
# Pull the image
ctr -n k8s.io i pull docker.io/library/busybox:latest
# Create a container (not running yet)
ctr -n k8s.io container create docker.io/library/busybox:latest busybox
# Create and start a task, i.e. the container's running process
ctr -n k8s.io task start -d busybox
# The steps above can also be shortened to:
# ctr -n k8s.io run -d docker.io/library/busybox:latest busybox
```

Find the container's PID on the host:

```
[root@containerd ~]# ctr -n k8s.io task ls
TASK       PID     STATUS
busybox    2356    RUNNING
[root@containerd ~]# ps ajxf | grep "containerd-shim-runc\|2356" | grep -v grep
1 2336 2336 1178 ? -1 Sl 0 0:00 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id busybox -address /run/containerd/containerd.sock
2336 2356 2356 2356 ? -1 Ss 0 0:00  \_ sh
```

Enter the container:

```
[root@containerd ~]# ctr -n k8s.io t exec --exec-id $RANDOM -t busybox sh
/ # uname -a
Linux containerd 3.10.0-1062.el7.x86_64 #1 SMP Wed Aug 7 18:08:02 UTC 2019 x86_64 GNU/Linux
/ # ls /etc
group localtime network passwd shadow
/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
/ #
```

Kill the container's task with the SIGKILL signal, then remove the task:

```
[root@containerd ~]# ctr -n k8s.io t kill -s SIGKILL busybox
[root@containerd ~]# ctr -n k8s.io t rm busybox
WARN[0000] task busybox exit with non-zero exit code 137
```
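The exit code 137 in the warning is the usual shell convention rather than anything containerd-specific: a process terminated by signal N reports status 128 + N, and SIGKILL is signal 9. A small sketch, independent of containerd, that reproduces the number on any Linux shell:

```shell
# A child shell sends SIGKILL (signal 9) to itself; the parent sees status 128 + 9.
rc=0
sh -c 'kill -9 $$' || rc=$?
echo "exit status: $rc"
# Map the status back to a signal name (KILL for 137).
echo "signal: $(kill -l $((rc - 128)))"
```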

3. Create a CNI network

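Visit the two Git projects below and download a release from each project's release page (the versions used in this tutorial are listed in the table):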

| Link | Description |
|---|---|
| containernetworking/plugins | CNI plugins (version used here: v0.9.0), file: `cni-plugins-linux-amd64-v0.9.0.tgz` |
| containernetworking/cni | CNI source code (version used here: v0.8.0), file: `cni-v0.8.0.tar.gz` |
| https://www.cni.dev/plugins/ | Documentation describing the available CNI plugins |

Download both archives to the HOME directory and extract them:

```
[root@containerd ~]# pwd
/root
[root@containerd ~]# # Extract into ~/cni-plugins/
[root@containerd ~]# mkdir -p cni-plugins
[root@containerd ~]# tar xvf cni-plugins-linux-amd64-v0.9.0.tgz -C cni-plugins
[root@containerd ~]# # Extract into ~/cni/
[root@containerd ~]# tar -zxvf cni-v0.8.0.tar.gz
[root@containerd ~]# mv cni-0.8.0 cni
```

We start by using the bridge plugin to create a network interface. Run the following:

```shell
mkdir -p /etc/cni/net.d
cat >/etc/cni/net.d/10-mynet.conf <<EOF
{
  "cniVersion": "0.2.0",
  "name": "mynet",
  "type": "bridge",
  "bridge": "cni0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.22.0.0/16",
    "routes": [
      { "dst": "0.0.0.0/0" }
    ]
  }
}
EOF
cat >/etc/cni/net.d/99-loopback.conf <<EOF
{
  "cniVersion": "0.2.0",
  "name": "lo",
  "type": "loopback"
}
EOF
```

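Next, activate the network. `priv-net-run.sh` runs a command in a temporary network namespace wired up by the CNI plugins, which creates the `cni0` bridge as a side effect; afterwards, running `ip a` on the host shows the `cni0` interface: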

```
[root@containerd ~]# cd cni/scripts/
[root@containerd scripts]# CNI_PATH=/root/cni-plugins ./priv-net-run.sh echo "Hello World"
Hello World
[root@containerd scripts]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:ea:35:42 brd ff:ff:ff:ff:ff:ff
    inet 192.168.105.110/24 brd 192.168.105.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::1c94:5385:5133:cd48/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
10: cni0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether de:12:0b:ea:a4:bc brd ff:ff:ff:ff:ff:ff
    inet 10.22.0.1/24 brd 10.22.0.255 scope global cni0
       valid_lft forever preferred_lft forever
    inet6 fe80::dc12:bff:feea:a4bc/64 scope link
       valid_lft forever preferred_lft forever
```

4. Give containerd containers network access

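If you looked closely at section 2, you will have noticed that the busybox container has only a loopback interface, so it cannot reach any network. How can we let containers talk to each other and to external networks? Run the following commands: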

```
[root@containerd ~]# ctr -n k8s.io t ls
TASK       PID     STATUS
busybox    5111    RUNNING
[root@containerd ~]# # pid=5111
[root@containerd ~]# pid=$(ctr -n k8s.io t ls | grep busybox | awk '{print $2}')
[root@containerd ~]# netnspath=/proc/$pid/ns/net
[root@containerd ~]# CNI_PATH=/root/cni-plugins /root/cni/scripts/exec-plugins.sh add $pid $netnspath
```
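Note that `grep busybox` also matches tasks such as `busybox1`, which is created later in this article, so with several tasks running the pipeline above can return more than one PID. A slightly stricter sketch (`task_pid` is a hypothetical helper name) compares the TASK column exactly and skips the header row; it reads `ctr task ls` output from stdin:

```shell
# Hypothetical helper: print the host PID of the task whose TASK column equals $1.
# Reads `ctr -n <namespace> task ls` output on stdin; NR > 1 skips the header row.
task_pid() {
  awk -v name="$1" 'NR > 1 && $1 == name { print $2 }'
}

# Usage:
# pid=$(ctr -n k8s.io t ls | task_pid busybox)
# netnspath=/proc/$pid/ns/net
```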

Entering the busybox container again, we find that it has gained a new network interface and can now reach external networks:

```
[root@containerd ~]# ctr -n k8s.io task exec --exec-id $RANDOM -t busybox sh
/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
3: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether d2:f2:8d:53:fc:95 brd ff:ff:ff:ff:ff:ff
    inet 10.22.0.13/24 brd 10.22.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::d0f2:8dff:fe53:fc95/64 scope link
       valid_lft forever preferred_lft forever
/ # ping 114.114.114.114
PING 114.114.114.114 (114.114.114.114): 56 data bytes
64 bytes from 114.114.114.114: seq=0 ttl=127 time=17.264 ms
64 bytes from 114.114.114.114: seq=0 ttl=127 time=13.838 ms
64 bytes from 114.114.114.114: seq=1 ttl=127 time=18.024 ms
64 bytes from 114.114.114.114: seq=2 ttl=127 time=15.316 ms
```

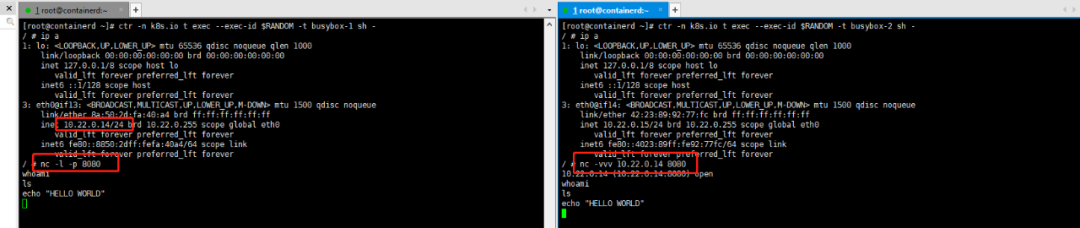
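The container's address (10.22.0.13 above) is handed out by the `host-local` IPAM plugin, which, as far as we know, records each lease as a file named after the IP under `/var/lib/cni/networks/<network name>/` (here `mynet`), next to bookkeeping files such as `lock` and `last_reserved_ip.0`. A sketch that lists the leases, assuming that default data directory; `cni_allocated_ips` is a hypothetical helper name:

```shell
# Hypothetical helper: list IPs leased by the host-local IPAM plugin.
# Assumes the default data directory /var/lib/cni/networks/<network name>.
cni_allocated_ips() {
  local dir="${1:-/var/lib/cni/networks/mynet}" f ip
  for f in "$dir"/*; do
    [ -f "$f" ] || continue            # also covers an empty dir (unexpanded glob)
    ip=$(basename "$f")
    case "$ip" in
      *[!0-9.]*) continue ;;           # skip "lock", "last_reserved_ip.0", ...
    esac
    # The first line of a lease file holds the container ID; strip a possible CR.
    printf '%s %s\n' "$ip" "$(head -n 1 "$f" | tr -d '\r')"
  done
}

# Usage (as root on the host):
# cni_allocated_ips /var/lib/cni/networks/mynet
```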

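Try it yourself: using the method above, create two containers named busybox-1 and busybox-2, expose a TCP port with `nc -l -p 8080` in one of them, and have the two containers communicate with each other.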

5. Share a directory with the host

The following commands share the host's `/tmp` directory with the container, where it is mounted at `/host`:

```
[root@docker scripts]# ctr -n k8s.io c create v4ehxdz8.mirror.aliyuncs.com/library/busybox:latest busybox1 --mount type=bind,src=/tmp,dst=/host,options=rbind:rw
[root@docker scripts]# ctr -n k8s.io t start -d busybox1
[root@docker scripts]# ctr -n k8s.io t exec -t --exec-id $RANDOM busybox1 sh
/ # echo "Hello world" > /host/1
/ #
[root@docker scripts]# cat /tmp/1
Hello world
```

6. Share namespaces with other containers

This section uses only the PID namespace as an example; sharing other namespaces works the same way.

First, let's try namespace sharing with Docker:

```
[root@docker scripts]# docker run --rm -it -d busybox sh
687c80243ee15e0a2171027260e249400feeeee2607f88d1f029cc270402cdd1
[root@docker scripts]# docker run --rm -it -d --pid="container:687c80243ee15e0a2171027260e249400feeeee2607f88d1f029cc270402cdd1" busybox cat
fa2c09bd9c042128ebb2256685ce20e265f4c06da6d9406bc357d149af7b83d2
[root@docker scripts]# docker ps -a
CONTAINER ID   IMAGE     COMMAND   CREATED          STATUS          PORTS     NAMES
fa2c09bd9c04   busybox   "cat"     2 seconds ago    Up 1 second               pedantic_goodall
687c80243ee1   busybox   "sh"      22 seconds ago   Up 21 seconds             hopeful_franklin
[root@docker scripts]# docker exec -it 687c80243ee1 sh
/ # ps aux
PID   USER     TIME  COMMAND
    1 root      0:00 sh
    8 root      0:00 cat
   15 root      0:00 sh
   22 root      0:00 ps aux
```

Following the same approach, we now share a PID namespace using containerd:

```
[root@docker scripts]# ctr -n k8s.io t ls
TASK        PID     STATUS
busybox     2255    RUNNING
busybox1    2652    RUNNING
[root@docker scripts]# # 2652 is the host PID of the running busybox1 task
[root@docker scripts]# ctr -n k8s.io c create --with-ns "pid:/proc/2652/ns/pid" v4ehxdz8.mirror.aliyuncs.com/library/python:3.6-slim python
[root@docker scripts]# ctr -n k8s.io t start -d python   # starts the image's default command (python3)
[root@docker scripts]# ctr -n k8s.io t exec -t --exec-id $RANDOM busybox1 sh
/ # ps aux
PID   USER     TIME  COMMAND
    1 root      0:00 sh
   34 root      0:00 python3
   41 root      0:00 sh
   47 root      0:00 ps aux
```
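To confirm from the host that two processes really share a namespace, compare their links under `/proc/<pid>/ns/`: processes in the same namespace point at the same `pid:[inode]` target. A sketch, where `same_ns` is a hypothetical helper; run it as root on the host so it can read other processes' links:

```shell
# Hypothetical helper: succeed if PIDs $1 and $2 share the namespace type $3
# (pid, net, mnt, ipc, uts, ...). Returns 2 if either link cannot be read.
same_ns() {
  local a b
  a=$(readlink "/proc/$1/ns/$3") || return 2
  b=$(readlink "/proc/$2/ns/$3") || return 2
  [ "$a" = "$b" ]
}

# Usage: 2652 is busybox1's host PID from the listing above; take the python
# task's PID from `ctr -n k8s.io t ls`.
# same_ns 2652 <python-task-pid> pid && echo "shared pid namespace"
```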

7. Use docker and containerd together

Reference: https://docs.docker.com/engine/reference/commandline/dockerd/

Once containerd is installed, configured and running, we can install the Docker client and daemon on the host. Run:

```shell
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum update -y && yum install -y docker-ce-18.06.2.ce
systemctl enable docker
```

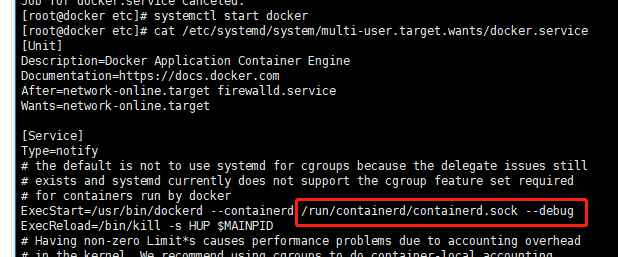

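Edit `/etc/systemd/system/multi-user.target.wants/docker.service` and add the `--containerd` option to dockerd's command line, so that the `ExecStart` line matches the process shown by `ps` below: `ExecStart=/usr/bin/dockerd --containerd /run/containerd/containerd.sock --debug`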

Save and exit, then run:

```
[root@docker ~]# systemctl daemon-reload
[root@docker ~]# systemctl start docker
[root@docker ~]# ps aux | grep docker
root 72570 5.0 2.9 872668 55216 ? Ssl 01:31 0:00 /usr/bin/dockerd --containerd /run/containerd/containerd.sock --debug
```
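The `multi-user.target.wants/docker.service` entry is a symlink created by `systemctl enable`, so editing it changes the packaged unit file itself, which a package upgrade may overwrite. A systemd drop-in is an alternative way to get the same command line. A sketch under these assumptions: the drop-in file name `containerd.conf` and the helper name `write_docker_dropin` are our own choices, the `ExecStart` value is copied from the `ps` output above, and the target directory is a parameter so the function can be tried in a scratch directory first:

```shell
# Hypothetical helper: write a systemd drop-in that starts dockerd against the existing containerd.
# The empty "ExecStart=" line clears the packaged command before the new one is set.
write_docker_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  cat > "$dir/containerd.conf" <<'EOF'
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd --containerd /run/containerd/containerd.sock --debug
EOF
}

# Usage (as root):
# write_docker_dropin && systemctl daemon-reload && systemctl restart docker
```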

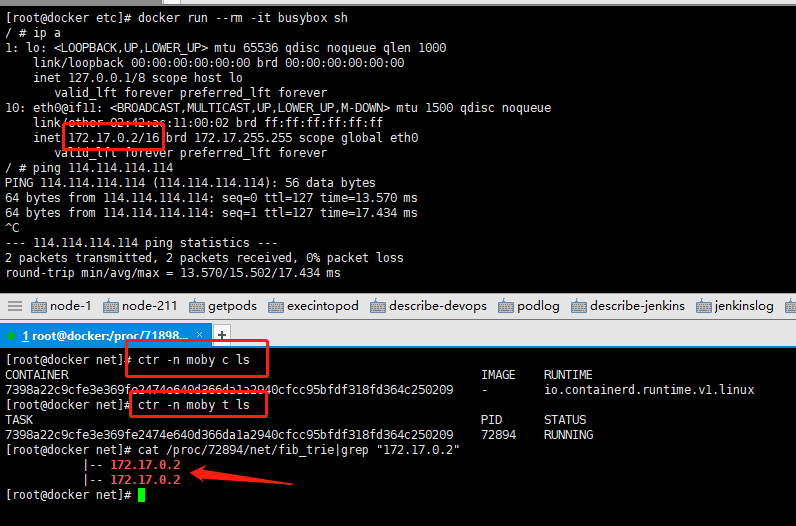

Verification:
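One way to check that dockerd is really using this containerd instance: Docker keeps its containers in the containerd namespace `moby`, so after starting any container with `docker run`, `ctr namespaces ls` should list `moby` and `ctr -n moby c ls` should show that container. A sketch of the namespace check, where `has_ns` is a hypothetical helper that reads `ctr namespaces ls` output on stdin and assumes the output starts with a header row:

```shell
# Hypothetical helper: succeed if namespace $1 appears in `ctr namespaces ls` output (stdin).
# NR > 1 skips the header row; the exit status is 0 only if a matching row was seen.
has_ns() {
  awk -v ns="$1" 'NR > 1 && $1 == ns { found = 1 } END { exit !found }'
}

# Usage:
# docker run -d busybox sleep 3600
# ctr namespaces ls | has_ns moby && ctr -n moby c ls
```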

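Reposted from: K8S中文社区 (K8S Chinese Community)

(Copyright belongs to the original author; the post will be removed upon request in case of infringement.)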

