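A Thoroughly Detailed Guide to Setting Up a Kubernetes Cluster (CentOS Edition)

A key step in learning Kubernetes is learning how to build a k8s cluster yourself. In this article the author shares, free of charge, a recently compiled set of setup techniques. Let's get straight to it.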

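01. Preparing the System Environment

To install and deploy a Kubernetes cluster you first need machines. The most direct approach is to request a few virtual machines from a public cloud (such as Alibaba Cloud); if circumstances allow, a few local physical servers work even better. Either way, the machines need to meet the following conditions:

A 64-bit Linux operating system with kernel version 3.10 or later, which is what installing Docker requires;

Network connectivity between all machines, which is the precondition for container-to-container networking later on;

Internet access, because the deployment pulls images, and a small number of them come from the gcr.io and quay.io registries;

At least 2 CPU cores and 8 GB of memory available per machine is recommended; less will work, but the number of Pods that can be scheduled will be quite limited;

At least 30 GB of disk space, used mainly for Docker images and related log files.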
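The requirements above can be checked with a few lines of shell before going further. The following is a minimal sketch, not part of the original procedure: the function names kernel_ok, arch_ok, and preflight_report are hypothetical helpers, and the list of accepted architectures is an assumption that covers only the common x86_64 and aarch64 cases.

```shell
#!/bin/sh
# Preflight sketch; all function names here are hypothetical helpers.

# kernel_ok VERSION: succeed if VERSION (as printed by `uname -r`) is 3.10 or later.
kernel_ok() {
    major=$(printf '%s' "$1" | cut -d. -f1)
    minor=$(printf '%s' "$1" | cut -d. -f2 | sed 's/[^0-9].*$//')
    [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "$minor" -ge 10 ]; }
}

# arch_ok ARCH: succeed for common 64-bit values of `uname -m` (assumed list).
arch_ok() {
    case "$1" in
        x86_64|aarch64) return 0 ;;
        *) return 1 ;;
    esac
}

# preflight_report: print kernel, architecture, memory and disk figures for this host.
preflight_report() {
    if kernel_ok "$(uname -r)"; then echo "kernel: ok"; else echo "kernel: older than 3.10"; fi
    if arch_ok "$(uname -m)"; then echo "arch: ok"; else echo "arch: not a recognized 64-bit value"; fi
    # MemTotal in /proc/meminfo is in KiB; convert to MiB.
    awk '/^MemTotal:/ {printf "memory: %d MiB\n", $2 / 1024}' /proc/meminfo
    # df -Pk reports 1 KiB blocks; column 4 is the available space on /.
    df -Pk / | awk 'NR == 2 {printf "free disk on /: %d GiB\n", $4 / 1048576}'
}
```

Calling preflight_report on each machine prints the figures to compare against the suggested 8 GB of memory and 30 GB of disk. Network reachability between machines and to the image registries still has to be checked separately.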

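For this exercise we prepared two virtual machines, configured as follows:

2 CPU cores, 2 GB of memory, and 30 GB of disk space;

Ubuntu 20.04 LTS Server, with Linux kernel 5.4.0;

Connected on an internal network, with unrestricted access to the internet.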

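02. Introducing kubeadm, the Kubernetes Cluster Deployment Tool

Kubernetes is a typical distributed system, and deploying it has long been a major obstacle for beginners. In the project's early days, deployment relied mainly on scripts maintained by the community, which still involved a great deal of operational work: compiling binaries, writing configuration files, setting up the kube-apiserver authorization configuration, and so on. Today the major cloud providers commonly automate these tedious steps with operations tools such as SaltStack and Ansible, but even so the process remains laborious for a beginner.

To address this pain point, the Kubernetes community, driven by volunteers, launched kubeadm as a standalone one-command deployment tool. With kubeadm, a few simple commands are enough to bring up a Kubernetes cluster quickly. The rest of this article demonstrates how to use kubeadm to deploy a cluster with a simple topology.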

03. Installing kubeadm and Docker

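As introduced above, kubeadm is the official one-command deployment tool for Kubernetes. Installing it only requires adding the kubeadm package repository and then installing with yum. The steps are as follows:

1) Edit the operating system's package repository configuration and add the Docker and Kubernetes mirror repositories:

# Add the Alibaba Cloud Docker repository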

[root@centos-linux ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# Install Docker

[root@centos-linux ~]# yum -y install docker-ce-18.09.9-3.el7

# Start Docker and enable it at boot

[root@centos-linux ~]# systemctl start docker

[root@centos-linux ~]# systemctl enable docker

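Next, add the Kubernetes yum repository. Because of network restrictions, you can use a mirror hosted in China instead, such as the Alibaba Cloud mirror:

Add the Alibaba Cloud Kubernetes yum repository: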

# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2) With the above steps complete, install kubeadm with yum:

[root@centos-linux ~]# yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0

At the time of writing the latest release is 1.21; this guide installs 1.20.

# Check the installed kubectl version

[root@centos-linux ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.0", GitCommit:"af46c47ce925f4c4ad5cc8d1fca46c7b77d13b38", GitTreeState:"clean", BuildDate:"2020-12-08T17:59:43Z", GoVersion:"go1.15.5", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

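The "connection refused" message is expected at this point, because no cluster has been initialized yet. Installing kubeadm in this way also automatically installs the binaries of the core Kubernetes components kubelet, kubectl, and kubernetes-cni.

3) Start the Docker service and adjust its limits

Before actually deploying Kubernetes, a few Docker-related settings need adjusting. First, edit /etc/default/grub and add the following parameters to the GRUB_CMDLINE_LINUX setting: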

GRUB_CMDLINE_LINUX=" cgroup_enable=memory swapaccount=1"

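After saving the file, regenerate the GRUB configuration (on CentOS 7 with BIOS boot this is typically done with grub2-mkconfig -o /boot/grub2/grub.cfg) and then reboot the server: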

root@kubernetesnode01:/opt/kubernetes-config# reboot

This change addresses the Docker warning "WARNING: No swap limit support" that may otherwise appear. Next, create /etc/docker/daemon.json with the following content:

# cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://6ze43vnb.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

After saving the file, restart Docker:

# systemctl restart docker

You can now check Docker's cgroup driver:

# docker info | grep Cgroup

Cgroup Driver: systemd

# Turn off swap; by default the kubelet will not start while swap is enabled

# swapoff -a
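The swapoff -a command only lasts until the next reboot. One way to keep swap off permanently is to comment out the swap entries in /etc/fstab. The sketch below is an illustration under that assumption: comment_out_swap is a hypothetical helper that prints the edited content and leaves the file itself untouched, so the result can be reviewed before anything is replaced.

```shell
#!/bin/sh
# comment_out_swap FILE: print FILE with every active swap entry commented out.
# FILE is expected to be in /etc/fstab format; the file itself is not modified.
comment_out_swap() {
    # Match uncommented lines that contain "swap" as a whitespace-delimited field
    # and prefix them with '#'. All other lines pass through unchanged.
    sed -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}
```

A cautious way to use it is to write the output to a temporary file, for example comment_out_swap /etc/fstab > /tmp/fstab.new, compare it with the original, and only then copy it into place as root.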

04. Deploying the Kubernetes Master Node

Create a kubeadm.yaml configuration file with the following content:

apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
controllerManager:
  extraArgs:
    horizontal-pod-autoscaler-use-rest-clients: "true"
    horizontal-pod-autoscaler-sync-period: "10s"
    node-monitor-grace-period: "10s"
apiServer:
  extraArgs:
    runtime-config: "api/all=true"
kubernetesVersion: "v1.20.0"

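In this YAML file, the setting horizontal-pod-autoscaler-use-rest-clients: "true" means that the kube-controller-manager deployed later will be able to scale horizontally based on custom metrics; interested readers can look up the details. The value "v1.20.0" is the Kubernetes version that kubeadm will deploy for us.

Note that if network restrictions in China prevent the required Docker images from being downloaded during execution, you can use the error messages to find the corresponding images on a mirror in China (such as Alibaba Cloud), pull them, and re-tag them before installing. For example:

# Pull the Kubernetes component images from the Alibaba Cloud Docker registry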

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.20.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.20.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.20.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.20.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0

# Re-tag the images

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.20.0 k8s.gcr.io/kube-scheduler:v1.20.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.20.0 k8s.gcr.io/kube-controller-manager:v1.20.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.20.0 k8s.gcr.io/kube-apiserver:v1.20.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.20.0 k8s.gcr.io/kube-proxy:v1.20.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0 k8s.gcr.io/etcd:3.4.13-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0 k8s.gcr.io/coredns:1.7.0
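The fourteen pull and tag commands above follow one pattern, so they can be generated from a single image list. The sketch below assumes the mirror layout used in those commands: the kube-* components carry an -amd64 suffix in the mirror, the other images do not. The names mirror_ref and print_retag_commands are hypothetical helpers, and the functions only print commands rather than running Docker.

```shell
#!/bin/sh
# Sketch: generate the pull/tag commands from one image list.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
TARGET=k8s.gcr.io

# mirror_ref NAME:TAG: print the reference of the same image in the mirror.
# kube-* components carry an "-amd64" suffix there, as in the commands above.
mirror_ref() {
    name=${1%%:*}
    tag=${1#*:}
    case "$name" in
        kube-*) echo "$MIRROR/$name-amd64:$tag" ;;
        *)      echo "$MIRROR/$name:$tag" ;;
    esac
}

# print_retag_commands: emit one "docker pull" and one "docker tag" line per image.
print_retag_commands() {
    for image in kube-apiserver:v1.20.0 kube-controller-manager:v1.20.0 \
                 kube-scheduler:v1.20.0 kube-proxy:v1.20.0 \
                 pause:3.2 etcd:3.4.13-0 coredns:1.7.0; do
        src=$(mirror_ref "$image")
        echo "docker pull $src"
        echo "docker tag $src $TARGET/$image"
    done
}
```

After reviewing the generated lines, they can be executed with print_retag_commands | sh. For a different Kubernetes version the image list has to change; kubeadm config images list prints the images a given kubeadm version expects.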

The images are now visible through Docker:

root@kubernetesnode01:/opt/kubernetes-config# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.18.1 4e68534e24f6 2 months ago 117MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64 v1.18.1 4e68534e24f6 2 months ago 117MB

k8s.gcr.io/kube-controller-manager v1.18.1 d1ccdd18e6ed 2 months ago 162MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64 v1.18.1 d1ccdd18e6ed 2 months ago 162MB

k8s.gcr.io/kube-apiserver v1.18.1 a595af0107f9 2 months ago 173MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64 v1.18.1 a595af0107f9 2 months ago 173MB

k8s.gcr.io/kube-scheduler v1.18.1 6c9320041a7b 2 months ago 95.3MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64 v1.18.1 6c9320041a7b 2 months ago 95.3MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 4 months ago 683kB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 4 months ago 683kB

k8s.gcr.io/coredns 1.6.7 67da37a9a360 4 months ago 43.8MB

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns 1.6.7 67da37a9a360 4 months ago 43.8MB

k8s.gcr.io/etcd 3.4.3-0 303ce5db0e90 8 months ago 288MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64 3.4.3-0 303ce5db0e90 8 months ago 288MB

root@kubernetesnode01:/opt/kubernetes-config# kubeadm init --config kubeadm.yaml --v=5

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.211.55.13:6443 --token yi9lua.icl2umh9yifn6z9k \

--discovery-token-ca-cert-hash sha256:074460292aa167de2ae9785f912001776b936cec79af68cec597bd4a06d5998d

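As the output above shows, after a successful deployment kubeadm prints the following command, which is used later to join worker nodes to the cluster: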

kubeadm join 10.211.55.13:6443 --token yi9lua.icl2umh9yifn6z9k \

--discovery-token-ca-cert-hash sha256:074460292aa167de2ae9785f912001776b936cec79af68cec597bd4a06d5998d
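Because the join command is usually copied by hand from one terminal to another, a quick format check can catch truncated values before running it on a worker. The sketch below assumes the formats visible in the output above: a bootstrap token of six characters, a dot, and sixteen characters, and a CA hash of sha256: followed by 64 lowercase hexadecimal digits. Both function names are hypothetical.

```shell
#!/bin/sh
# valid_token TOKEN: succeed if TOKEN has the form [a-z0-9]{6}.[a-z0-9]{16}.
valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# valid_ca_hash HASH: succeed if HASH is "sha256:" plus 64 lowercase hex digits.
valid_ca_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

These checks only validate the shape of the values, not whether the token is still accepted by the cluster. Bootstrap tokens expire after 24 hours by default; a fresh join command can be printed on the master with kubeadm token create --print-join-command.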

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

centos-linux.shared NotReady control-plane,master 6m55s v1.20.0

# kubectl describe node centos-linux.shared

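This command returns very detailed information about a node object's status and events, and it is the most important troubleshooting tool when debugging a Kubernetes cluster. The output includes the following: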

...

Conditions

...

Ready False... KubeletNotReady runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

...

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-66bff467f8-l4wt6 0/1 Pending 0 64m

coredns-66bff467f8-rcqx6 0/1 Pending 0 64m

etcd-kubernetesnode01 1/1 Running 0 64m

kube-apiserver-kubernetesnode01 1/1 Running 0 64m

kube-controller-manager-kubernetesnode01 1/1 Running 0 64m

kube-proxy-wjct7 1/1 Running 0 64m

kube-scheduler-kubernetesnode01 1/1 Running 0 64m
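When a cluster has more than a handful of nodes or Pods, it helps to filter these listings down to the entries that need attention. The sketch below is a small illustration, assuming the default column layout of kubectl get nodes and kubectl get pods shown above; not_ready_nodes and non_running_pods are hypothetical helper names, and both read the kubectl output from standard input.

```shell
#!/bin/sh
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the names
# of nodes whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" {print $1}'
}

# non_running_pods: read `kubectl get pods` output on stdin and print the names
# of Pods whose STATUS column is anything other than "Running".
non_running_pods() {
    awk 'NR > 1 && $3 != "Running" {print $1}'
}
```

Typical use is kubectl get nodes | not_ready_nodes and kubectl get pods -n kube-system | non_running_pods. Each reported name can then be passed to kubectl describe for the details. Note that Pods that finished normally are also listed, since their status is not Running.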

05. Deploying the Kubernetes Network Plugin

# kubectl apply -f https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.apps/weave-net created

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-66bff467f8-l4wt6 1/1 Running 0 116m

coredns-66bff467f8-rcqx6 1/1 Running 0 116m

etcd-kubernetesnode01 1/1 Running 0 116m

kube-apiserver-kubernetesnode01 1/1 Running 0 116m

kube-controller-manager-kubernetesnode01 1/1 Running 0 116m

kube-proxy-wjct7 1/1 Running 0 116m

kube-scheduler-kubernetesnode01 1/1 Running 0 116m

weave-net-746qj 2/2 Running 0 14m

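As shown, all the system Pods have now started successfully. The Weave network plugin just deployed has created a new Pod named "weave-net-746qj" in the kube-system namespace; this Pod is the control component that the container network plugin runs on each node.

06. Deploying the Worker Node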

root@kubenetesnode02:~# kubeadm join 10.211.55.6:6443 --token jfulwi.so2rj5lukgsej2o6 --discovery-token-ca-cert-hash sha256:d895d512f0df6cb7f010204193a9b240e8a394606090608daee11b988fc7fea6 --v=5

...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# Create the configuration directory

root@kubenetesnode02:~# mkdir -p $HOME/.kube

# Copy the config file from $HOME/.kube/ on the Master node to the same directory on the Worker node

root@kubenetesnode02:~# scp root@10.211.55.6:$HOME/.kube/config $HOME/.kube/

# Set ownership of the config file

root@kubenetesnode02:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config

root@kubernetesnode02:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubenetesnode02 NotReady <none> 33m v1.18.4

kubernetesnode01 Ready master 29h v1.18.4

root@kubernetesnode02:~# kubectl describe node kubenetesnode02

...

Conditions:

...

Ready False ... KubeletNotReady runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

...

# Pull the required images from Alibaba Cloud

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.20.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

# Re-tag the images

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.20.0 k8s.gcr.io/kube-proxy:v1.20.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2

If everything is working, check the node status again:

root@kubenetesnode02:~# kubectl get node

NAME STATUS ROLES AGE VERSION

kubenetesnode02 Ready <none> 7h52m v1.20.0

kubernetesnode01 Ready master 37h v1.20.0

root@kubenetesnode02:~# kubectl label node kubenetesnode02 node-role.kubernetes.io/worker=worker

root@kubenetesnode02:~# kubectl get node

NAME STATUS ROLES AGE VERSION

kubenetesnode02 Ready worker 8h v1.18.4

kubernetesnode01 Ready master 37h v1.18.4

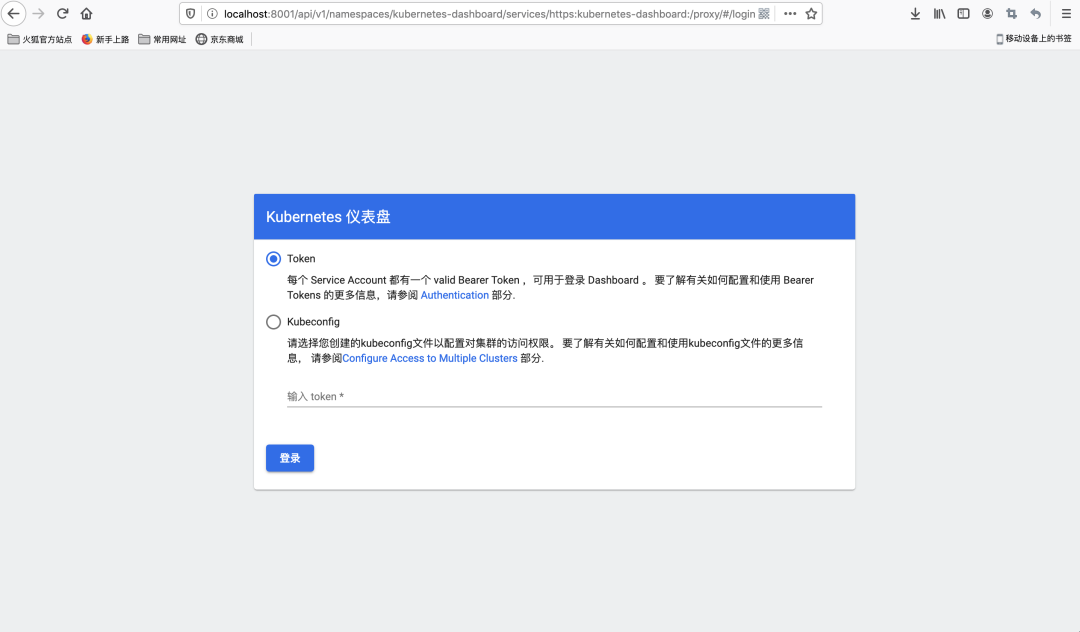

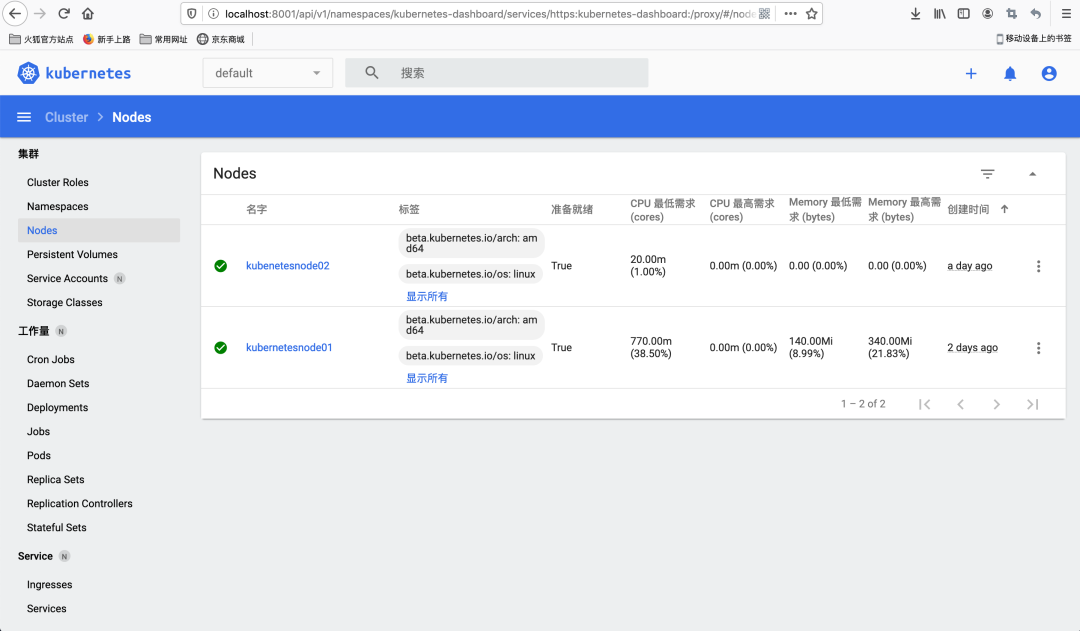

07. Deploying the Dashboard Visualization Plugin

root@kubenetesnode02:~# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

root@kubenetesnode02:~# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6b4884c9d5-xfb8b 1/1 Running 0 12h

kubernetes-dashboard-7f99b75bf4-9lxk8 1/1 Running 0 12h

root@kubenetesnode02:~# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.97.69.158 <none> 8000/TCP 13h

kubernetes-dashboard ClusterIP 10.111.30.214 <none> 443/TCP 13h

qiaodeMacBook-Pro-2:.kube qiaojiang$ kubectl proxy

Starting to serve on 127.0.0.1:8001

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

1) Create a service account

Save the following as dashboard-adminuser.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

qiaodeMacBook-Pro-2:.kube qiaojiang$ kubectl apply -f dashboard-adminuser.yaml

Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply

serviceaccount/admin-user configured

2) Create a ClusterRoleBinding

Save the following as dashboard-clusterRoleBingding.yaml:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

qiaodeMacBook-Pro-2:.kube qiaojiang$ kubectl apply -f dashboard-clusterRoleBingding.yaml

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

3) Get the Bearer Token

qiaodeMacBook-Pro-2:.kube qiaojiang$ kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-xxq2b

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 213dce75-4063-4555-842a-904cf4e88ed1

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlplSHRwcXhNREs0SUJPcTZIYU1kT0pidlFuOFJaVXYzLWx0c1BOZzZZY28ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXh4cTJiIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIyMTNkY2U3NS00MDYzLTQ1NTUtODQyYS05MDRjZjRlODhlZDEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.MIjSewAk4aVgVCU6fnBBLtIH7PJzcDUozaUoVGJPUu-TZSbRZHotugvrvd8Ek_f5urfyYhj14y1BSe1EXw3nINmo4J7bMI94T_f4HvSFW1RUznfWZ_uq24qKjNgqy4HrSfmickav2PmGv4TtumjhbziMreQ3jfmaPZvPqOa6Xmv1uhytLw3G6m5tRS97kl0i8A1lqnOWu7COJX0TtPkDrXiPPX9IzaGrp3Hd0pKHWrI_-orxsI5mmFj0cQZt1ncHarCssVnyHkWQqtle4ljV2HAO-bgY1j0E1pOPTlzpmSSbmAmedXZym77N10YNaIqtWvFjxMzhFqeTPNo539V1Gg
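The Dashboard login form only needs the value after "token:", so it can be convenient to pull that one field out of the describe output instead of copying it by hand. The sketch below assumes the output layout shown above; extract_token and looks_like_jwt are hypothetical helper names, and the second function only checks that the value has the three dot-separated parts of a JWT without verifying its signature.

```shell
#!/bin/sh
# extract_token: read `kubectl describe secret` output on stdin and print only
# the value on the line whose first field is "token:".
extract_token() {
    awk '$1 == "token:" {print $2; exit}'
}

# looks_like_jwt TOKEN: succeed if TOKEN consists of three dot-separated parts
# made of base64url characters (a shape check only, not a signature check).
looks_like_jwt() {
    printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+$'
}
```

Piping the describe secret command from this step into extract_token prints just the token, which can then be pasted into the Dashboard login page. Because this account is bound to cluster-admin, the token should be treated like a password.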

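This completes the deployment of the Kubernetes Dashboard and the login through a local proxy. In a real production environment, if going through a local proxy every time is inconvenient, you can instead configure an Ingress to reach the Dashboard from outside the cluster; interested readers can try this themselves. Alternatively, you can first expose a port for the Dashboard, for example:

# Look up the svc name

# kubectl get svc -n kubernetes-dashboard

# kubectl edit services -n kubernetes-dashboard kubernetes-dashboard

Then modify the configuration as follows: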

ports:
- nodePort: 30000
  port: 443
  protocol: TCP
  targetPort: 8443
selector:
  k8s-app: kubernetes-dashboard
sessionAffinity: None
type: NodePort

After that, the Dashboard is reachable at a node IP plus the nodePort, for example:

https://47.98.33.48:30000/
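The nodePort value cannot be chosen freely: unless the API server has been configured otherwise, Kubernetes only accepts NodePort values between 30000 and 32767. The sketch below checks a port against that default range and builds the resulting URL. The function names valid_node_port and dashboard_url are hypothetical, and the range is an assumption that holds only for clusters using the default setting.

```shell
#!/bin/sh
# valid_node_port PORT: succeed if PORT is a number in the default
# NodePort range 30000-32767.
valid_node_port() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
    esac
    [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# dashboard_url NODE_IP PORT: print the HTTPS URL for the Dashboard,
# or fail with a message if PORT is outside the default range.
dashboard_url() {
    valid_node_port "$2" || { echo "port $2 is outside 30000-32767" >&2; return 1; }
    echo "https://$1:$2/"
}
```

For example, dashboard_url 47.98.33.48 30000 prints the address used above, while a port such as 443 is rejected before the Service is edited.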

08. Adjusting the Taint/Toleration Policy on the Master

root@kubenetesnode02:~# kubectl describe node kubernetesnode01

Name: kubernetesnode01

Roles: master

...

Taints: node-role.kubernetes.io/master:NoSchedule

...

root@kubernetesnode01:~# kubectl taint nodes --all node-role.kubernetes.io/master-

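09. Commands for Restarting the Kubernetes Cluster

If a server loses power or is rebooted, the cluster can be restarted with the following commands:

# Restart Docker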

systemctl daemon-reload

systemctl restart docker

# Restart the kubelet

systemctl restart kubelet.service

Those are the detailed steps for building a Kubernetes learning cluster on CentOS 7. Deployment on other Linux distributions is similar, so choose whichever suits your needs.
