Cilium Service Mesh Is Getting All the Attention, So How Do You Actually Use It? - Hands-On Practice

Hi everyone, I'm Zhang Jintao.

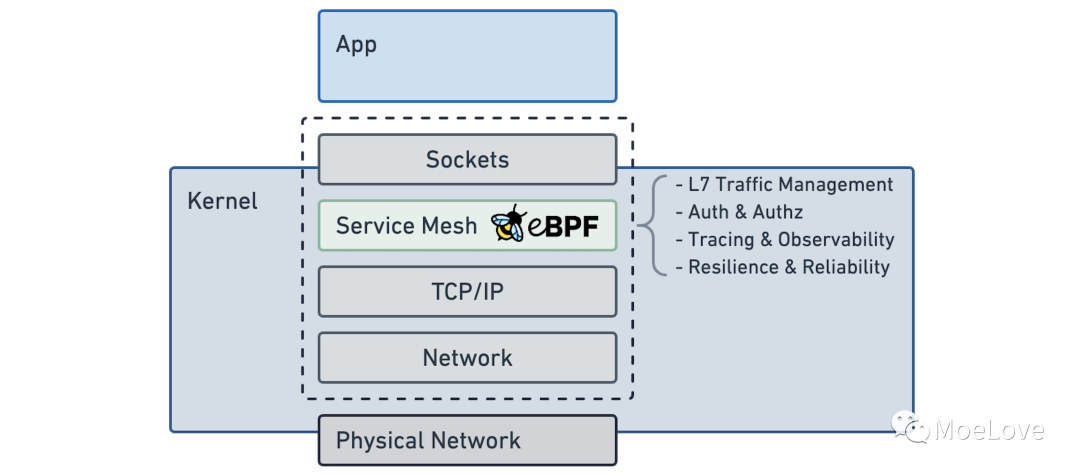

Cilium is open-source software that uses eBPF to provide secure, observable network connectivity between container workloads.

If you are not yet familiar with Cilium, you can refer to my two earlier articles:

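Cilium v1.11.0 was recently released, adding OpenTelemetry support along with a number of other enhancements. Alongside the release, the Cilium Service Mesh plan was announced. Cilium Service Mesh is currently in beta and is expected to be merged into Cilium v1.12 in 2022.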

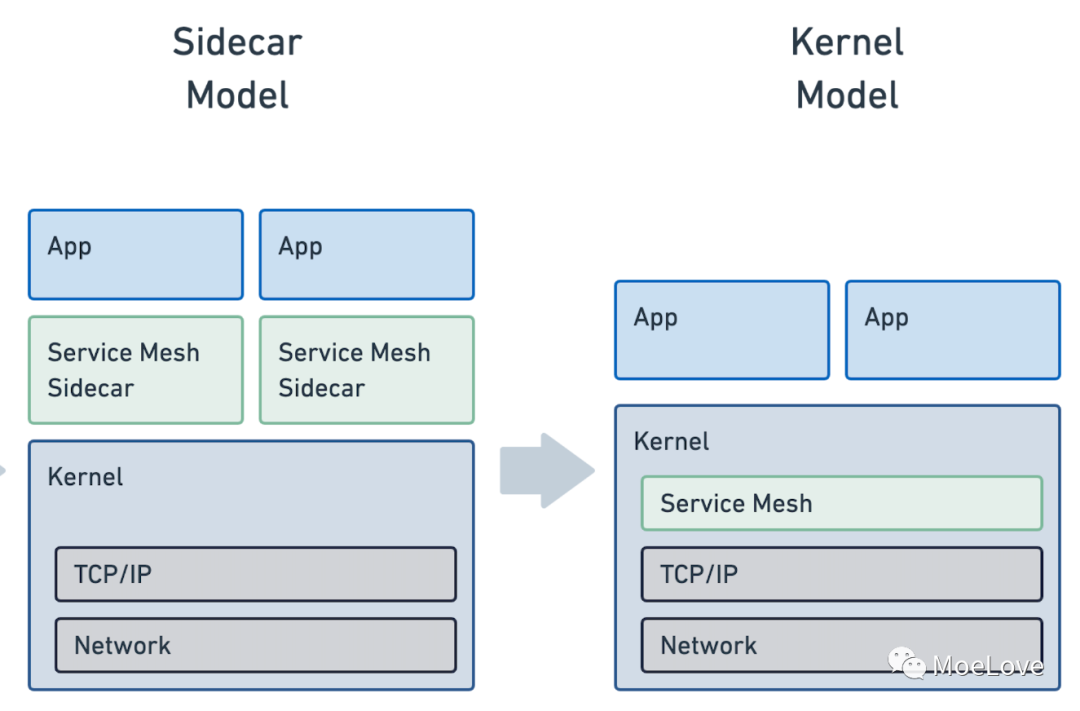

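Cilium Service Mesh also brings an entirely new model.

Compared with conventional solutions such as Istio or Linkerd, the most notable feature of Cilium's eBPF-based Service Mesh is that it replaces the sidecar proxy model with a kernel model, as shown in the figure below:

There is no longer a sidecar next to every application; support is provided directly on each node.

I learned about this several months ago and took part in some discussions about it. Recently, following Isovalent's article "How eBPF will solve Service Mesh - Goodbye Sidecars", Cilium Service Mesh has become a focus of attention.

In this article I'll walk you through a hands-on experience of Cilium Service Mesh.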

Installation and Deployment

I'm using KIND as the test environment, and my kernel version is 5.15.8.
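KIND nodes are just containers, so Cilium's eBPF programs run on the host kernel, which makes the host kernel version worth checking before you start. A minimal sketch (`kernel_at_least` is a hypothetical helper name, and it relies on GNU `sort -V`); the `4.9.17` in the usage line below is only an example threshold based on my recollection of Cilium's baseline at the time, so check the system requirements for the release you install:

```bash
#!/usr/bin/env bash
# Succeeds if kernel release $1 (e.g. the output of `uname -r`) is at least
# version $2. Distribution suffixes such as "-generic" are stripped first.
# Relies on GNU `sort -V` for version-aware ordering.
kernel_at_least() {
  local current="${1%%-*}" minimum="$2"
  # Sort both versions; if the smaller one is the minimum, current >= minimum.
  [ "$(printf '%s\n%s\n' "$minimum" "$current" | sort -V | head -n 1)" = "$minimum" ]
}
```

For example: `kernel_at_least "$(uname -r)" 4.9.17 && echo "kernel looks new enough"`.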

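Preparing the KIND cluster

I won't go over installing the KIND command-line tool here; if you're interested, see my earlier article "Using KIND to Build Your Own Local Kubernetes Test Environment".

Here is the configuration file (`kind-config.yaml`) I used to create the cluster: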

```yaml
apiVersion: kind.x-k8s.io/v1alpha4
kind: Cluster
nodes:
- role: control-plane
- role: worker
- role: worker
- role: worker
networking:
  disableDefaultCNI: true
```
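The key setting is `networking.disableDefaultCNI: true`: it stops KIND from installing its default CNI (kindnet), so the nodes stay `NotReady` until a CNI, here Cilium, is installed. If you want a different number of workers, a small hypothetical helper (`write_kind_config` is my name, not part of KIND) can generate the same file:

```bash
#!/usr/bin/env bash
# Write a KIND config with one control-plane node and N workers, with the
# default CNI disabled so that Cilium can be installed as the CNI.
# Arguments: $1 = number of workers (default 3), $2 = output file.
write_kind_config() {
  local workers="${1:-3}" out="${2:-kind-config.yaml}" i
  {
    printf '%s\n' "apiVersion: kind.x-k8s.io/v1alpha4" "kind: Cluster" "nodes:" "- role: control-plane"
    for ((i = 0; i < workers; i++)); do
      printf '%s\n' "- role: worker"
    done
    printf '%s\n' "networking:" "  disableDefaultCNI: true"
  } > "$out"
}
```

`write_kind_config 3 kind-config.yaml` reproduces the file above.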

Create the cluster:

```
➜ cilium-mesh kind create cluster --config kind-config.yaml
Creating cluster "kind" ...
 ✓ Ensuring node image (kindest/node:v1.22.4) 🖼
 ✓ Preparing nodes 📦 📦 📦 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing StorageClass 💾
 ✓ Joining worker nodes 🚜
Set kubectl context to "kind-kind"
You can now use your cluster with:

kubectl cluster-info --context kind-kind

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/
```

Installing the Cilium CLI

Here we use the Cilium CLI tool to deploy Cilium.

```
➜ cilium-mesh curl -L --remote-name-all https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz\{,.sha256sum\}
[1/2]: https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz --> cilium-linux-amd64.tar.gz
--_curl_--https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100   154  100   154    0     0    243      0 --:--:-- --:--:-- --:--:--   242
100   664  100   664    0     0    579      0  0:00:01  0:00:01 --:--:--   579
100 14.6M  100 14.6M    0     0  2928k      0  0:00:05  0:00:05 --:--:-- 3910k
[2/2]: https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz.sha256sum --> cilium-linux-amd64.tar.gz.sha256sum
--_curl_--https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz.sha256sum
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100   164  100   164    0     0    419      0 --:--:-- --:--:-- --:--:--   418
100   674  100   674    0     0    861      0 --:--:-- --:--:-- --:--:--   861
100    92  100    92    0     0     67      0  0:00:01  0:00:01 --:--:--     0
➜ cilium-mesh ls
cilium-linux-amd64.tar.gz  cilium-linux-amd64.tar.gz.sha256sum  kind-config.yaml
➜ cilium-mesh tar -zxvf cilium-linux-amd64.tar.gz
cilium
```
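The commands above download the `.sha256sum` file but never use it, and they leave the `cilium` binary in the current directory. A minimal sketch (`install_cilium_cli` is a hypothetical helper name) that verifies the checksum first and then extracts the binary into a directory on your `PATH`; run it from the download directory, because `sha256sum --check` resolves the file name listed in the checksum file relative to the current directory:

```bash
#!/usr/bin/env bash
# Verify the downloaded tarball against its .sha256sum file, then extract
# only the `cilium` binary into the target directory.
# Arguments: $1 = tarball (default cilium-linux-amd64.tar.gz),
#            $2 = destination directory (default /usr/local/bin).
install_cilium_cli() {
  local tarball="${1:-cilium-linux-amd64.tar.gz}" dest="${2:-/usr/local/bin}"
  # Refuse to install anything if the checksum does not match.
  sha256sum --check "${tarball}.sha256sum" || return 1
  tar -xzf "$tarball" -C "$dest" cilium
}
```

Writing to `/usr/local/bin` usually needs root, so either run it from a root shell or pass a directory you own, e.g. `install_cilium_cli cilium-linux-amd64.tar.gz "$HOME/.local/bin"`.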

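Preloading images

Deploying Cilium requires several images, which we can download in advance and load into the KIND nodes. If your network connection is good, you can skip this step.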

```
➜ cilium-mesh ciliumMeshImage=("quay.io/cilium/cilium-service-mesh:v1.11.0-beta.1" "quay.io/cilium/operator-generic-service-mesh:v1.11.0-beta.1" "quay.io/cilium/hubble-relay-service-mesh:v1.11.0-beta.1")
➜ cilium-mesh for i in "${ciliumMeshImage[@]}"
do
  docker pull "$i"
  kind load docker-image "$i"
done
```

Deploying Cilium

Next, we deploy directly with the Cilium CLI. Pay attention to the flags used here.

```
➜ cilium-mesh cilium install --version -service-mesh:v1.11.0-beta.1 --config enable-envoy-config=true --kube-proxy-replacement=probe --agent-image='quay.io/cilium/cilium-service-mesh:v1.11.0-beta.1' --operator-image='quay.io/cilium/operator-generic-service-mesh:v1.11.0-beta.1' --datapath-mode=vxlan
🔮 Auto-detected Kubernetes kind: kind
✨ Running "kind" validation checks
✅ Detected kind version "0.12.0"
ℹ️ using Cilium version "-service-mesh:v1.11.0-beta.1"
🔮 Auto-detected cluster name: kind-kind
🔮 Auto-detected IPAM mode: kubernetes
🔮 Custom datapath mode: vxlan
🔑 Found CA in secret cilium-ca
🔑 Generating certificates for Hubble...
🚀 Creating Service accounts...
🚀 Creating Cluster roles...
🚀 Creating ConfigMap for Cilium version 1.11.0...
ℹ️ Manual overwrite in ConfigMap: enable-envoy-config=true
🚀 Creating Agent DaemonSet...
🚀 Creating Operator Deployment...
⌛ Waiting for Cilium to be installed and ready...
✅ Cilium was successfully installed! Run 'cilium status' to view installation health
```
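The install command packs all of its flags onto one line. A minimal sketch (the array name `install_args` and the `MESH_VERSION` variable are mine) that keeps exactly the same flags but gives each one a comment; the comments are my hedged reading of the flags based on the output above, not authoritative CLI documentation:

```bash
#!/usr/bin/env bash
# Same flags as the `cilium install` command above, one per line.
MESH_VERSION="v1.11.0-beta.1"
install_args=(
  # Copied verbatim; the CLI reports it as the Cilium version in its output,
  # while the explicit image flags below pin the actual images.
  --version "-service-mesh:${MESH_VERSION}"
  # Written into the cilium-config ConfigMap ("Manual overwrite" above);
  # turns on CiliumEnvoyConfig support used later in this article.
  --config enable-envoy-config=true
  # Replace kube-proxy only for the features the kernel supports.
  --kube-proxy-replacement=probe
  --agent-image="quay.io/cilium/cilium-service-mesh:${MESH_VERSION}"
  --operator-image="quay.io/cilium/operator-generic-service-mesh:${MESH_VERSION}"
  # Tunnel traffic between nodes with VXLAN (the "to-overlay" events later).
  --datapath-mode=vxlan
)
# Print the command instead of running it.
echo cilium install "${install_args[@]}"
```

Replace the final `echo` with `cilium install "${install_args[@]}"` to actually run it.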

Checking the status

After a successful installation, you can check the current state of the Cilium deployment with the `cilium status` command.

```
➜ cilium-mesh cilium status
    /¯¯\
 /¯¯\__/¯¯\    Cilium:         OK
 \__/¯¯\__/    Operator:       OK
 /¯¯\__/¯¯\    Hubble:         disabled
 \__/¯¯\__/    ClusterMesh:    disabled
    \__/

Deployment        cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
DaemonSet         cilium             Desired: 4, Ready: 4/4, Available: 4/4
Containers:       cilium             Running: 4
                  cilium-operator    Running: 1
Cluster Pods:     3/3 managed by Cilium
Image versions    cilium             quay.io/cilium/cilium-service-mesh:v1.11.0-beta.1: 4
                  cilium-operator    quay.io/cilium/operator-generic-service-mesh:v1.11.0-beta.1: 1
```

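Enabling Hubble

Hubble provides the observability features. Before enabling it, preload one more image into the KIND nodes; skip this if your network connection is good:

`docker.io/envoyproxy/envoy:v1.18.2@sha256:e8b37c1d75787dd1e712ff389b0d37337dc8a174a63bed9c34ba73359dc67da7`

Then enable Hubble with the Cilium CLI: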

```
➜ cilium-mesh cilium hubble enable --relay-image='quay.io/cilium/hubble-relay-service-mesh:v1.11.0-beta.1' --ui
🔑 Found CA in secret cilium-ca
✨ Patching ConfigMap cilium-config to enable Hubble...
♻️ Restarted Cilium pods
⌛ Waiting for Cilium to become ready before deploying other Hubble component(s)...
🔑 Generating certificates for Relay...
✨ Deploying Relay from quay.io/cilium/hubble-relay-service-mesh:v1.11.0-beta.1...
✨ Deploying Hubble UI from quay.io/cilium/hubble-ui:v0.8.3 and Hubble UI Backend from quay.io/cilium/hubble-ui-backend:v0.8.3...
⌛ Waiting for Hubble to be installed...
    /¯¯\
 /¯¯\__/¯¯\    Cilium:         OK
 \__/¯¯\__/    Operator:       OK
 /¯¯\__/¯¯\    Hubble:         OK
 \__/¯¯\__/    ClusterMesh:    disabled
    \__/

DaemonSet         cilium             Desired: 4, Ready: 4/4, Available: 4/4
Deployment        cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
Deployment        hubble-relay       Desired: 1, Ready: 1/1, Available: 1/1
Deployment        hubble-ui          Desired: 1, Unavailable: 1/1
Containers:       cilium             Running: 4
                  cilium-operator    Running: 1
                  hubble-relay       Running: 1
                  hubble-ui          Running: 1
Cluster Pods:     5/5 managed by Cilium
Image versions    cilium             quay.io/cilium/cilium-service-mesh:v1.11.0-beta.1: 4
                  cilium-operator    quay.io/cilium/operator-generic-service-mesh:v1.11.0-beta.1: 1
                  hubble-relay       quay.io/cilium/hubble-relay-service-mesh:v1.11.0-beta.1: 1
                  hubble-ui          quay.io/cilium/hubble-ui:v0.8.3: 1
                  hubble-ui          quay.io/cilium/hubble-ui-backend:v0.8.3: 1
                  hubble-ui          docker.io/envoyproxy/envoy:v1.18.2@sha256:e8b37c1d75787dd1e712ff389b0d37337dc8a174a63bed9c34ba73359dc67da7: 1
```

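Testing L7 Ingress Traffic Management

Installing a load balancer

Here we install MetalLB in the KIND cluster so that we can use Services of type LoadBalancer (Cilium creates a LoadBalancer Service for the Ingress by default). If you don't want to install MetalLB, you can use NodePort instead.

I won't explain each step in detail; just run the commands below. Note that they fetch the manifests from MetalLB's `master` branch as it was at the time of writing (December 2021). MetalLB v0.13 and later configure address pools through CRDs instead of the ConfigMap used here, so pin an older release (for example v0.12.x) if you want to reproduce these steps exactly.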

```
➜ cilium-mesh kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/master/manifests/namespace.yaml
namespace/metallb-system created
➜ cilium-mesh kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"
secret/memberlist created
➜ cilium-mesh kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/master/manifests/metallb.yaml
Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
podsecuritypolicy.policy/controller created
podsecuritypolicy.policy/speaker created
serviceaccount/controller created
serviceaccount/speaker created
clusterrole.rbac.authorization.k8s.io/metallb-system:controller created
clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created
role.rbac.authorization.k8s.io/config-watcher created
role.rbac.authorization.k8s.io/pod-lister created
role.rbac.authorization.k8s.io/controller created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created
rolebinding.rbac.authorization.k8s.io/config-watcher created
rolebinding.rbac.authorization.k8s.io/pod-lister created
rolebinding.rbac.authorization.k8s.io/controller created
daemonset.apps/speaker created
deployment.apps/controller created
➜ cilium-mesh docker network inspect -f '{{.IPAM.Config}}' kind
[{172.18.0.0/16 172.18.0.1 map[]} {fc00:f853:ccd:e793::/64 fc00:f853:ccd:e793::1 map[]}]
➜ cilium-mesh vim kind-lb-cm.yaml
➜ cilium-mesh cat kind-lb-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.18.255.200-172.18.255.250
➜ cilium-mesh kubectl apply -f kind-lb-cm.yaml
configmap/config created
```
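The pool `172.18.255.200-172.18.255.250` is carved out of the `kind` Docker network's IPv4 subnet (`172.18.0.0/16` in the `docker network inspect` output), so the addresses MetalLB hands out are reachable from the host. If your `kind` network uses a different /16, a minimal sketch (hypothetical helper `lb_range_from_subnet`, which deliberately handles only /16 subnets) derives the equivalent range:

```bash
#!/usr/bin/env bash
# Derive a MetalLB layer2 address range from the kind network's IPv4 subnet,
# using the same x.y.255.200-x.y.255.250 block as the ConfigMap above.
# Assumes a /16 IPv4 subnet such as 172.18.0.0/16.
lb_range_from_subnet() {
  local subnet="$1" prefix
  case "$subnet" in
    */16) ;;
    *) echo "only /16 subnets are handled here: $subnet" >&2; return 1 ;;
  esac
  # Keep the first two octets, e.g. "172.18" from "172.18.0.0/16".
  prefix=$(printf '%s' "${subnet%/*}" | cut -d. -f1-2)
  echo "${prefix}.255.200-${prefix}.255.250"
}
```

For example, `lb_range_from_subnet "$(docker network inspect -f '{{range .IPAM.Config}}{{.Subnet}} {{end}}' kind | tr ' ' '\n' | grep -v ':' | head -n 1)"` prints `172.18.255.200-172.18.255.250` on the network shown above.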

Preloading images

Here we use `hashicorp/http-echo:0.2.3` as the sample application; it responds with different content depending on its startup arguments.

```
➜ cilium-mesh docker pull hashicorp/http-echo:0.2.3
0.2.3: Pulling from hashicorp/http-echo
86399148984b: Pull complete
Digest: sha256:ba27d460cd1f22a1a4331bdf74f4fccbc025552357e8a3249c40ae216275de96
Status: Downloaded newer image for hashicorp/http-echo:0.2.3
docker.io/hashicorp/http-echo:0.2.3
➜ cilium-mesh kind load docker-image hashicorp/http-echo:0.2.3
Image: "hashicorp/http-echo:0.2.3" with ID "sha256:a6838e9a6ff6ab3624720a7bd36152dda540ce3987714398003e14780e61478a" not yet present on node "kind-worker", loading...
Image: "hashicorp/http-echo:0.2.3" with ID "sha256:a6838e9a6ff6ab3624720a7bd36152dda540ce3987714398003e14780e61478a" not yet present on node "kind-worker2", loading...
Image: "hashicorp/http-echo:0.2.3" with ID "sha256:a6838e9a6ff6ab3624720a7bd36152dda540ce3987714398003e14780e61478a" not yet present on node "kind-control-plane", loading...
Image: "hashicorp/http-echo:0.2.3" with ID "sha256:a6838e9a6ff6ab3624720a7bd36152dda540ce3987714398003e14780e61478a" not yet present on node "kind-worker3", loading...
```

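Deploying the test services

All configuration files used in this article are available in the https://github.com/tao12345666333/practical-kubernetes/tree/main/cilium-mesh repository.

We deploy the test services with the following configuration: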

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: foo-app
  name: foo-app
spec:
  containers:
  - image: hashicorp/http-echo:0.2.3
    args:
    - "-text=foo"
    name: foo-app
    ports:
    - containerPort: 5678
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    run: foo-app
  name: foo-app
spec:
  ports:
  - port: 5678
    protocol: TCP
    targetPort: 5678
  selector:
    run: foo-app
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: bar-app
  name: bar-app
spec:
  containers:
  - image: hashicorp/http-echo:0.2.3
    args:
    - "-text=bar"
    name: bar-app
    ports:
    - containerPort: 5678
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  labels:
    run: bar-app
  name: bar-app
spec:
  ports:
  - port: 5678
    protocol: TCP
    targetPort: 5678
  selector:
    run: bar-app
```

Create the following Ingress manifest (`cilium-ingress.yaml`):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: cilium-ingress
  namespace: default
spec:
  ingressClassName: cilium
  rules:
  - http:
      paths:
      - backend:
          service:
            name: foo-app
            port:
              number: 5678
        path: /foo
        pathType: Prefix
      - backend:
          service:
            name: bar-app
            port:
              number: 5678
        path: /bar
        pathType: Prefix
```
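With `pathType: Prefix`, the Kubernetes Ingress spec matches the path element by element, split on `/`, so `/foo` covers `/foo` and `/foo/...` but not `/foobar`. As a rough model of the two rules above (a hypothetical helper `backend_for_path`, not Cilium's implementation; I haven't checked the beta against every edge case):

```bash
#!/usr/bin/env bash
# Model of how the two Prefix rules above should route a request path,
# following the Kubernetes Ingress semantics for pathType: Prefix
# (matching on whole "/"-separated path elements).
backend_for_path() {
  case "$1" in
    /foo | /foo/*) echo "foo-app" ;;
    /bar | /bar/*) echo "bar-app" ;;
    *) echo "no-match" ;;
  esac
}
```

In this model `/` matches neither rule, which fits the empty output of `curl 172.18.255.200` in the test that follows.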

Create the Ingress resource, and you'll see that a new Service of type LoadBalancer has been created.

```
➜ cilium-mesh kubectl apply -f cilium-ingress.yaml
ingress.networking.k8s.io/cilium-ingress created
➜ cilium-mesh kubectl get svc
NAME                            TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)        AGE
bar-app                         ClusterIP      10.96.229.141   <none>           5678/TCP       106s
cilium-ingress-cilium-ingress   LoadBalancer   10.96.161.128   172.18.255.200   80:31643/TCP   4s
foo-app                         ClusterIP      10.96.166.212   <none>           5678/TCP       106s
kubernetes                      ClusterIP      10.96.0.1       <none>           443/TCP        81m
➜ cilium-mesh kubectl get ing
NAME             CLASS    HOSTS   ADDRESS          PORTS   AGE
cilium-ingress   cilium   *       172.18.255.200   80      1m
```

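Testing

Access the Ingress with curl: the responses follow the rules in the Ingress resource, and `/`, which matches neither rule, prints nothing. The response headers also show that the proxying is actually still done by Envoy.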

```
➜ cilium-mesh curl 172.18.255.200
➜ cilium-mesh curl 172.18.255.200/foo
foo
➜ cilium-mesh curl 172.18.255.200/bar
bar
➜ cilium-mesh curl -I 172.18.255.200/bar
HTTP/1.1 200 OK
Content-Length: 4
Connection: keep-alive
Content-Type: text/plain; charset=utf-8
Date: Sat, 18 Dec 2021 06:02:56 GMT
Keep-Alive: timeout=4
Proxy-Connection: keep-alive
Server: envoy
X-App-Name: http-echo
X-App-Version: 0.2.3
X-Envoy-Upstream-Service-Time: 0

➜ cilium-mesh curl -I 172.18.255.200/foo
HTTP/1.1 200 OK
Content-Length: 4
Connection: keep-alive
Content-Type: text/plain; charset=utf-8
Date: Sat, 18 Dec 2021 06:03:01 GMT
Keep-Alive: timeout=4
Proxy-Connection: keep-alive
Server: envoy
X-App-Name: http-echo
X-App-Version: 0.2.3
X-Envoy-Upstream-Service-Time: 0
```
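Looking for the `Server: envoy` and `X-Envoy-*` headers is a quick heuristic for whether a response passed through Envoy. A minimal sketch (hypothetical helper `served_by_envoy`); a proxy can be configured to rewrite or drop these headers, so treat a miss as inconclusive:

```bash
#!/usr/bin/env bash
# Reads HTTP response headers on stdin (e.g. from `curl -sI`) and succeeds
# if they carry Envoy's markers: "server: envoy" or any x-envoy-* header.
# Header names are case-insensitive, hence grep -i.
served_by_envoy() {
  grep -Eqi '^(server: envoy|x-envoy-)'
}
```

For example: `curl -sI 172.18.255.200/foo | served_by_envoy && echo "proxied by Envoy"`.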

Testing CiliumEnvoyConfig

Deploying Cilium as described above also installs several CRDs. One of them, CiliumEnvoyConfig, is used to configure the proxying between services.

```
➜ cilium-mesh kubectl api-resources |grep cilium.io
ciliumclusterwidenetworkpolicies   ccnp           cilium.io/v2         false   CiliumClusterwideNetworkPolicy
ciliumendpoints                    cep,ciliumep   cilium.io/v2         true    CiliumEndpoint
ciliumenvoyconfigs                 cec            cilium.io/v2alpha1   false   CiliumEnvoyConfig
ciliumexternalworkloads            cew            cilium.io/v2         false   CiliumExternalWorkload
ciliumidentities                   ciliumid       cilium.io/v2         false   CiliumIdentity
ciliumnetworkpolicies              cnp,ciliumnp   cilium.io/v2         true    CiliumNetworkPolicy
ciliumnodes                        cn,ciliumn     cilium.io/v2         false   CiliumNode
```

Deploying the test services

First, start a port-forward to Hubble:

```
➜ cilium-mesh cilium hubble port-forward
```

By default it listens on port 4245. If you skip this step, you'll see the following:

```
🔭 Enabling Hubble telescope...
⚠️ Unable to contact Hubble Relay, disabling Hubble telescope and flow validation: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial tcp [::1]:4245: connect: connection refused"
```
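If you start the port-forward in the background from a script, you can wait until port 4245 accepts connections before running the test, which avoids the error above. A minimal sketch, assuming bash (it uses bash's `/dev/tcp` pseudo-device) and a hypothetical helper name `wait_for_port`; individual connection attempts have no timeout of their own, which is fine for `localhost`:

```bash
#!/usr/bin/env bash
# Poll until something accepts TCP connections on host:port, e.g. the
# Hubble Relay port-forward on localhost:4245.
# Arguments: host, port, number of attempts (default 30, one per second).
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i
  for ((i = 0; i < tries; i++)); do
    # Opening the pseudo-device attempts a TCP connect; the subshell
    # closes the descriptor again when it exits.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example: `cilium hubble port-forward &` followed by `wait_for_port localhost 4245 && cilium connectivity test --test egress-l7`.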

With the Hubble port-forward running, the output should normally look like this:

```
➜ cilium-mesh cilium connectivity test --test egress-l7
ℹ️ Monitor aggregation detected, will skip some flow validation steps
⌛ [kind-kind] Waiting for deployments [client client2 echo-same-node] to become ready...
⌛ [kind-kind] Waiting for deployments [echo-other-node] to become ready...
⌛ [kind-kind] Waiting for CiliumEndpoint for pod cilium-test/client-6488dcf5d4-pk6w9 to appear...
⌛ [kind-kind] Waiting for CiliumEndpoint for pod cilium-test/client2-5998d566b4-hrhrb to appear...
⌛ [kind-kind] Waiting for CiliumEndpoint for pod cilium-test/echo-other-node-f4d46f75b-bqpcb to appear...
⌛ [kind-kind] Waiting for CiliumEndpoint for pod cilium-test/echo-same-node-745bd5c77-zpzdn to appear...
⌛ [kind-kind] Waiting for Service cilium-test/echo-other-node to become ready...
⌛ [kind-kind] Waiting for Service cilium-test/echo-same-node to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.5:32751 (cilium-test/echo-other-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.5:32133 (cilium-test/echo-same-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.3:32133 (cilium-test/echo-same-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.3:32751 (cilium-test/echo-other-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.2:32751 (cilium-test/echo-other-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.2:32133 (cilium-test/echo-same-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.4:32751 (cilium-test/echo-other-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.4:32133 (cilium-test/echo-same-node) to become ready...
ℹ️ Skipping IPCache check
⌛ [kind-kind] Waiting for pod cilium-test/client-6488dcf5d4-pk6w9 to reach default/kubernetes service...
⌛ [kind-kind] Waiting for pod cilium-test/client2-5998d566b4-hrhrb to reach default/kubernetes service...
🔭 Enabling Hubble telescope...
ℹ️ Hubble is OK, flows: 16380/16380
🏃 Running tests...
[=] Skipping Test [no-policies]
[=] Skipping Test [allow-all]
[=] Skipping Test [client-ingress]
[=] Skipping Test [echo-ingress]
[=] Skipping Test [client-egress]
[=] Skipping Test [to-entities-world]
[=] Skipping Test [to-cidr-1111]
[=] Skipping Test [echo-ingress-l7]
[=] Test [client-egress-l7]
..........
[=] Skipping Test [dns-only]
[=] Skipping Test [to-fqdns]
✅ All 1 tests (10 actions) successful, 10 tests skipped, 0 scenarios skipped.
```

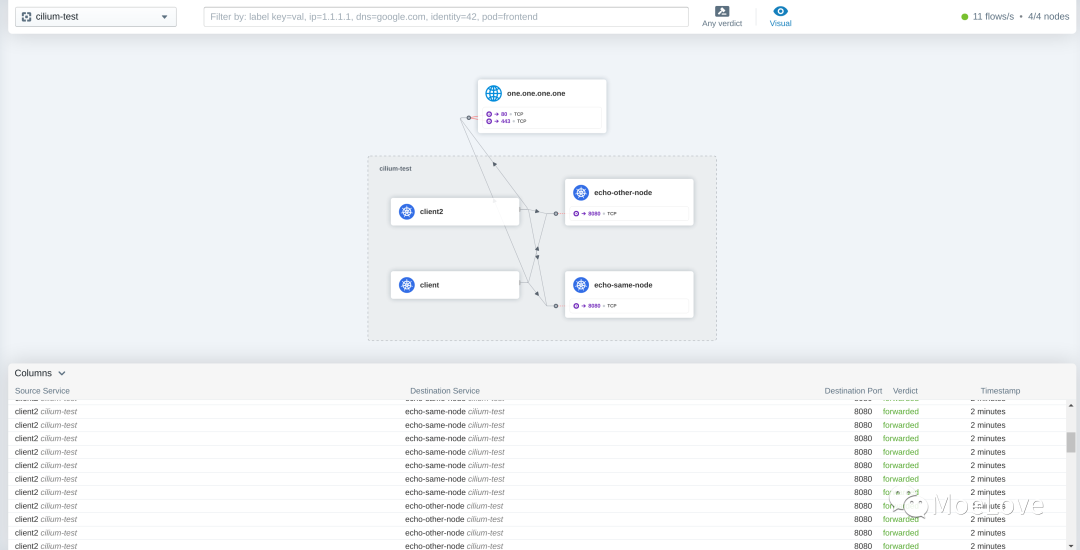

We can also open the UI at the same time:

```
➜ cilium-mesh cilium hubble ui
ℹ️ Opening "http://localhost:12000" in your browser...
```

The result looks like this:

The connectivity test actually deploys the following resources:

```
➜ cilium-mesh kubectl -n cilium-test get all
NAME                                  READY   STATUS    RESTARTS   AGE
pod/client-6488dcf5d4-pk6w9           1/1     Running   0          66m
pod/client2-5998d566b4-hrhrb          1/1     Running   0          66m
pod/echo-other-node-f4d46f75b-bqpcb   1/1     Running   0          66m
pod/echo-same-node-745bd5c77-zpzdn    1/1     Running   0          66m

NAME                      TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
service/echo-other-node   NodePort   10.96.124.211   <none>        8080:32751/TCP   66m
service/echo-same-node    NodePort   10.96.136.252   <none>        8080:32133/TCP   66m

NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/client            1/1     1            1           66m
deployment.apps/client2           1/1     1            1           66m
deployment.apps/echo-other-node   1/1     1            1           66m
deployment.apps/echo-same-node    1/1     1            1           66m

NAME                                        DESIRED   CURRENT   READY   AGE
replicaset.apps/client-6488dcf5d4           1         1         1       66m
replicaset.apps/client2-5998d566b4          1         1         1       66m
replicaset.apps/echo-other-node-f4d46f75b   1         1         1       66m
replicaset.apps/echo-same-node-745bd5c77    1         1         1       66m
```

We can also look at their labels:

```
➜ cilium-mesh kubectl get pods -n cilium-test --show-labels -o wide
NAME                              READY   STATUS    RESTARTS   AGE   IP             NODE           NOMINATED NODE   READINESS GATES   LABELS
client-6488dcf5d4-pk6w9           1/1     Running   0          67m   10.244.3.7     kind-worker3   <none>           <none>            kind=client,name=client,pod-template-hash=6488dcf5d4
client2-5998d566b4-hrhrb          1/1     Running   0          67m   10.244.3.18    kind-worker3   <none>           <none>            kind=client,name=client2,other=client,pod-template-hash=5998d566b4
echo-other-node-f4d46f75b-bqpcb   1/1     Running   0          67m   10.244.1.146   kind-worker2   <none>           <none>            kind=echo,name=echo-other-node,pod-template-hash=f4d46f75b
echo-same-node-745bd5c77-zpzdn    1/1     Running   0          67m   10.244.3.164   kind-worker3   <none>           <none>            kind=echo,name=echo-same-node,other=echo,pod-template-hash=745bd5c77
```

Testing

Here we work from the host: first record the name of the client2 Pod, then use the `hubble` CLI to follow all traffic originating from that Pod.

```
➜ cilium-mesh export CLIENT2=client2-5998d566b4-hrhrb
➜ cilium-mesh hubble observe --from-pod cilium-test/$CLIENT2 -f
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:44805 <> kube-system/coredns-78fcd69978-7lbwh:53 to-overlay FORWARDED (UDP)
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:44805 -> kube-system/coredns-78fcd69978-7lbwh:53 to-endpoint FORWARDED (UDP)
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:44805 <> kube-system/coredns-78fcd69978-7lbwh:53 to-overlay FORWARDED (UDP)
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:44805 -> kube-system/coredns-78fcd69978-7lbwh:53 to-endpoint FORWARDED (UDP)
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: SYN)
Dec 18 14:07:37.201: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: ACK)
Dec 18 14:07:37.201: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:07:37.202: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:07:37.203: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: ACK)
Dec 18 14:07:50.769: cilium-test/client2-5998d566b4-hrhrb:36768 <> kube-system/coredns-78fcd69978-7lbwh:53 to-overlay FORWARDED (UDP)
Dec 18 14:07:50.769: cilium-test/client2-5998d566b4-hrhrb:36768 <> kube-system/coredns-78fcd69978-7lbwh:53 to-overlay FORWARDED (UDP)
Dec 18 14:07:50.769: cilium-test/client2-5998d566b4-hrhrb:36768 -> kube-system/coredns-78fcd69978-7lbwh:53 to-endpoint FORWARDED (UDP)
Dec 18 14:07:50.769: cilium-test/client2-5998d566b4-hrhrb:36768 -> kube-system/coredns-78fcd69978-7lbwh:53 to-endpoint FORWARDED (UDP)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: SYN)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: SYN)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: ACK)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: ACK)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:07:50.771: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:07:50.771: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:07:50.772: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: ACK)
Dec 18 14:07:50.772: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: ACK)
```

The output above was produced by running the following commands:

```
kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-same-node:8080/
kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-other-node:8080/
```

Almost every entry in the log is either `to-endpoint` or `to-overlay`.
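Rather than scanning the log by eye, you can tally the events. A minimal sketch (hypothetical helper `summarize_flows`) that assumes the default one-line text format shown above, where the 7th and 8th whitespace-separated fields are the event type and the verdict:

```bash
#!/usr/bin/env bash
# Tally `hubble observe` text output (read from stdin) by event type and
# verdict, e.g. "12 to-overlay FORWARDED". Assumes the default one-line
# format: date, time, source, arrow, destination, type, verdict, summary.
summarize_flows() {
  awk 'NF >= 8 { count[$7 " " $8]++ }
       END { for (k in count) print count[k], k }' | LC_ALL=C sort -k2
}
```

For example: `hubble observe --from-pod cilium-test/$CLIENT2 --last 100 | summarize_flows`. Once L7 policies are in place, `to-proxy` entries should show up in the tally as well.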

Testing with the proxy

First we need to install network policies, which we can take directly from the Cilium CLI repository.

```
kubectl apply -f https://raw.githubusercontent.com/cilium/cilium-cli/master/connectivity/manifests/client-egress-l7-http.yaml
kubectl apply -f https://raw.githubusercontent.com/cilium/cilium-cli/master/connectivity/manifests/client-egress-only-dns.yaml
```
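I haven't reproduced the two upstream manifests here. As a hypothetical illustration of the kind of rule involved (the policy name `l7-get-only` and file name are made up, and this is not the content of those files), here is a CiliumNetworkPolicy that lets Pods labelled `other=client` (client2, per the `--show-labels` output above) send only `GET /` to Pods labelled `kind=echo`, plus DNS to kube-dns so that name resolution keeps working once egress is restricted. An L7 `http` rule makes Cilium steer the connection through its Envoy proxy, and the `dns` rule goes through Cilium's DNS proxy, which is where `dns-request` events come from:

```bash
#!/usr/bin/env bash
# Write a hypothetical L7 policy to a file; apply it with kubectl yourself.
cat > l7-get-only.yaml <<'EOF'
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: l7-get-only
  namespace: cilium-test
spec:
  endpointSelector:
    matchLabels:
      other: client
  egress:
  # Only "GET /" to the echo pods on 8080 (path is a regex, anchored here).
  - toEndpoints:
    - matchLabels:
        kind: echo
    toPorts:
    - ports:
      - port: "8080"
        protocol: TCP
      rules:
        http:
        - method: GET
          path: "^/$"
  # Allow DNS lookups via kube-dns, inspected by Cilium's DNS proxy.
  - toEndpoints:
    - matchLabels:
        k8s:io.kubernetes.pod.namespace: kube-system
        k8s:k8s-app: kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: ANY
      rules:
        dns:
        - matchPattern: "*"
EOF
```

If you want to experiment, apply it with `kubectl apply -f l7-get-only.yaml`; with a rule like this, `GET /` should be forwarded while other paths or methods get Envoy's `403 Access denied`.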

Then repeat the earlier request to `echo-other-node`; Hubble now shows:

```
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 L3-L4 REDIRECTED (UDP)
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-other-node.cilium-test.svc.cluster.local. A)
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-other-node.cilium-test.svc.cluster.local. AAAA)
Dec 18 14:33:40.571: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 L3-L4 REDIRECTED (TCP Flags: SYN)
Dec 18 14:33:40.571: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: SYN)
Dec 18 14:33:40.571: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 14:33:40.571: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:33:40.572: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-other-node:8080/)
Dec 18 14:33:40.573: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:33:40.573: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK)
```

Run the other request:

```
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-same-node:8080/
```

Again the output contains `to-proxy` entries:

```
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 L3-L4 REDIRECTED (UDP)
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 L3-L4 REDIRECTED (TCP Flags: SYN)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: SYN)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)
Dec 18 14:45:18.859: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:45:18.859: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)
```

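The response headers make this even easier to see. Note that `curl -I` sends a `HEAD` request, while the `GET /` above was forwarded, so this `403` is presumably the L7 policy rejecting the method rather than the path; that would also explain why later requests use `curl -X GET -I`: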

```
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -I echo-same-node:8080/
HTTP/1.1 403 Forbidden
content-length: 15
content-type: text/plain
date: Sat, 18 Dec 2021 14:47:39 GMT
server: envoy
```

Before the policy was applied, the same endpoint responded like this:

```
# without the proxy
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-same-node:8080/
*   Trying 10.96.136.252:8080...
* Connected to echo-same-node (10.96.136.252) port 8080 (#0)
> GET / HTTP/1.1
> Host: echo-same-node:8080
> User-Agent: curl/7.78.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< X-Powered-By: Express
< Vary: Origin, Accept-Encoding
< Access-Control-Allow-Credentials: true
< Accept-Ranges: bytes
< Cache-Control: public, max-age=0
< Last-Modified: Sat, 26 Oct 1985 08:15:00 GMT
< ETag: W/"809-7438674ba0"
< Content-Type: text/html; charset=UTF-8
< Content-Length: 2057
< Date: Sat, 18 Dec 2021 14:07:37 GMT
< Connection: keep-alive
< Keep-Alive: timeout=5
```

Request a path that doesn't exist:

Previously this returned 404; now it returns 403 with the following content:

```
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-same-node:8080/foo
*   Trying 10.96.136.252:8080...
* Connected to echo-same-node (10.96.136.252) port 8080 (#0)
> GET /foo HTTP/1.1
> Host: echo-same-node:8080
> User-Agent: curl/7.78.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 403 Forbidden
< content-length: 15
< content-type: text/plain
< date: Sat, 18 Dec 2021 14:50:38 GMT
< server: envoy
<
Access denied
* Connection #0 to host echo-same-node left intact
```

The log again shows `to-proxy` throughout, and the `http-request` event for `/foo` is now `DROPPED`:

```
Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 L3-L4 REDIRECTED (UDP)
Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 to-proxy FORWARDED (UDP)
Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 to-proxy FORWARDED (UDP)
Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)
Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)
Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 L3-L4 REDIRECTED (TCP Flags: SYN)
Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: SYN)
Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request DROPPED (HTTP/1.1 GET http://echo-same-node:8080/foo)
Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:50:39.187: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)
```
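To pull out only the L7 decisions from a log like this, a minimal sketch (hypothetical helper `http_verdicts`) prints the verdict, method and URL of each `http-request` event, assuming Hubble's default one-line text format:

```bash
#!/usr/bin/env bash
# From `hubble observe` text output on stdin, print one line per L7 HTTP
# event: verdict, method and URL, e.g. "DROPPED GET http://host:8080/foo".
http_verdicts() {
  awk '$7 == "http-request" {
    url = $NF
    sub(/\)$/, "", url)   # drop the closing parenthesis of "(HTTP/1.1 ...)"
    print $8, $(NF - 1), url
  }'
}
```

For example: `hubble observe --from-pod cilium-test/$CLIENT2 --last 50 | http_verdicts`.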

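Next we use the following CiliumEnvoyConfig as the Envoy configuration. It defines a listener, a route that splits traffic 50/50 between the two echo Services with retries on 5xx, and a regex rewrite that turns `/foo...` paths into `/`: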

```yaml
apiVersion: cilium.io/v2alpha1
kind: CiliumEnvoyConfig
metadata:
  name: envoy-lb-listener
spec:
  services:
    - name: echo-other-node
      namespace: cilium-test
    - name: echo-same-node
      namespace: cilium-test
  resources:
    - "@type": type.googleapis.com/envoy.config.listener.v3.Listener
      name: envoy-lb-listener
      filter_chains:
        - filters:
            - name: envoy.filters.network.http_connection_manager
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                stat_prefix: envoy-lb-listener
                rds:
                  route_config_name: lb_route
                http_filters:
                  - name: envoy.filters.http.router
    - "@type": type.googleapis.com/envoy.config.route.v3.RouteConfiguration
      name: lb_route
      virtual_hosts:
        - name: "lb_route"
          domains: ["*"]
          routes:
            - match:
                prefix: "/"
              route:
                weighted_clusters:
                  clusters:
                    - name: "cilium-test/echo-same-node"
                      weight: 50
                    - name: "cilium-test/echo-other-node"
                      weight: 50
                retry_policy:
                  retry_on: 5xx
                  num_retries: 3
                  per_try_timeout: 1s
                regex_rewrite:
                  pattern:
                    google_re2: {}
                    regex: "^/foo.*$"
                  substitution: "/"
    - "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
      name: "cilium-test/echo-same-node"
      connect_timeout: 5s
      lb_policy: ROUND_ROBIN
      type: EDS
      outlier_detection:
        split_external_local_origin_errors: true
        consecutive_local_origin_failure: 2
    - "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
      name: "cilium-test/echo-other-node"
      connect_timeout: 3s
      lb_policy: ROUND_ROBIN
      type: EDS
      outlier_detection:
        split_external_local_origin_errors: true
        consecutive_local_origin_failure: 2
```
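To check which request paths the `regex_rewrite` affects, here is an approximation with `sed -E` (hypothetical helper `rewrite_path`). Envoy evaluates the pattern with RE2; for a pattern this simple I'd expect POSIX ERE to agree, but it is not a general substitute. One thing it makes visible: unlike an Ingress `Prefix` rule, `^/foo.*$` also rewrites `/foobar`.

```bash
#!/usr/bin/env bash
# Approximates the regex_rewrite above (regex "^/foo.*$", substitution "/")
# using POSIX extended regular expressions via sed -E.
rewrite_path() {
  printf '%s\n' "$1" | sed -E 's#^/foo.*$#/#'
}
```

For example, `rewrite_path /foo/anything` prints `/`, while `rewrite_path /bar` leaves the path unchanged.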

Sending the test request again, we now get a proper response:

```
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -X GET -I echo-same-node:8080/
HTTP/1.1 200 OK
x-powered-by: Express
vary: Origin, Accept-Encoding
access-control-allow-credentials: true
accept-ranges: bytes
cache-control: public, max-age=0
last-modified: Sat, 26 Oct 1985 08:15:00 GMT
etag: W/"809-7438674ba0"
content-type: text/html; charset=UTF-8
content-length: 2057
date: Sat, 18 Dec 2021 15:00:01 GMT
x-envoy-upstream-service-time: 1
server: envoy
```

Requests to `/foo` now also get a proper response:

```
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -X GET -I echo-same-node:8080/foo
HTTP/1.1 200 OK
x-powered-by: Express
vary: Origin, Accept-Encoding
access-control-allow-credentials: true
accept-ranges: bytes
cache-control: public, max-age=0
last-modified: Sat, 26 Oct 1985 08:15:00 GMT
etag: W/"809-7438674ba0"
content-type: text/html; charset=UTF-8
content-length: 2057
date: Sat, 18 Dec 2021 15:01:40 GMT
x-envoy-upstream-service-time: 2
server: envoy
```

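Meanwhile, the flow log for the /foo request looks like this. The request was rewritten into a request for /, as the http-request line shows (GET http://echo-same-node:8080/):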

Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 L3-L4 REDIRECTED (UDP)
Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)
Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)
Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 none REDIRECTED (TCP Flags: SYN)
Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: SYN)
Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53048 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53048 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)
Dec 18 15:02:22.543: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)
Dec 18 15:02:22.544: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)
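The rewrite comes from the `regex_rewrite` block in the route: any path matching `^/foo.*$` is replaced with `/`. The Python sketch below emulates that rule with the standard `re` module, so you can check which paths would be rewritten before applying the config. This is an approximation: Envoy evaluates the pattern with RE2, and Python's `re` behaves the same for this simple pattern but not for every regex. `rewrite_path` is a hypothetical helper name.

```python
import re

# Pattern and substitution copied from the regex_rewrite section of the route.
PATTERN = re.compile(r"^/foo.*$")
SUBSTITUTION = "/"


def rewrite_path(path):
    """Return the path as it would look after the regex_rewrite rule."""
    return PATTERN.sub(SUBSTITUTION, path)


for path in ["/foo", "/foo/bar", "/foobar", "/", "/bar/foo"]:
    print(f"{path!r:12} -> {rewrite_path(path)!r}")
```

Note that `/foobar` is rewritten too, because the pattern does not require a `/` after `foo`; `/bar/foo` is left alone because of the `^` anchor.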

After sending several more requests, the logs look like this:

Dec 18 15:07:20.883: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 L3-L4 REDIRECTED (UDP)
Dec 18 15:07:20.883: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 15:07:20.883: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 15:07:20.883: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)
Dec 18 15:07:20.884: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)
Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 none REDIRECTED (TCP Flags: SYN)
Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: SYN)
Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53064 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53064 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)
Dec 18 15:07:20.886: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)
Dec 18 15:07:20.886: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 15:07:26.086: cilium-test/client2-5998d566b4-hrhrb:53048 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 15:07:44.739: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 L3-L4 REDIRECTED (UDP)
Dec 18 15:07:44.739: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 to-proxy FORWARDED (UDP)
Dec 18 15:07:44.740: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 to-proxy FORWARDED (UDP)
Dec 18 15:07:44.740: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)
Dec 18 15:07:44.740: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)
Dec 18 15:07:44.741: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 none REDIRECTED (TCP Flags: SYN)
Dec 18 15:07:44.741: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: SYN)
Dec 18 15:07:44.741: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 15:07:44.741: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 15:07:44.742: cilium-test/client2-5998d566b4-hrhrb:53068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 15:07:44.742: cilium-test/client2-5998d566b4-hrhrb:53068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)
Dec 18 15:07:44.744: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)
Dec 18 15:07:44.744: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)

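As you can see, load balancing really works: the request at 15:07:20 went to the Pod echo-same-node-745bd5c77-zpzdn, while the one at 15:07:44, although still addressed to echo-same-node, was forwarded to the Pod echo-other-node-f4d46f75b-bqpcb.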
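With only a few requests, checking by eye is enough, but for a larger sample you could tally which backend Pod received each `http-request` event. The Python sketch below does this over flow-log lines in the format shown above. The regex and the helper name `count_backends` are assumptions based only on these sample lines, not on a documented output format, so adjust them if your log lines look different.

```python
import re
from collections import Counter

# Captures the destination Pod of an http-request flow line, e.g.
# "... -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 http-request FORWARDED ..."
HTTP_REQUEST = re.compile(r"-> (\S+?):\d+ http-request FORWARDED")


def count_backends(lines):
    """Count how many HTTP requests each destination Pod received."""
    counts = Counter()
    for line in lines:
        match = HTTP_REQUEST.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


LOG = """\
Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53064 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)
Dec 18 15:07:44.741: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 15:07:44.742: cilium-test/client2-5998d566b4-hrhrb:53068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)
"""

# Only http-request lines are counted; TCP and DNS flow lines are ignored.
for pod, n in count_backends(LOG.splitlines()).items():
    print(pod, n)
```

With the 50/50 weights in the route, the two Pods should end up with roughly equal counts once enough requests have been sent.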

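Summary

In this article, I showed you how to deploy Cilium Service Mesh and, through two examples, walked you through how it works in practice.

Overall, this approach brings some convenience, but traffic configuration between services relies mainly on CiliumEnvoyConfig, which is not very convenient to work with.

Let's look forward to how it evolves!

PS: All configuration files used in this article are available in the repository at https://github.com/tao12345666333/practical-kubernetes/tree/main/cilium-mesh .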